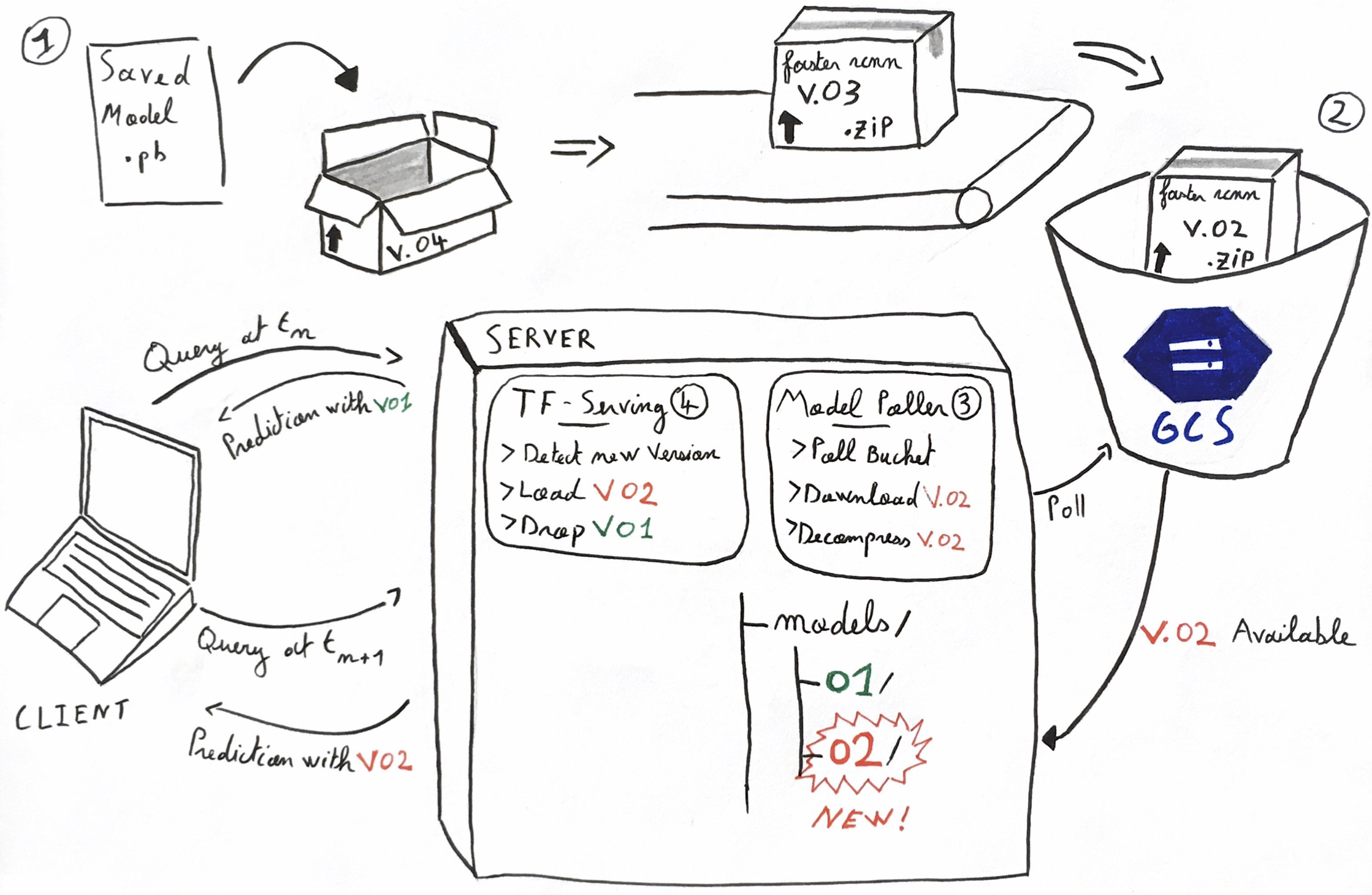

This repository illustrates the sidecar container design pattern applied to TensorFlow Serving to automatically pull new model versions from a storage bucket.

The goal of this pattern is to use a vanilla `tensorflow/serving` container coupled with a sidecar container that polls a storage bucket for new versions of a model.

When a new version of the model is pushed to the storage bucket, it is downloaded, decompressed and moved to the directory that TensorFlow Serving uses to load models. TensorFlow Serving then automatically loads the new version, serves it and gracefully stops serving the previous version.
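The "decompress and move" step can be sketched as follows. This is a minimal illustration, not the code shipped in this repository: the function name `install_model_version` is hypothetical, and it assumes the archive contains a single top-level version folder such as `0002/`. The archive is extracted into a staging directory next to the model directory and only then moved into place, so TensorFlow Serving should not see a half-extracted version.

```python
import os
import shutil
import tempfile
import zipfile


def install_model_version(zip_path, model_dir):
    """Unpack a zipped version folder (e.g. 0002.zip) into model_dir.

    Hypothetical helper: assumes the archive holds exactly one top-level
    folder whose name is the numeric model version.
    """
    model_dir = os.path.abspath(model_dir)
    os.makedirs(model_dir, exist_ok=True)
    # Stage outside the watched directory, in its parent folder.
    staging = tempfile.mkdtemp(prefix="staging-", dir=os.path.dirname(model_dir))
    try:
        with zipfile.ZipFile(zip_path) as archive:
            archive.extractall(staging)
        entries = os.listdir(staging)
        if len(entries) != 1 or not entries[0].isdigit():
            raise ValueError(f"expected one numeric version folder, got {entries}")
        version = entries[0]
        target = os.path.join(model_dir, version)
        if os.path.exists(target):
            raise FileExistsError(f"version {version} is already installed")
        # Move the fully extracted folder into the watched directory.
        shutil.move(os.path.join(staging, version), target)
        return target
    finally:
        # Remove the staging directory whether or not the install succeeded.
        shutil.rmtree(staging, ignore_errors=True)
```

Staging in the parent folder keeps the move on the same volume in the common case where the whole tree is one shared mount; if that does not hold in your setup, `shutil.move` falls back to a copy and the move is no longer a single rename.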

- After training, a model is exported as a `saved_model` that can be used for inference.
- The folder containing the `saved_model` and its associated variables is named with a version number, e.g. `0002`. The folder `0002` is compressed into a `zip` and uploaded to a storage bucket.

```
# Structure of the versioned model folder
0002/
|
|--saved_model.pb
|
|--variables/
|   |
|   ...
```
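As an illustration of the packaging step, the sketch below zips a version folder so that the archive keeps `0002/` as its top-level entry. The helper name `zip_model_version` and the sanity checks are assumptions made for this example; the repository does not prescribe how the archive is built.

```python
import os
import shutil


def zip_model_version(version_dir, output_dir):
    """Compress a versioned model folder (e.g. .../0002) into <version>.zip.

    Hypothetical helper: checks that the folder name is numeric and that it
    contains a saved_model.pb before archiving it.
    """
    version_dir = os.path.abspath(version_dir)
    parent, version = os.path.split(version_dir)
    if not version.isdigit():
        raise ValueError(f"folder name must be a version number, got {version!r}")
    if not os.path.isfile(os.path.join(version_dir, "saved_model.pb")):
        raise FileNotFoundError("saved_model.pb not found in " + version_dir)
    output_dir = os.path.abspath(output_dir)
    os.makedirs(output_dir, exist_ok=True)
    # root_dir/base_dir make the archive paths start with "<version>/".
    return shutil.make_archive(
        os.path.join(output_dir, version), "zip", root_dir=parent, base_dir=version
    )
```

The resulting `0002.zip` can then be uploaded to the bucket with the console or `gsutil`.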

- In the server, two containers, `tensorflow/serving` and `model_poller`, are running and share the same local file system. `model_poller` polls the bucket and downloads any new version available for a given model into the folder that TensorFlow Serving watches for model sources.
- `tensorflow/serving` detects that a new version of the model is available and automatically loads it into memory. It starts serving incoming requests with the new version while it finishes processing the requests on the previous version. Once all the requests on the former version have been handled, that version is unloaded from memory. The version upgrade happens without service interruption.

A Docker image built from this repository's Dockerfile is available on Docker Hub. You can quickly test the pattern with this image and a TensorFlow Serving model.

- Set up a Google Cloud project by following the steps detailed in docs/gcloud_setup.md. From now on, I assume you have a valid GCP project, have created a storage bucket with a subscription and have a `json` service key. The IAM process is done with a Google Application Credentials `json` key passed to the container at runtime.
- The simplest way to reproduce the results is to pull the `model_poller` image.

```bash
docker pull popszer/model_poller:v0.1.6
```

- Run the `model_poller`

```bash
# Update the environment variables and the `model_poller` image version accordingly
# Declare environment variables
PROJECT_ID=tensorflow-serving-229609
SUBSCRIPTION=model_subscription
SAVE_DIR=/shared_dir/models
GOOGLE_APPLICATION_CREDENTIALS="/shared_dir/tf-key.json"

# Run model poller (the tag matches the image pulled above)
docker run --name model_poller -t \
  -v "/Users/fpaupier/Desktop/tst_poller:/shared_dir" \
  -e PROJECT_ID="$PROJECT_ID" \
  -e SUBSCRIPTION="$SUBSCRIPTION" \
  -e SAVE_DIR="$SAVE_DIR" \
  -e GOOGLE_APPLICATION_CREDENTIALS="$GOOGLE_APPLICATION_CREDENTIALS" \
  popszer/model_poller:v0.1.6 &
```

While the container is running, upload some files to the bucket (you could use the console or `gsutil`) and watch as changes scroll by in the `$SAVE_DIR`.
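To give an idea of what the poller has to decide for each message, here is a small sketch that filters notifications. It assumes the bucket publishes Cloud Storage Pub/Sub notifications, whose message attributes include `eventType`, `bucketId` and `objectId`; the function name `object_to_download` is hypothetical and this is not necessarily how `model_polling.py` handles messages.

```python
def object_to_download(attributes):
    """Return (bucket, object_name) if a notification announces a new zip.

    Assumption: `attributes` is the attribute mapping of a Cloud Storage
    Pub/Sub notification. Returns None for events that should be ignored.
    """
    # Only newly created (or overwritten) objects are of interest.
    if attributes.get("eventType") != "OBJECT_FINALIZE":
        return None
    bucket = attributes.get("bucketId")
    name = attributes.get("objectId", "")
    # Model versions are uploaded as zip archives, e.g. 0002.zip.
    if not bucket or not name.endswith(".zip"):
        return None
    return bucket, name
```

In a subscriber callback built with the `google-cloud-pubsub` client, the mapping would typically come from `message.attributes`; deletions and non-zip uploads are skipped.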

The `model_poller` is a sidecar container to be used with `tensorflow/serving`. The steps below are inspired by the official documentation and give some perspective on how to use the two containers together.

- Download the TensorFlow Serving Docker image and repo

```bash
docker pull tensorflow/serving
```

- Select a model to serve. For testing, you can find plenty of object detection models available in the model zoo.

I take `faster_rcnn_inception_v2_coco` as an example. The use case here is the following: you launch a first version `0001` of this model in production. After a while, you fine-tune some hyperparameters and train it again. Now you want to serve the version `0002`. On your server you have a `tensorflow/serving` Docker container running:

```bash
# Start the TensorFlow Serving container and open the REST API port
docker run -t --rm -p 8501:8501 \
  -v "/home/fpaupier/tf_models/saved_model_faster_rcnn:/models/faster_rcnn" \
  -e MODEL_NAME=faster_rcnn \
  tensorflow/serving &
```

The `model_poller` sidecar must download new versions of the model into the directory that `tensorflow/serving` uses as the model source, e.g. the `/home/fpaupier/tf_models/saved_model_faster_rcnn` folder here. The model with the highest version number is then served automatically (i.e. if the folder contains a folder `000x` and a folder `000y` with y > x, version y is served).
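The "highest version number wins" rule can be mimicked locally to check which folder should end up being served. The sketch below is only an approximation of that rule written for this README; `latest_version` is a hypothetical helper and not part of TensorFlow Serving.

```python
import os


def latest_version(model_dir):
    """Return the name of the numeric sub-folder with the highest version.

    Hypothetical helper: non-numeric entries and plain files are ignored.
    Returns None when no version folder is present.
    """
    versions = [
        name
        for name in os.listdir(model_dir)
        if name.isdigit() and os.path.isdir(os.path.join(model_dir, name))
    ]
    # Compare as integers so that "10" ranks above "0002".
    return max(versions, key=int) if versions else None
```

Comparing the names as integers rather than as strings matters as soon as folder names do not all share the same zero padding.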

Time to test: upload the compressed model to the bucket that the `model_poller` listens to, and `tensorflow/serving` will start to serve it.

The switch between model versions involves no downtime.
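One way to observe the switch is to query the model status endpoint of the TensorFlow Serving REST API (`GET /v1/models/<model_name>`), which reports a `model_version_status` list with a `state` per version. The sketch below assumes the container started above is reachable on `localhost:8501`; the helper names are hypothetical.

```python
import json
import urllib.request


def available_versions(status):
    """Extract the versions reported as AVAILABLE from a status response."""
    return sorted(
        int(entry["version"])
        for entry in status.get("model_version_status", [])
        if entry.get("state") == "AVAILABLE"
    )


def fetch_model_status(model_name, host="localhost", port=8501):
    """Query the model status endpoint (assumes the REST port is exposed)."""
    url = f"http://{host}:{port}/v1/models/{model_name}"
    with urllib.request.urlopen(url, timeout=5) as response:
        return json.load(response)


# Example usage against a running container:
# print(available_versions(fetch_model_status("faster_rcnn")))
```

Calling this in a loop while uploading a new archive should show the new version becoming available before the previous one disappears from the list.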

You may want to modify the `model_poller` to fit your use case (different IAM process, change of cloud provider, ...). The steps below give you guidelines to reproduce a working environment.

For testing and playing around you can use the local setup steps.

- Create a virtualenv

```bash
`which python3` -m venv model_polling
```

- Activate the virtualenv

```bash
source /path/to/model_polling/bin/activate
```

- Install the requirements

```bash
pip install -r requirements.txt
```

- This example project uses GCP as its cloud services provider. Make sure to authenticate your project. Create a service key, download it and set the environment variable accordingly:

```bash
export GOOGLE_APPLICATION_CREDENTIALS="/home/user/Downloads/[FILE_NAME].json"
```

- Run the model poller app

```bash
python model_polling.py $PROJECT_ID $SUBSCRIPTION $SAVE_DIR
```

- Finally, build the Docker image:

```bash
# Where vx.y.z follows semantic versioning --> https://semver.org/
docker build \
  -t model_poller:vx.y.z \
  --rm \
  --no-cache .
```

You can now push your image to an image repository and use it together with `tensorflow/serving` to continuously deploy new model versions in production with no downtime.
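If you adapt the poller, the command line used in the run step above (`python model_polling.py $PROJECT_ID $SUBSCRIPTION $SAVE_DIR`) suggests an entry point with three positional arguments. A minimal sketch of such an entry point is shown below; the argument names and help texts are assumptions, and the actual script may parse its arguments differently.

```python
import argparse


def parse_args(argv=None):
    """Parse the three positional arguments used in the run step.

    Hypothetical sketch: names mirror the environment variables used in
    this README, not necessarily those in model_polling.py.
    """
    parser = argparse.ArgumentParser(
        description="Poll a subscription and download new model versions."
    )
    parser.add_argument("project_id", help="GCP project identifier")
    parser.add_argument("subscription", help="Pub/Sub subscription to listen to")
    parser.add_argument("save_dir", help="directory where model versions are stored")
    return parser.parse_args(argv)
```

Keeping the three values as explicit arguments makes it straightforward to pass them from environment variables in the container, as done in the `docker run` example.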

This project is a proof of concept and can be enhanced in many ways:

- Delete local model files when models are removed from the bucket.
- Investigate different IAM processes.
- Propose a sample `k8s.yaml` configuration file that would be used to deploy the `model_poller` and `tensorflow/serving` containers at the same time.

This project is no longer updated and the architecture detailed here is not the most up to date. Refer to the official TensorFlow Serving documentation for up-to-date information and best practices.