Build target `libchatllm`:

- Windows (assuming MSVC is used):

  - Build target `libchatllm`: `cmake --build build --config Release --target libchatllm`

  - Copy `libchatllm.dll`, `libchatllm.lib` and `ggml.dll` to `bindings`.

- Other platforms, e.g. Linux:

  - Build target `libchatllm`: `cmake --build build --target libchatllm`

Run `chatllm.py` with exactly the same command line options.

For example,

- Linux: `python3 chatllm.py -i -m path/to/model`

- Windows: `python chatllm.py -i -m path/to/model`

There is also a Chatbot powered by Streamlit. To start it:

`streamlit run chatllm_st.py -- -i -m path/to/model`

Arguments after `--` are passed to `chatllm_st.py` rather than to Streamlit itself.

Note: the "STOP" function is not implemented yet.

`openai_api.py` is a server providing an OpenAI-compatible API. Note that most of the parameters are ignored. With it, one can start two servers, one for chatting and one for code completion (a base model supporting fill-in-the-middle is required), and set up a fully functional local copilot in Visual Studio Code with the help of tools like twinny.
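Because the server follows the OpenAI API, a plain HTTP client should be enough to talk to it. The sketch below builds a chat completion request using only Python's standard library. The base URL `http://127.0.0.1:8000` is a hypothetical placeholder and `/v1/chat/completions` is the usual OpenAI route, assumed here; check `openai_api.py` for the address it actually listens on. `build_chat_request` and `send` are illustrative helpers, not part of the bindings.

```python
import json
import urllib.request

# Hypothetical address: replace with the host/port openai_api.py actually uses.
BASE_URL = "http://127.0.0.1:8000"


def build_chat_request(prompt: str, model: str = "chat",
                       base_url: str = BASE_URL) -> urllib.request.Request:
    """Build (without sending) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        # Most parameters are ignored by the server, so the body stays minimal.
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and decode the reply, assuming a non-streaming JSON body."""
    with urllib.request.urlopen(request) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

With the server running, `send(build_chat_request("Write hello world in C."))` should return the decoded JSON reply. Building the request separately from sending it keeps the payload easy to inspect before any network call is made.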

`openai_api.py` takes two arguments specifying the models for chatting and code completion respectively. For example, use the instruction-tuned DeepSeekCoder for chatting and its base model for code completion:

`python openai_api.py path/to/deepseekcoder-1.3b.bin /path/to/deepseekcoder-1.3b-base.bin`

Additional arguments for each model can be specified too. For example:

`python openai_api.py path/to/chat/model /path/to/fim/model --temp 0 --top_k 2 --- --temp 0.8`

Arguments before `---` (here `--temp 0 --top_k 2`) are passed to the chatting model, while those after it (`--temp 0.8`) are passed to the code completion model.
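How the extra arguments are divided between the two models can be sketched as follows. `split_model_args` is a hypothetical helper written for illustration, not the code in `openai_api.py`. It assumes the first two arguments are the model paths and that `---` separates the chatting model's options from the code completion model's. The behaviour when `---` is absent is not documented here, so the sketch simply assumes every extra option goes to the chatting model.

```python
from __future__ import annotations


def split_model_args(argv: list[str]) -> tuple[str, str, list[str], list[str]]:
    """Split openai_api.py-style arguments (hypothetical helper).

    argv holds the arguments after the script name.
    Returns (chat_model, fim_model, chat_args, fim_args).
    """
    if len(argv) < 2:
        raise ValueError("expected two model paths: chatting model and code completion model")
    chat_model, fim_model, rest = argv[0], argv[1], argv[2:]
    if "---" in rest:
        sep = rest.index("---")
        # Options before '---' belong to the chatting model, the rest to the FIM model.
        chat_args, fim_args = rest[:sep], rest[sep + 1:]
    else:
        # Assumption: without '---', all extra options go to the chatting model.
        chat_args, fim_args = rest, []
    return chat_model, fim_model, chat_args, fim_args
```

Applied to the second command above, it yields `["--temp", "0", "--top_k", "2"]` for the chatting model and `["--temp", "0.8"]` for the code completion model.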

openai_api.py uses model and API path to select chatting or completion models: when Model name to something

either starting with fim or ending with fim, or API path is ending with /generate, code completion model is selected;

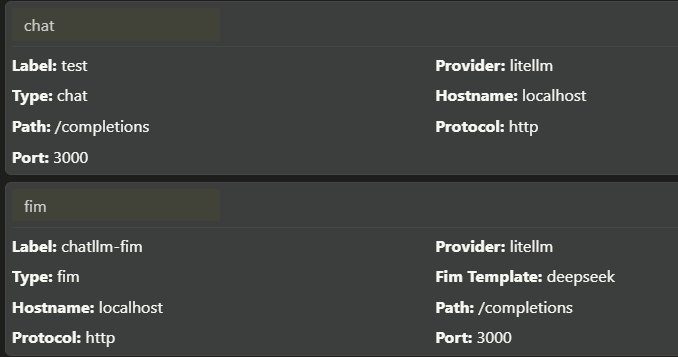

otherwise, chatting model is selected. Here is a reference configuration in twinny:

Note that `openai_api.py` has been tested to be compatible with the `litellm` provider.
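The model selection rule of `openai_api.py` can be written as a small predicate. This is a sketch of the rule as stated in the text, not the server's source; `select_model` is a hypothetical name, and matching is assumed to be case-sensitive.

```python
def select_model(model_name: str, api_path: str) -> str:
    """Return "completion" or "chat" following the rule described for openai_api.py.

    Assumption: string matching is case-sensitive.
    """
    name = model_name or ""  # tolerate a missing model name
    # A model name starting or ending with "fim" selects code completion.
    if name.startswith("fim") or name.endswith("fim"):
        return "completion"
    # So does any API path ending with "/generate".
    if api_path.endswith("/generate"):
        return "completion"
    return "chat"
```

For instance, a request whose model name is `deepseek-fim` is routed to the code completion model, while a request to `/v1/chat/completions` with any other model name goes to the chatting model.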

Some models that can be used for code completion:

- DeepSeekCoder: Coder-Base-1.3B

- CodeGemma v1.1: Base-2B, Base-7B

- StarCoder2: Base-3B, Base-7B, Base-15B (not recommended)

Run `chatllm.ts` with exactly the same command line options using Bun:

`bun run chatllm.ts -i -m path/to/model`

WARNING: Bun looks buggy on Linux.

`libchatllm` can be used from any language that can call into dynamic libraries. Take C as an example:

- Linux:

  - Build `bindings/main.c`: `export LD_LIBRARY_PATH=.:$LD_LIBRARY_PATH` then `gcc main.c libchatllm.so` (the `LD_LIBRARY_PATH` setting lets the program find `libchatllm.so` in the current directory at run time).

  - Test: run `./a.out` with exactly the same command line options.

- Windows:

  - Build `bindings\main.c`: `cl main.c libchatllm.lib`

  - Test: run `main.exe` with exactly the same command line options.
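Python can do the same through its standard `ctypes` module. The sketch below only locates and loads the library built above; it does not call any `libchatllm` functions, since their signatures are not described here (`chatllm.py` is the place to see how the bindings actually use them). `libchatllm_filename` and `load_libchatllm` are hypothetical helpers, and only the Windows and Linux file names mentioned in this document are covered.

```python
import ctypes
import os
import sys


def libchatllm_filename(platform: str = sys.platform) -> str:
    """File name of the libchatllm shared library on the given platform."""
    if platform.startswith("win"):
        return "libchatllm.dll"  # ggml.dll must be copied alongside it
    if platform.startswith("linux"):
        return "libchatllm.so"
    raise NotImplementedError(f"platform not covered by this sketch: {platform}")


def load_libchatllm(bindings_dir: str = ".") -> ctypes.CDLL:
    """Load libchatllm from bindings_dir via ctypes.

    Passing an absolute path means libchatllm itself is found without
    LD_LIBRARY_PATH; libraries it depends on are still resolved the usual way.
    """
    path = os.path.join(os.path.abspath(bindings_dir), libchatllm_filename())
    return ctypes.CDLL(path)
```

Running `load_libchatllm("bindings")` from the repository root should return a `ctypes.CDLL` handle once the library has been built and placed there.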