Punica is a serving framework dedicated to serving multiple fine-tuned LoRA models. Atom uses Punica's backbone framework, without the LoRA components, to demonstrate Atom's efficiency in a serving context. This codebase is modified from a previous version of Punica.

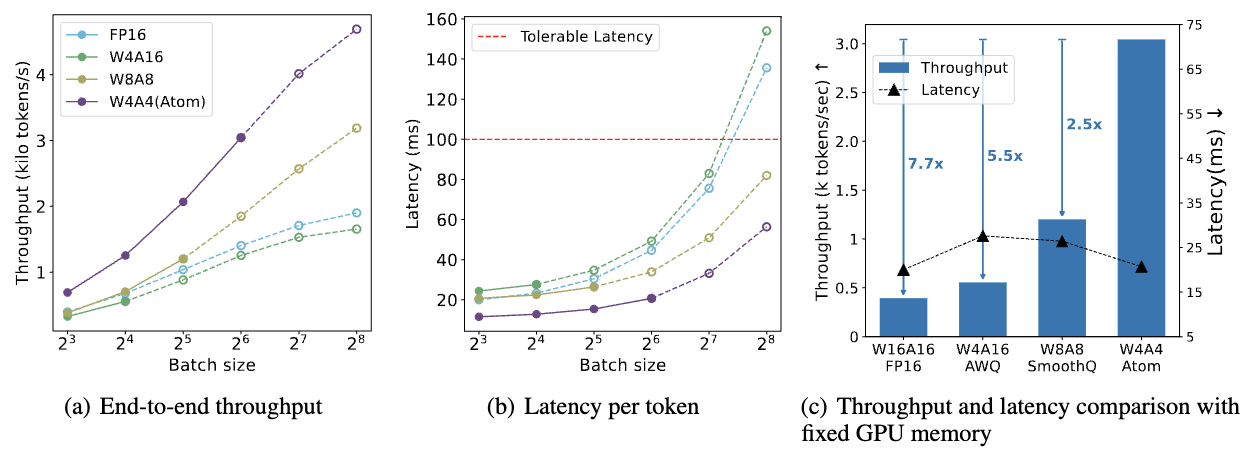

To evaluate performance in a realistic production scenario, we collect real-world LLM chat logs from ShareGPT and sample prefill prompts and decoding lengths from them to synthesize user requests. We adopt continuous batching following Orca and set the corresponding batch size manually.
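The effect of continuous batching can be illustrated with a toy scheduler. This is a minimal sketch, not Punica's scheduler: it assumes one forward pass per iteration, in which a newly admitted request's prefill also produces its first token and every running request decodes one token. The `Request` class, the `continuous_batching` function, and the example lengths are hypothetical stand-ins for requests synthesized from ShareGPT.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Request:
    rid: int
    prompt_len: int   # prefill tokens; unused by this toy scheduler, but a real server's prefill cost depends on it
    decode_len: int   # number of tokens to generate
    generated: int = 0


def continuous_batching(requests, max_batch_size):
    """Simulate iteration-level (Orca-style) scheduling with a fixed batch-size cap.

    A finished request leaves the batch immediately and a waiting request takes
    its slot at the next iteration. Returns the total number of iterations and
    a dict mapping request id -> iteration in which it finished.
    """
    assert max_batch_size >= 1
    waiting = deque(requests)
    running = []
    finished_at = {}
    step = 0
    while waiting or running:
        # Refill free slots right away instead of waiting for the whole batch to drain.
        while waiting and len(running) < max_batch_size:
            running.append(waiting.popleft())
        step += 1
        for req in running:
            req.generated += 1  # prefill emits the first token; later iterations decode one each
        for req in running:
            if req.generated >= req.decode_len:
                finished_at[req.rid] = step
        running = [r for r in running if r.generated < r.decode_len]
    return step, finished_at
```

With `max_batch_size=2` and requests of decode lengths 3, 1 and 2 (ids 0, 1, 2), request 2 takes the slot freed by request 1 after the first iteration, so all three finish in 3 iterations; static batching, which waits for the slowest member of each batch, would need 3 + 2 = 5.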

The backbone inference workflow of Punica is based on PyTorch and Hugging Face Transformers. We only substitute the corresponding kernels for each tested method. For W8A8 evaluation, we apply SmoothQuant and also replace the attention kernel with the FP8 FlashInfer implementation. For W4A16 evaluation, we use kernels from AWQ and replace all linear layers with AWQ-quantized versions.
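To show roughly what the two substituted schemes compute numerically, here is a plain-Python fake-quantization sketch (quantize, then dequantize back to floats; no PyTorch and no real kernels). `smooth` follows the SmoothQuant migration formula s_j = max|X_j|^α / max|W_j|^(1-α) with an assumed α = 0.5. `fake_quant_int8` is a simple per-tensor symmetric INT8 quantizer, applicable to both the smoothed activations and weights in W8A8. `fake_quant_int4_groups` is an asymmetric min/max group quantizer standing in for the group-wise 4-bit weight format that W4A16 kernels consume; AWQ's activation-aware scale search is omitted. All helper names, the group size of 4, and the tiny matrices are hypothetical.

```python
def matmul(a, b):
    """Plain-Python product of a (m x k) and b (k x n)."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def smooth(x, w, alpha=0.5):
    """SmoothQuant-style migration for Y = X W, with X (tokens x k) and W (k x n).

    Dividing activation column j by s_j and multiplying weight row j by s_j
    leaves X W unchanged but shrinks activation outliers before quantization.
    """
    s = []
    for j in range(len(w)):
        a_max = max(max(abs(row[j]) for row in x), 1e-5)  # guard against all-zero channels
        w_max = max(max(abs(v) for v in w[j]), 1e-5)
        s.append(a_max ** alpha / w_max ** (1 - alpha))
    x_s = [[v / s[j] for j, v in enumerate(row)] for row in x]
    w_s = [[v * s[j] for v in w[j]] for j in range(len(w))]
    return x_s, w_s


def fake_quant_int8(m):
    """Symmetric per-tensor INT8: map to integers in [-127, 127], then dequantize."""
    scale = max(max(abs(v) for row in m for v in row), 1e-5) / 127
    return [[max(-127, min(127, round(v / scale))) * scale for v in row] for row in m]


def fake_quant_int4_groups(row, group_size=4):
    """Asymmetric 4-bit quantization of one weight row: one scale and zero point per group."""
    out = []
    for g in range(0, len(row), group_size):
        group = row[g:g + group_size]
        lo, hi = min(group), max(group)
        scale = max(hi - lo, 1e-5) / 15  # 4 bits -> integer codes 0..15
        zero = round(-lo / scale)
        out += [(max(0, min(15, round(v / scale) + zero)) - zero) * scale for v in group]
    return out
```

Working with dequantized floats keeps the sketch readable; a real W8A8 kernel instead multiplies the INT8 values directly and rescales the integer accumulator, and a W4A16 kernel dequantizes the 4-bit weights on the fly while keeping activations in 16-bit floating point.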

Note that the current codebase is intended for efficiency evaluation only. It uses random weights and hacks, so the model produces no meaningful output.

Check README.md in each folder for detailed instructions.