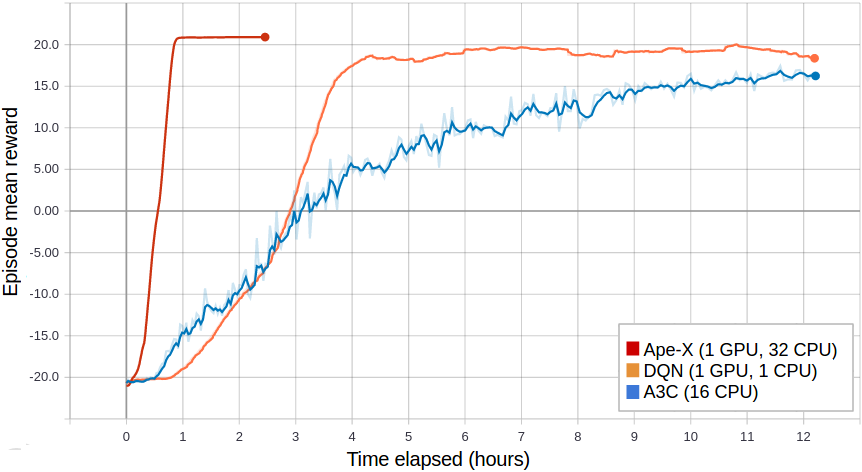

[paper] [implementation] The Ape-X variations of DQN, DDPG, and QMIX (APEX_DQN, APEX_DDPG, APEX_QMIX) use a single GPU learner and many CPU workers for experience collection. Experience collection can scale to hundreds of CPU workers because experience is prioritized in a distributed fashion, on the workers, before it is stored in the replay buffers.
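To illustrate the idea of prioritizing experience before it reaches the replay buffer, here is a minimal pure-Python sketch of proportional prioritization. It is not RLlib's implementation: the names `initial_priorities` and `PrioritizedBuffer`, the exponent `alpha=0.6`, and the use of absolute TD error as the priority are all assumptions made for illustration.

```python
import random


def initial_priorities(td_errors, eps=1e-6):
    """Worker-side step (assumed): turn TD errors into strictly positive priorities."""
    return [abs(e) + eps for e in td_errors]


class PrioritizedBuffer:
    """Toy replay buffer that samples transitions proportionally to priority**alpha."""

    def __init__(self, alpha=0.6, seed=None):
        self.alpha = alpha
        self.items = []
        self.priorities = []
        self.rng = random.Random(seed)

    def add_batch(self, transitions, priorities):
        # Priorities arrive already computed, so the buffer does no extra work on insert.
        self.items.extend(transitions)
        self.priorities.extend(priorities)

    def sample(self, k):
        # Sample with replacement, weighting each transition by priority**alpha.
        weights = [p ** self.alpha for p in self.priorities]
        return self.rng.choices(self.items, weights=weights, k=k)
```

In this sketch, a worker would call `initial_priorities` on its own batch and ship the result alongside the transitions, so the cost of computing priorities grows with the number of workers rather than landing on the learner.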

Tuned examples: PongNoFrameskip-v4, Pendulum-v0, MountainCarContinuous-v0, {BeamRider,Breakout,Qbert,SpaceInvaders}NoFrameskip-v4.

Atari results @10M steps: more details

| Atari env | RLlib Ape-X 8-workers | Mnih et al. Async DQN 16-workers |
|---|---|---|
| BeamRider | 6134 | ~6000 |
| Breakout | 123 | ~50 |
| Qbert | 15302 | ~1200 |
| SpaceInvaders | 686 | ~600 |

Scalability:

| Atari env | RLlib Ape-X 8-workers @1 hour | Mnih et al. Async DQN 16-workers @1 hour |
|---|---|---|
| BeamRider | 4873 | ~1000 |
| Breakout | 77 | ~10 |
| Qbert | 4083 | ~500 |
| SpaceInvaders | 646 | ~300 |

Ape-X specific configs (see also common configs):

.. literalinclude:: ../../rllib/agents/dqn/apex.py
   :language: python
   :start-after: __sphinx_doc_begin__
   :end-before: __sphinx_doc_end__

[paper] [implementation] In IMPALA, a central learner runs SGD in a tight loop while asynchronously pulling sample batches from many actor processes. RLlib's IMPALA implementation uses DeepMind's reference V-trace code. Note that we do not provide a deep residual network out of the box, but one can be plugged in as a custom model. Multiple learner GPUs and experience replay are also supported.
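As a rough illustration of what V-trace computes, the sketch below derives the corrected value targets for a single trajectory in pure Python. It is not DeepMind's reference code or RLlib's implementation. It assumes no episode boundary falls inside the trajectory, and the function name `vtrace_targets` and the defaults `rho_bar=1.0` and `c_bar=1.0` are illustrative choices.

```python
import math


def vtrace_targets(log_rhos, rewards, values, bootstrap_value,
                   gamma=0.99, rho_bar=1.0, c_bar=1.0):
    """Return V-trace targets v_s for one trajectory.

    log_rhos[t] is log(pi(a_t|x_t) / mu(a_t|x_t)), the log importance ratio
    between the learner policy pi and the actor (behaviour) policy mu.
    """
    T = len(rewards)
    # Truncated importance weights.
    rhos = [min(rho_bar, math.exp(lr)) for lr in log_rhos]
    cs = [min(c_bar, math.exp(lr)) for lr in log_rhos]
    next_values = list(values[1:]) + [bootstrap_value]
    # Importance-weighted temporal-difference errors.
    deltas = [rhos[t] * (rewards[t] + gamma * next_values[t] - values[t])
              for t in range(T)]
    # Backward recursion: v_s - V(x_s) = delta_s + gamma * c_s * (v_{s+1} - V(x_{s+1})).
    acc = 0.0
    targets = [0.0] * T
    for t in reversed(range(T)):
        acc = deltas[t] + gamma * cs[t] * acc
        targets[t] = values[t] + acc
    return targets
```

When the samples are on-policy (all log ratios zero), the targets reduce to ordinary n-step returns; when the ratios are very small, the targets stay close to the current value estimates.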

Tuned examples: PongNoFrameskip-v4, vectorized configuration, multi-gpu configuration, {BeamRider,Breakout,Qbert,SpaceInvaders}NoFrameskip-v4

Atari results @10M steps: more details

| Atari env | RLlib IMPALA 32-workers | Mnih et al. A3C 16-workers |
|---|---|---|
| BeamRider | 2071 | ~3000 |
| Breakout | 385 | ~150 |
| Qbert | 4068 | ~1000 |
| SpaceInvaders | 719 | ~600 |

Scalability:

| Atari env | RLlib IMPALA 32-workers @1 hour | Mnih et al. A3C 16-workers @1 hour |
|---|---|---|
| BeamRider | 3181 | ~1000 |
| Breakout | 538 | ~10 |
| Qbert | 10850 | ~500 |
| SpaceInvaders | 843 | ~300 |

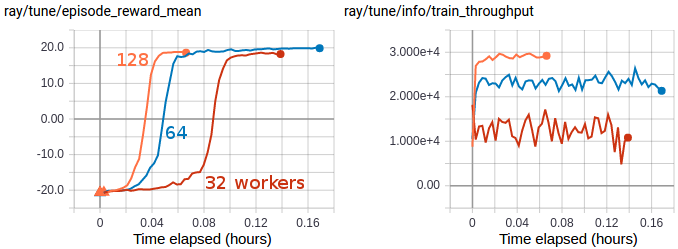

Multi-GPU IMPALA scales up to solve PongNoFrameskip-v4 in ~3 minutes using a pair of V100 GPUs and 128 CPU workers. The maximum training throughput reached is ~30k transitions per second (~120k environment frames per second).

IMPALA-specific configs (see also common configs):

.. literalinclude:: ../../rllib/agents/impala/impala.py
   :language: python
   :start-after: __sphinx_doc_begin__
   :end-before: __sphinx_doc_end__

[paper] [implementation] We include an asynchronous variant of Proximal Policy Optimization (PPO) based on the IMPALA architecture. APPO is similar to IMPALA but uses a surrogate policy loss with clipping. Compared to synchronous PPO, APPO is more efficient in wall-clock time because it samples asynchronously. The clipped loss also allows multiple SGD passes over the same data, and therefore offers the potential for better sample efficiency than IMPALA. V-trace can also be enabled to correct for off-policy samples.
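The surrogate policy loss with clipping can be sketched in a few lines of pure Python. This is an illustration of the standard PPO-style clipped objective, not RLlib's loss code; the function name `clipped_surrogate_loss` and the default `clip_param=0.2` are assumptions.

```python
import math


def clipped_surrogate_loss(logp_new, logp_old, advantages, clip_param=0.2):
    """Negative clipped surrogate objective, averaged over the batch."""
    total = 0.0
    for ln, lo, adv in zip(logp_new, logp_old, advantages):
        # Probability ratio between the current policy and the sampling policy.
        ratio = math.exp(ln - lo)
        clipped = max(1.0 - clip_param, min(ratio, 1.0 + clip_param))
        # Take the pessimistic (smaller) of the unclipped and clipped terms.
        total += min(ratio * adv, clipped * adv)
    return -total / len(advantages)
```

Because the objective stops rewarding ratio changes beyond the clip range, repeated SGD passes over the same samples have a bounded incentive to move the policy away from the one that collected the data.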

APPO is not always more efficient; it is often better to simply use PPO or IMPALA.

Tuned examples: PongNoFrameskip-v4

APPO-specific configs (see also common configs):

.. literalinclude:: ../../rllib/agents/ppo/appo.py
   :language: python
   :start-after: __sphinx_doc_begin__
   :end-before: __sphinx_doc_end__

[paper] [implementation] RLlib implements A2C and A3C using SyncSamplesOptimizer and AsyncGradientsOptimizer, respectively, for policy optimization. These algorithms scale up to 16-32 worker processes, depending on the environment. Both TensorFlow (LSTM) and PyTorch versions are available.

Tuned examples: PongDeterministic-v4, PyTorch version, {BeamRider,Breakout,Qbert,SpaceInvaders}NoFrameskip-v4

Tip: Consider using IMPALA for faster training with similar timestep efficiency.

Atari results @10M steps: more details

| Atari env | RLlib A2C 5-workers | Mnih et al. A3C 16-workers |
|---|---|---|
| BeamRider | 1401 | ~3000 |
| Breakout | 374 | ~150 |
| Qbert | 3620 | ~1000 |
| SpaceInvaders | 692 | ~600 |

A3C-specific configs (see also common configs):

.. literalinclude:: ../../rllib/agents/a3c/a3c.py
   :language: python
   :start-after: __sphinx_doc_begin__
   :end-before: __sphinx_doc_end__

[paper] [implementation] DDPG is implemented similarly to DQN (below). The algorithm can be scaled by increasing the number of workers, switching to AsyncGradientsOptimizer, or using Ape-X. The improvements from TD3 are available though not enabled by default.
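For readers unfamiliar with the TD3 improvements, the sketch below shows how a TD3-style critic target differs from plain DDPG: it takes the minimum of two target critics and adds clipped noise to the target action. This is a generic illustration for scalar actions, not RLlib's code; `td3_target`, the callables `target_policy`, `target_q1`, and `target_q2`, and all default values are hypothetical.

```python
import random


def td3_target(reward, done, next_obs, target_policy, target_q1, target_q2,
               gamma=0.99, noise_std=0.2, noise_clip=0.5,
               act_low=-1.0, act_high=1.0, rng=random):
    """One-step TD3-style target for a single transition with a scalar action."""
    # Target policy smoothing: perturb the target action with clipped Gaussian noise.
    noise = max(-noise_clip, min(noise_clip, rng.gauss(0.0, noise_std)))
    next_action = max(act_low, min(act_high, target_policy(next_obs) + noise))
    # Clipped double-Q: use the smaller of the two target critic estimates.
    q_next = min(target_q1(next_obs, next_action),
                 target_q2(next_obs, next_action))
    # No bootstrapping past a terminal state.
    return reward + gamma * (0.0 if done else 1.0) * q_next
```

The third TD3 ingredient, updating the policy less frequently than the critics, is a scheduling choice in the training loop and is not shown here.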

Tuned examples: Pendulum-v0, MountainCarContinuous-v0, HalfCheetah-v2, TD3 Pendulum-v0, TD3 InvertedPendulum-v2, TD3 Mujoco suite (Ant-v2, HalfCheetah-v2, Hopper-v2, Walker2d-v2).

DDPG-specific configs (see also common configs):

.. literalinclude:: ../../rllib/agents/ddpg/ddpg.py
   :language: python
   :start-after: __sphinx_doc_begin__
   :end-before: __sphinx_doc_end__

[paper] [implementation] RLlib DQN is implemented using the SyncReplayOptimizer. The algorithm can be scaled by increasing the number of workers, using the AsyncGradientsOptimizer for async DQN, or using Ape-X. Memory usage is reduced by compressing samples in the replay buffer with LZ4. All of the DQN improvements evaluated in Rainbow are available, though not all are enabled by default. See also how to use parametric-actions in DQN.
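The memory saving from compressing replay samples can be illustrated with the standard library alone. The sketch below uses `zlib` as a stand-in for LZ4, so it shows the idea rather than RLlib's actual code path; the helper names `pack` and `unpack` and the toy sample layout are assumptions.

```python
import base64
import pickle
import zlib


def pack(sample):
    """Serialize and compress a sample into an ASCII string (zlib stands in for LZ4)."""
    return base64.b64encode(zlib.compress(pickle.dumps(sample))).decode("ascii")


def unpack(packed):
    """Invert pack(): decode, decompress, and deserialize."""
    return pickle.loads(zlib.decompress(base64.b64decode(packed)))


# A highly redundant 84x84 "frame", as is typical for Atari-style observations.
frame = [[0] * 84 for _ in range(84)]
sample = {"obs": frame, "action": 2, "reward": 1.0}
packed = pack(sample)
restored = unpack(packed)
```

Samples are compressed once when they are stored and decompressed only when drawn for a training batch, trading a small amount of CPU time for a smaller buffer footprint.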

Tuned examples: PongDeterministic-v4, Rainbow configuration, {BeamRider,Breakout,Qbert,SpaceInvaders}NoFrameskip-v4, with Dueling and Double-Q, with Distributional DQN.

Tip: Consider using Ape-X for faster training with similar timestep efficiency.

Atari results @10M steps: more details

| Atari env | RLlib DQN | RLlib Dueling DDQN | RLlib Dist. DQN | Hessel et al. DQN |
|---|---|---|---|---|
| BeamRider | 2869 | 1910 | 4447 | ~2000 |
| Breakout | 287 | 312 | 410 | ~150 |
| Qbert | 3921 | 7968 | 15780 | ~4000 |
| SpaceInvaders | 650 | 1001 | 1025 | ~500 |

DQN-specific configs (see also common configs):

.. literalinclude:: ../../rllib/agents/dqn/dqn.py
   :language: python
   :start-after: __sphinx_doc_begin__
   :end-before: __sphinx_doc_end__

[paper] [implementation] We include a vanilla policy gradients implementation as an example algorithm in both TensorFlow and PyTorch. This is usually outperformed by PPO.
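A vanilla policy gradient loss can be written in a few lines. The sketch below is a generic REINFORCE-style illustration rather than RLlib's implementation; it assumes discounted returns are used directly as the weighting signal, and the function names are hypothetical.

```python
def discounted_returns(rewards, gamma=0.99):
    """Discounted return from each timestep to the end of the episode."""
    returns, running = [], 0.0
    for r in reversed(rewards):
        running = r + gamma * running
        returns.append(running)
    returns.reverse()
    return returns


def pg_loss(log_probs, returns):
    """Negative mean of log pi(a_t | s_t) weighted by the return from step t."""
    return -sum(lp * g for lp, g in zip(log_probs, returns)) / len(returns)
```

Minimizing this loss raises the log-probability of actions that were followed by high returns. It has no clipping or trust region, which is one reason methods such as PPO tend to be more stable.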

Tuned examples: CartPole-v0

PG-specific configs (see also common configs):

.. literalinclude:: ../../rllib/agents/pg/pg.py
   :language: python
   :start-after: __sphinx_doc_begin__
   :end-before: __sphinx_doc_end__

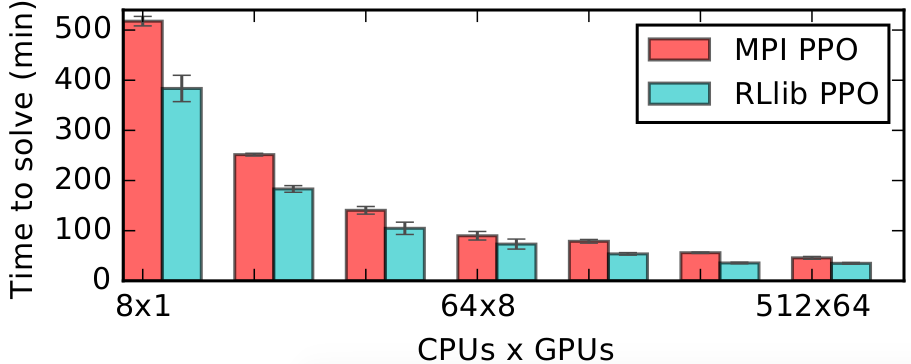

[paper] [implementation] PPO's clipped objective supports multiple SGD passes over the same batch of experiences. RLlib's multi-GPU optimizer pins that data in GPU memory to avoid unnecessary transfers from host memory, substantially improving performance over a naive implementation. RLlib's PPO scales out using multiple workers for experience collection, and also with multiple GPUs for SGD.
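The multiple SGD passes over one batch can be pictured as a nested loop over epochs and shuffled minibatches. The generator below is a minimal sketch of that schedule only (no gradients, no GPU pinning); the function name and parameter names are illustrative and not taken from RLlib's optimizer.

```python
import random


def sgd_minibatches(batch_size, minibatch_size, num_sgd_iter, seed=None):
    """Yield (epoch, indices) pairs: every epoch revisits the whole batch once."""
    rng = random.Random(seed)
    indices = list(range(batch_size))
    for epoch in range(num_sgd_iter):
        # Reshuffle so each pass sees the same samples in a different grouping.
        rng.shuffle(indices)
        for start in range(0, batch_size, minibatch_size):
            yield epoch, indices[start:start + minibatch_size]
```

Since every epoch reads the same samples, keeping the batch resident on the GPU means the data is transferred once rather than once per pass, which is the saving the paragraph above describes.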

Tuned examples: Humanoid-v1, Hopper-v1, Pendulum-v0, PongDeterministic-v4, Walker2d-v1, HalfCheetah-v2, {BeamRider,Breakout,Qbert,SpaceInvaders}NoFrameskip-v4

Atari results: more details

| Atari env | RLlib PPO @10M | RLlib PPO @25M | Baselines PPO @10M |
|---|---|---|---|
| BeamRider | 2807 | 4480 | ~1800 |
| Breakout | 104 | 201 | ~250 |
| Qbert | 11085 | 14247 | ~14000 |
| SpaceInvaders | 671 | 944 | ~800 |

Scalability: more details

| MuJoCo env | RLlib PPO 16-workers @ 1h | Fan et al. PPO 16-workers @ 1h |
|---|---|---|
| HalfCheetah | 9664 | ~7700 |

RLlib's multi-GPU PPO scales to multiple GPUs and hundreds of CPUs when solving the Humanoid-v1 task. Here we compare against a reference MPI-based implementation.

PPO-specific configs (see also common configs):

.. literalinclude:: ../../rllib/agents/ppo/ppo.py
   :language: python
   :start-after: __sphinx_doc_begin__
   :end-before: __sphinx_doc_end__

RLlib's Soft Actor-Critic (SAC) implementation is ported from the official SAC repo to better integrate with RLlib APIs. SAC has two fields for configuring custom models, policy_model and Q_model, and currently supports only continuous action distributions. The implementation is currently experimental.

Tuned examples: Pendulum-v0

SAC-specific configs (see also common configs):

.. literalinclude:: ../../rllib/agents/sac/sac.py
   :language: python
   :start-after: __sphinx_doc_begin__
   :end-before: __sphinx_doc_end__

[paper] [implementation] ARS is a random search method for training linear policies for continuous control problems. Code here is adapted from https://github.com/modestyachts/ARS to integrate with RLlib APIs.
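To make the random search idea concrete, here is a pure-Python sketch of one ARS-style update on a flat parameter vector: perturb the parameters in random directions, evaluate both signs, keep the best directions, and step along the reward differences. It is a simplified illustration, not the adapted code; `ars_step`, `run_ars`, the toy objective `quad_reward`, and all default hyperparameters are assumptions, and observation normalization is omitted.

```python
import random
import statistics


def ars_step(theta, reward_fn, rng, num_dirs=8, top_b=4, step_size=0.02, noise=0.03):
    """One random-search update of the parameter vector theta."""
    rollouts = []
    for _ in range(num_dirs):
        delta = [rng.gauss(0.0, 1.0) for _ in theta]
        r_pos = reward_fn([t + noise * d for t, d in zip(theta, delta)])
        r_neg = reward_fn([t - noise * d for t, d in zip(theta, delta)])
        rollouts.append((r_pos, r_neg, delta))
    # Keep only the directions whose better perturbation scored highest.
    rollouts.sort(key=lambda x: max(x[0], x[1]), reverse=True)
    best = rollouts[:top_b]
    # Normalize the step by the spread of the rewards that were kept.
    sigma = statistics.pstdev([r for rp, rn, _ in best for r in (rp, rn)]) or 1.0
    update = [0.0] * len(theta)
    for r_pos, r_neg, delta in best:
        for i, d in enumerate(delta):
            update[i] += (r_pos - r_neg) * d
    return [t + step_size / (top_b * sigma) * u for t, u in zip(theta, update)]


def quad_reward(params):
    """Toy objective with its maximum at params == [1, 1, ...]."""
    return -sum((p - 1.0) ** 2 for p in params)


def run_ars(theta, reward_fn, steps, seed=None):
    rng = random.Random(seed)
    for _ in range(steps):
        theta = ars_step(theta, reward_fn, rng)
    return theta
```

In a control setting, `reward_fn` would run an episode with a linear policy built from the parameters and return the episode reward; the toy quadratic stands in for that rollout here.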

Tuned examples: CartPole-v0, Swimmer-v2

ARS-specific configs (see also common configs):

.. literalinclude:: ../../rllib/agents/ars/ars.py
   :language: python
   :start-after: __sphinx_doc_begin__
   :end-before: __sphinx_doc_end__

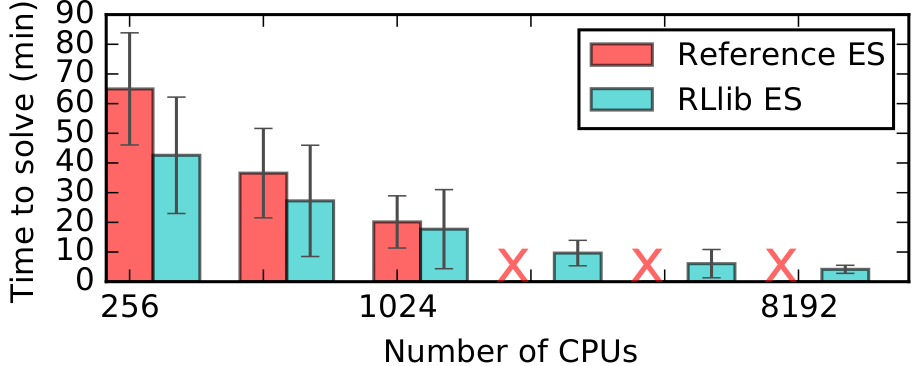

[paper] [implementation] Code here is adapted from https://github.com/openai/evolution-strategies-starter to execute in the distributed setting with Ray.

Tuned examples: Humanoid-v1

Scalability:

RLlib's ES implementation scales to more workers and runs faster than a reference Redis implementation when solving the Humanoid-v1 task.

ES-specific configs (see also common configs):

.. literalinclude:: ../../rllib/agents/es/es.py
   :language: python
   :start-after: __sphinx_doc_begin__
   :end-before: __sphinx_doc_end__

[paper] [implementation] Q-Mix is a specialized multi-agent algorithm. Code here is adapted from https://github.com/oxwhirl/pymarl_alpha to integrate with RLlib multi-agent APIs. To use Q-Mix, you must specify an agent grouping in the environment (see the two-step game example). Currently, all agents in the group must be homogeneous. The algorithm can be scaled by increasing the number of workers or using Ape-X.
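Conceptually, an agent grouping maps a group ID to the list of agent IDs it contains, so that the grouped agents appear to the algorithm as one agent with tuple-valued observations and actions. The helpers below sketch that bookkeeping in plain Python. They are hypothetical and not part of RLlib's multi-agent API; see the two-step game example for the actual mechanism.

```python
def group_items(per_agent, grouping):
    """Merge a per-agent dict into a per-group dict of tuples."""
    return {
        group_id: tuple(per_agent[agent_id] for agent_id in agent_ids)
        for group_id, agent_ids in grouping.items()
    }


def ungroup_actions(grouped_actions, grouping):
    """Split per-group action tuples back into a per-agent dict."""
    per_agent = {}
    for group_id, agent_ids in grouping.items():
        for agent_id, action in zip(agent_ids, grouped_actions[group_id]):
            per_agent[agent_id] = action
    return per_agent


# Hypothetical grouping: agents 0 and 1 act together as "group_1".
grouping = {"group_1": [0, 1]}
grouped_obs = group_items({0: "obs_0", 1: "obs_1"}, grouping)
actions = ungroup_actions({"group_1": (3, 7)}, grouping)
```

The homogeneity requirement fits this picture: each slot of the tuple is treated the same way, so all agents in a group need matching observation and action spaces.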

Q-Mix is implemented in PyTorch and is currently experimental.

Tuned examples: Two-step game

QMIX-specific configs (see also common configs):

.. literalinclude:: ../../rllib/agents/qmix/qmix.py
   :language: python
   :start-after: __sphinx_doc_begin__
   :end-before: __sphinx_doc_end__

[paper] [implementation] MARWIL is a hybrid imitation learning and policy gradient algorithm suitable for training on batched historical data. When the beta hyperparameter is set to zero, the MARWIL objective reduces to vanilla imitation learning. MARWIL requires the use of the offline datasets API.
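The role of beta can be seen in a small sketch of an advantage-weighted imitation loss. It assumes the weighting takes the exponential form exp(beta * advantage / c), with `adv_norm` standing in for the normalizer c; the function name and signature are illustrative, not RLlib's.

```python
import math


def marwil_loss(log_probs, advantages, beta=1.0, adv_norm=1.0):
    """Advantage-weighted negative log-likelihood of the logged actions."""
    # With beta == 0 every weight is exp(0) == 1, i.e. plain behaviour cloning.
    weights = [math.exp(beta * a / adv_norm) for a in advantages]
    return -sum(w * lp for w, lp in zip(weights, log_probs)) / len(log_probs)
```

With `beta=0.0` the loss is the ordinary imitation objective (the mean negative log-likelihood of the logged actions). With `beta > 0`, actions with higher estimated advantage receive exponentially larger weight.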

Tuned examples: CartPole-v0

MARWIL-specific configs (see also common configs):

.. literalinclude:: ../../rllib/agents/marwil/marwil.py
   :language: python
   :start-after: __sphinx_doc_begin__
   :end-before: __sphinx_doc_end__