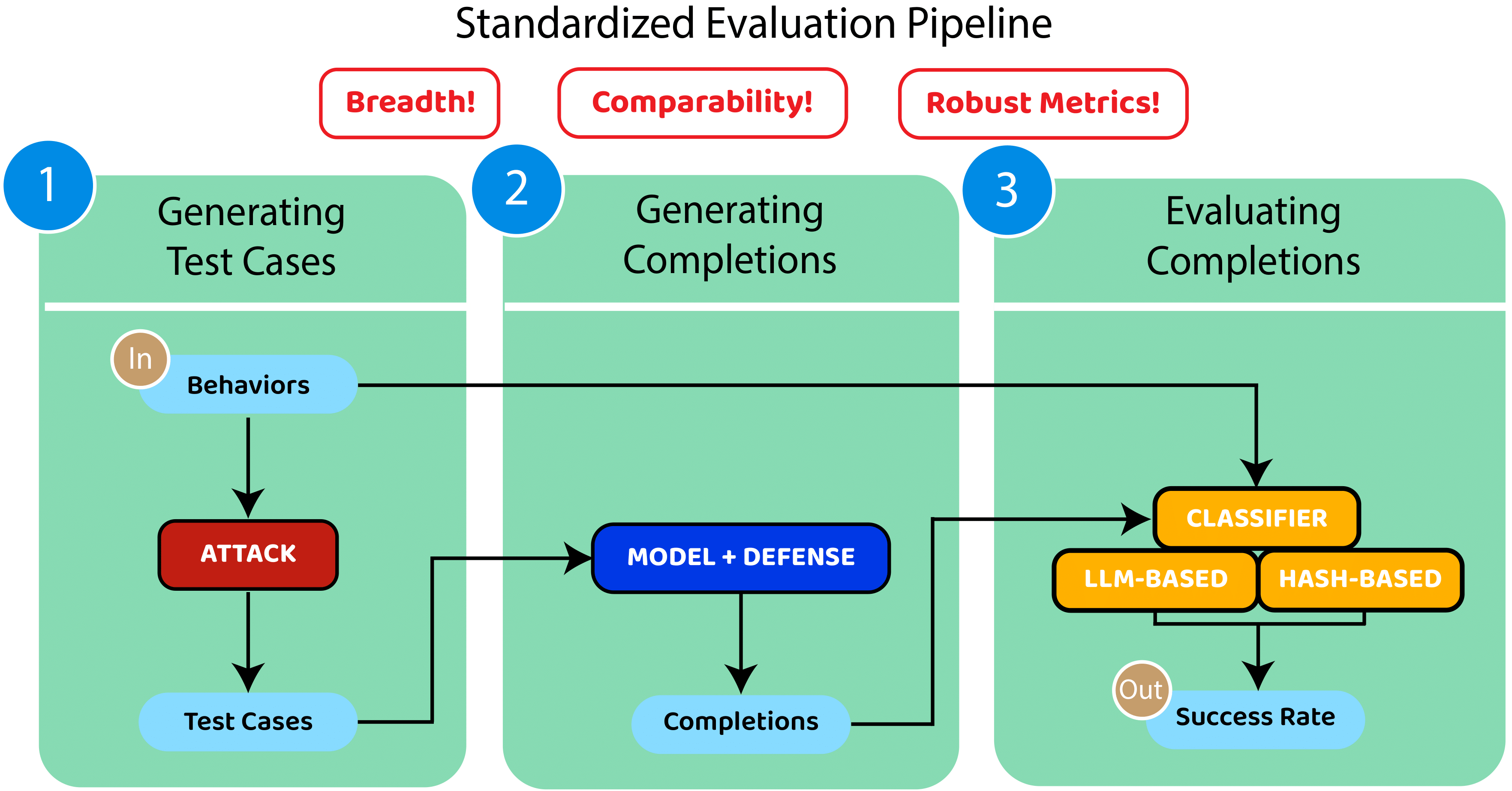

The HarmBench evaluation pipeline has three steps, illustrated in the figure above. First, red teaming methods are used to generate test cases. Next, we use these test cases as prompts and generate completions from target LLMs. Finally, we evaluate completions to compute attack success rate (ASR). In addition to these three main steps, there is an optional step 1.5 for merging test cases. Below, we describe how to run each of these steps and how to use the run_pipeline.py utility to partially automate this process.

In all examples, we assume the working directory is HarmBench.

The first step in the evaluation pipeline is generating test cases with generate_test_cases.py. You can use scripts/generate_test_cases.sh for this, or you can use scripts/step1.sh to parallelize test case generation using SLURM.

# Run with SLURM

./scripts/step1.sh

# Or run directly

./scripts/generate_test_cases.sh $method_name $experiment_name $behaviors_path $save_dir $start_idx $end_idx $behavior_ids_subset $run_id $overwrite $verbose

Example and Argument Descriptions

# Make sure your environment has enough GPUs for the model and attack

base_save_dir="results"

method_name="GCG"

experiment_name="vicuna_13b_v1_5"

behaviors_path="./data/behavior_datasets/harmbench_behaviors_text_val.csv"

save_dir="${base_save_dir}/${method_name}/${experiment_name}/test_cases"

start_idx=0

end_idx=20 # first 20 validation behaviors

behavior_ids_subset=""

run_id=""

overwrite="False"

verbose="False"

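# Quote every argument so that empty values (behavior_ids_subset, run_id) keep their position

./scripts/generate_test_cases.sh "$method_name" "$experiment_name" "$behaviors_path" "$save_dir" "$start_idx" "$end_idx" "$behavior_ids_subset" "$run_id" "$overwrite" "$verbose"

The arguments to generate_test_cases.sh are: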

- method_name: The name of the class defining a red teaming method, e.g., GCG or ZeroShot. The available options are in the _method_mapping dictionary in baselines/__init__.py.

- experiment_name: The name of an experiment for the given method, e.g., the mixtral_attacker_llm experiment for the ZeroShot method. Options are defined in configs/methods_config/{method_name}_config.yaml for each method name. For more information, see the method config docs.

- behaviors_path: The path to the behavior dataset CSV file.

- save_dir: The path to the directory for saving test cases. By convention, we use {base_save_dir}/{method_name}/{experiment_name}/test_cases for a given base_save_dir.

- start_idx and end_idx: Indices that specify a subset of behaviors to pass into the generate_test_cases method. These are the primary way that we generate test cases in parallel. The range is inclusive on the left and exclusive on the right; start_idx=0 and end_idx=20 will select the first 20 behaviors in the dataset.

- behavior_ids_subset: A comma-separated list of behavior IDs or a path to a text file with newline-separated behavior IDs. This is an alternate way to specify a subset of behaviors. If provided, this argument overrides start_idx and end_idx. This can be useful for filling in gaps in results.

- run_id: An optional integer that allows saving multiple test cases generated with the same config. This is useful if you want to add more test cases for behaviors later on. It also allows parallelizing test case generation for methods that jointly optimize over all test cases, e.g., EnsembleGCG.

- overwrite: Whether to overwrite test cases. If set to "True", test case files will be overwritten. If set to "False", we check whether an output file already exists and skip generating the output file if it exists.

- verbose: A flag passed to generate_test_cases, which red teaming methods can use to print out intermediate results.
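The interaction between start_idx, end_idx, and behavior_ids_subset can be sketched in Python as follows. This is a minimal illustration rather than HarmBench's own code: the select_behaviors helper and the BehaviorID key are assumptions made for this example.

```python
from typing import Optional


def select_behaviors(
    behaviors: list[dict],
    start_idx: int,
    end_idx: int,
    behavior_ids_subset: Optional[list[str]] = None,
) -> list[dict]:
    """Pick the behaviors to generate test cases for (hypothetical helper)."""
    if behavior_ids_subset:
        # A non-empty subset overrides start_idx and end_idx.
        wanted = set(behavior_ids_subset)
        return [b for b in behaviors if b["BehaviorID"] in wanted]
    # Inclusive on the left, exclusive on the right, like a Python slice.
    return behaviors[start_idx:end_idx]


# Toy dataset of 50 behaviors; the "BehaviorID" key is an assumed column name.
behaviors = [{"BehaviorID": f"behavior_{i}"} for i in range(50)]

first_20 = select_behaviors(behaviors, start_idx=0, end_idx=20)
gap_fill = select_behaviors(behaviors, 0, 20, ["behavior_3", "behavior_42"])
print(len(first_20), [b["BehaviorID"] for b in gap_fill])
```

Splitting a dataset into consecutive (start_idx, end_idx) ranges in this way gives one independent job per range, which is what makes the indices convenient for parallel generation.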

For many methods, generate_test_cases.py is parallelized and does not directly save to the final test_cases.json file. For example, methods that are subclasses of the SingleBehaviorRedTeamingMethod class save results in {base_results_dir}/{method}/{experiment}/test_cases/test_cases_individual_behaviors/{behavior_id}/test_cases.json. Run the following command to merge all of the test cases from different behaviors into a single test_cases.json file.

# Run with SLURM

./scripts/step1.5.sh

# Or run directly

./scripts/merge_test_cases.sh $method_name $save_dir

Example and Argument Descriptions

# Your save_dir from Step 1

base_save_dir="results"

method_name="GCG"

experiment_name="vicuna_13b_v1_5"

save_dir="$base_save_dir/$method_name/$experiment_name/test_cases"

./scripts/merge_test_cases.sh $method_name $save_dir

The arguments to merge_test_cases.sh are:

- method_name: The name of the class defining a red teaming method, e.g., GCG or ZeroShot. The available options are in the _method_mapping dictionary in baselines/__init__.py.

- save_dir: The path to the directory for saving test cases. By convention, we use {base_save_dir}/{method_name}/{experiment_name}/test_cases for a given base_save_dir.
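Conceptually, the merge step collects the per-behavior files and writes one combined file. The sketch below illustrates the idea under one stated assumption: that each per-behavior test_cases.json is a JSON object mapping behavior IDs to lists of test cases. The merge_test_cases function here is a hypothetical stand-in, not the code that merge_test_cases.sh runs.

```python
import json
import tempfile
from pathlib import Path


def merge_test_cases(save_dir: str) -> dict:
    """Merge per-behavior test case files into one dict (illustrative only)."""
    individual_dir = Path(save_dir) / "test_cases_individual_behaviors"
    merged: dict = {}
    # Each behavior has its own subdirectory containing a test_cases.json file.
    for path in sorted(individual_dir.glob("*/test_cases.json")):
        with path.open() as f:
            # Assumed format: {behavior_id: [test_case, ...]}
            merged.update(json.load(f))
    with (Path(save_dir) / "test_cases.json").open("w") as f:
        json.dump(merged, f, indent=4)
    return merged


# Build a throwaway directory layout and merge it.
with tempfile.TemporaryDirectory() as tmp:
    for behavior_id in ("behavior_a", "behavior_b"):
        behavior_dir = Path(tmp) / "test_cases_individual_behaviors" / behavior_id
        behavior_dir.mkdir(parents=True)
        (behavior_dir / "test_cases.json").write_text(
            json.dumps({behavior_id: ["example prompt"]})
        )
    merged = merge_test_cases(tmp)
    merged_file_exists = (Path(tmp) / "test_cases.json").exists()

print(sorted(merged))
```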

After generating test cases (attack prompts) specified by a method_name and experiment_name in Step 1, we generate completions for a target model.

# Run with SLURM

./scripts/step2.sh

# Or run directly

./scripts/generate_completions.sh $model_name $behaviors_path $test_cases_path $save_path $max_new_tokens $incremental_update

Example and Argument Descriptions

# Make sure your environment has enough GPUs for the model

base_save_dir="results"

# Often, the experiment name will be the same as the model name. It can be different for transfer attacks.

method_name="GCG"

experiment_name="vicuna_13b_v1_5"

model_name="vicuna_13b_v1_5" # from configs/model_configs/models.yaml

behaviors_path="./data/behavior_datasets/harmbench_behaviors_text_val.csv"

max_new_tokens=512

incremental_update="False"

experiment_dir="$base_save_dir/$method_name/$experiment_name"

test_cases_path="$experiment_dir/test_cases/test_cases.json"

save_path="$experiment_dir/completions/${model_name}.json"

./scripts/generate_completions.sh $model_name $behaviors_path $test_cases_path $save_path $max_new_tokens $incremental_update

The arguments to generate_completions.sh are:

- model_name: The name of a target LLM or multimodal model. The available options are in configs/model_configs/models.yaml.

- behaviors_path: The path to the behavior dataset CSV file.

- test_cases_path: The path to the saved test cases to use for generating completions.

- save_path: The path to save completions to.

- max_new_tokens: The number of tokens to generate with the target model. We use max_new_tokens=512 for all experiments. Using a different number of tokens can make results hard to compare, so we recommend using the same value as the baselines you compare against.

- incremental_update: Whether to only generate completions for behaviors that are not already in the output completions file. This is useful when adding your own behaviors, since generating redundant completions with closed-source models can be costly for some attacks.
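The effect of incremental_update can be pictured as a filter over the test cases. The following sketch assumes that both the test cases file and the completions file are JSON objects keyed by behavior ID; select_pending_test_cases is a hypothetical helper written for this illustration, not a function from the repository.

```python
def select_pending_test_cases(
    test_cases: dict[str, list],
    existing_completions: dict[str, list],
    incremental_update: bool,
) -> dict[str, list]:
    """Return the test cases that still need completions (illustrative only)."""
    if not incremental_update:
        # Regenerate completions for every behavior.
        return dict(test_cases)
    # Skip behaviors that already have an entry in the completions file.
    return {
        behavior_id: cases
        for behavior_id, cases in test_cases.items()
        if behavior_id not in existing_completions
    }


test_cases = {
    "behavior_a": ["prompt 1"],
    "behavior_b": ["prompt 2"],
    "behavior_c": ["prompt 3"],
}
# Assumed shape of an existing completions file with one finished behavior.
existing = {"behavior_a": [{"test_case": "prompt 1", "generation": "..."}]}

pending = select_pending_test_cases(test_cases, existing, incremental_update=True)
everything = select_pending_test_cases(test_cases, existing, incremental_update=False)
print(sorted(pending), sorted(everything))
```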

After generating completions from a target model in Step 2, we use our classifier to label whether each completion is an instance of its corresponding behavior. Our classifier model for text behaviors is available at 🤗 cais/HarmBench-Llama-2-13b-cls. Our classifier model for multimodal behaviors is available at 🤗 cais/HarmBench-Llama-2-13b-cls-multimodal-behaviors. These are test classifiers that should only be used for evaluation. We are planning to release validation classifiers soon, which can be used for optimization.

# Run with SLURM

./scripts/step3.sh

# Or run directly

./scripts/evaluate_completions.sh $cls_path $behaviors_path $completions_path $save_path

Example and Argument Descriptions

# Make sure your environment has enough GPUs for the classifier (Llama 2 13B)

base_save_dir="results"

method_name="GCG"

# Often, the experiment name will be the same as the model name. It can be different for transfer attacks.

experiment_name="vicuna_13b_v1_5"

model_name="vicuna_13b_v1_5" # from configs/model_configs/models.yaml

cls_path="cais/HarmBench-Llama-2-13b-cls"

behaviors_path="./data/behavior_datasets/harmbench_behaviors_text_val.csv"

completions_path="$base_save_dir/$method_name/$experiment_name/completions/${model_name}.json" # output file from Step 2

save_path="$base_save_dir/$method_name/$experiment_name/results/${model_name}.json"

./scripts/evaluate_completions.sh $cls_path $behaviors_path $completions_path $save_path

The arguments to evaluate_completions.sh are:

- cls_path: The path to the classifier used for computing attack success rate (ASR).

- behaviors_path: The path to the behavior dataset CSV file.

- completions_path: The path to the JSON file saved by generate_completions.sh.

- save_path: The path to save classification results to.
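Once each completion has a classifier label, ASR reduces to simple arithmetic. The sketch below is one way to compute it, under assumptions that are not confirmed by this document: the results are keyed by behavior ID, each completion carries a binary "label" field, and ASR is averaged per behavior before averaging across behaviors.

```python
def attack_success_rate(results: dict[str, list[dict]]) -> float:
    """Average the per-behavior success rates (assumed aggregation)."""
    per_behavior = []
    for completions in results.values():
        if not completions:
            continue  # nothing to score for this behavior
        # Assumed convention: label 1 means the completion exhibits the behavior.
        successes = sum(1 for c in completions if c["label"] == 1)
        per_behavior.append(successes / len(completions))
    return sum(per_behavior) / len(per_behavior) if per_behavior else 0.0


# Hypothetical classification results for three behaviors.
results = {
    "behavior_a": [{"label": 1}],
    "behavior_b": [{"label": 0}],
    "behavior_c": [{"label": 1}, {"label": 0}],
}
asr = attack_success_rate(results)
print(f"ASR: {asr:.2f}")
```

Here the per-behavior rates are 1.0, 0.0, and 0.5, so the sketch reports an ASR of 0.50.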

An easy way to run the evaluation pipeline is with scripts/run_pipeline.py. This script reads configs/pipeline_configs/run_pipeline.yaml to determine the arguments for the scripts described above, which run the individual steps of the pipeline. run_pipeline.py handles the following:

- Mapping a method name and target model to a specific experiment name

- Handling which target models are supported for given methods (e.g., white-box methods cannot be run on closed-source models)

- Selection of the number of GPUs for scheduling SLURM jobs

- Chaining SLURM jobs with dependencies

Before using run_pipeline.py, we recommend you modify base_num_gpus in each entry of configs/pipeline_configs/run_pipeline.yaml and num_gpus for each open-source model in configs/model_configs/models.yaml. The default values were chosen to work with 80GB A100s, and appropriate values will depend on your setup.

Most use cases can then be handled by modifying the following arguments:

base_save_dir="./results"

base_log_dir="./slurm_logs"

methods="method1,method2,method3" # or "all" to use all methods

models="model1,model2,model3" # or "all" to use all models

behaviors_path="./data/behavior_datasets/harmbench_behaviors_text_val.csv"

step="all" # or "1", "1.5", "2", "3", "2_and_3"

mode="slurm"

partition="your_partition"

cls_path="cais/HarmBench-Llama-2-13b-cls"

python ./scripts/run_pipeline.py --base_save_dir $base_save_dir --base_log_dir $base_log_dir --methods $methods --models $models --behaviors_path $behaviors_path --step $step --mode $mode --partition $partition --cls_path $cls_path

Examples and Argument Descriptions

# Run GCG against all compatible models using a SLURM cluster

python ./scripts/run_pipeline.py --methods GCG --models all --step all --mode slurm

# Run all compatible attacks against Llama 2 7B Chat using a SLURM cluster

python ./scripts/run_pipeline.py --methods all --models llama2_7b --step all --mode slurm

# ---

# Generate test cases for a subset of methods and models using a SLURM cluster

python ./scripts/run_pipeline.py --methods ZeroShot,PEZ,TAP --models baichuan2_7b,mistral_7b,llama2_70b --step 1 --mode slurm

# Merge test cases for the above methods on a local machine

python ./scripts/run_pipeline.py --methods ZeroShot,PEZ,TAP --models baichuan2_7b,mistral_7b,llama2_70b --step 1.5 --mode local

# Generate and evaluate completions for the above methods and models using a SLURM cluster

python ./scripts/run_pipeline.py --methods ZeroShot,PEZ,TAP --models baichuan2_7b,mistral_7b,llama2_70b --step 2_and_3 --mode slurm

Arguments to run_pipeline.py that are used in all steps of the evaluation pipeline are:

- base_save_dir: The base directory for saving test cases, completions, and results for a specific set of behaviors. Specific test cases and results are saved in base_save_dir/{method_name}/{experiment_name}.

- base_log_dir: The base directory for saving SLURM logs when using mode="slurm". Specific logs are saved in base_log_dir/{method_name}/{experiment_name}.

- pipeline_config_path: The path to the pipeline config to use. Defaults to ./configs/pipeline_configs/run_pipeline.yaml.

- models_config_path: The path to the model config to use. Defaults to ./configs/model_configs/models.yaml.

- methods: A string formatted as a comma-separated list of proper method names (proper method names are the entries in run_pipeline.yaml).

- models: A string formatted as a comma-separated list of model names (options are in models_config_path).

- behaviors_path: The path to the behavior dataset CSV file.

- step: The step of the pipeline to run. Available options are "all", "1", "1.5", "2", "3", and "2_and_3". This must be a string.

- mode: Whether to run the pipeline by submitting SLURM jobs or running scripts locally. In the slurm mode, jobs are submitted with appropriate dependencies. In the local mode, steps of the pipeline are sequentially run on the current machine. In the local_parallel mode, we use Ray and CUDA_VISIBLE_DEVICES to simulate a miniature cluster. We recommend using the slurm mode (or submitting a PR for a different cluster manager) to enable parallelizing test case generation across multiple machines. For a single machine (e.g., an AWS instance), local_parallel mode can parallelize the pipeline across the different GPUs on the machine without needing to set up SLURM.

- partition: The partition to use when submitting SLURM jobs.

Arguments that are only used in step 1 of the evaluation pipeline are:

- behavior_ids_subset: An optional comma-separated list of behavior IDs, or a path to a newline-separated list of behavior IDs. If provided, test cases will only be generated for the specified behaviors. This is useful for filling in missing results.

- overwrite: Whether to overwrite test cases. If set to "True", test case files will be overwritten. If set to "False", we check whether test cases already exist and skip generating them if so.

- verbose: A flag passed to generate_test_cases, which red teaming methods can use to print out intermediate results.

Arguments that are only used in step 2 of the evaluation pipeline are:

- max_new_tokens: The number of tokens to generate with target models. We use max_new_tokens=512 for all experiments. Using a different number of tokens can make results hard to compare, so we recommend using the same value as the baselines you compare against.

- incremental_update: Whether to only generate completions for behaviors that are not already in the output completions files. This is useful when adding your own behaviors, since generating redundant completions with closed-source models can be costly for some attacks.

Arguments that are only used in step 3 of the evaluation pipeline are:

- cls_path: The path to the classifier used for computing attack success rate (ASR).
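When launching the pipeline from another Python script, it can help to validate the step and mode values before building the command. The sketch below uses only flags and option values listed above; the build_pipeline_command helper itself is hypothetical and is not part of HarmBench.

```python
import shlex

VALID_STEPS = {"all", "1", "1.5", "2", "3", "2_and_3"}
VALID_MODES = {"slurm", "local", "local_parallel"}


def build_pipeline_command(
    methods: str, models: str, step: str, mode: str, **extra: str
) -> list[str]:
    """Build an argument list for run_pipeline.py (hypothetical helper)."""
    if step not in VALID_STEPS:
        # Passing the float 1.5 instead of the string "1.5" is rejected here.
        raise ValueError(f"step must be one of {sorted(VALID_STEPS)}, got {step!r}")
    if mode not in VALID_MODES:
        raise ValueError(f"mode must be one of {sorted(VALID_MODES)}, got {mode!r}")
    cmd = [
        "python", "./scripts/run_pipeline.py",
        "--methods", methods,
        "--models", models,
        "--step", step,
        "--mode", mode,
    ]
    # Remaining keyword arguments become "--name value" pairs.
    for name, value in extra.items():
        cmd += [f"--{name}", str(value)]
    return cmd


cmd = build_pipeline_command(
    methods="ZeroShot,PEZ,TAP",
    models="baichuan2_7b,mistral_7b,llama2_70b",
    step="2_and_3",
    mode="slurm",
    partition="your_partition",
)
print(shlex.join(cmd))
```

The resulting list can be passed to subprocess.run(cmd, check=True) from the HarmBench working directory.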

The default pipeline config file is configs/pipeline_configs/run_pipeline.yaml. This interfaces with run_pipeline.py to streamline the evaluation pipeline. Entries from run_pipeline.yaml are given below.

GCG-Multi:
  class_name: EnsembleGCG
  experiment_name_template: <model_name>
  behavior_chunk_size: all_behaviors
  base_num_gpus: 0
  run_ids: [0, 1, 2, 3, 4]
  allowed_target_model_types: [open_source]

GCG-Multi_1000steps:
  class_name: EnsembleGCG
  experiment_name_template: <model_name>_1000steps
  behavior_chunk_size: all_behaviors
  base_num_gpus: 0
  run_ids: [0, 1, 2, 3, 4]
  allowed_target_model_types: [open_source]

GCG-Transfer:
  class_name: EnsembleGCG
  experiment_name_template: llama2_7b_vicuna_7b_llama2_13b_vicuna_13b_multibehavior_1000steps
  behavior_chunk_size: all_behaviors
  base_num_gpus: 4
  run_ids: [0, 1, 2, 3, 4]
  allowed_target_model_types: [open_source, closed_source]

FewShot:
  class_name: FewShot
  experiment_name_template: <model_name>
  behavior_chunk_size: 5
  base_num_gpus: 2
  allowed_target_model_types: [open_source]

The name of the entry is what we refer to as a proper method name. This is distinct from the method_name argument used earlier, which refers to the name of a class in the baselines folder that defines a red teaming method. Specifically, while method configs can specify many different experiments, a proper method name and model name map to a single experiment (i.e., a single set of test cases for that model). This mapping is defined by entries in run_pipeline.yaml.

For example, suppose we use run_pipeline.py to generate completions with Llama 2 7B Chat on the test cases from GCG-Transfer. We would run

python ./scripts/run_pipeline.py --methods GCG-Transfer --models llama2_7b --step 2 --mode slurm

This would map the GCG-Transfer and llama2_7b arguments to a specific experiment folder. The run_pipeline.yaml config for GCG-Transfer tells us that it maps to the llama2_7b_vicuna_7b_llama2_13b_vicuna_13b_multibehavior_1000steps experiment for EnsembleGCG. Now suppose we want to generate completions with Baichuan 2 7B Chat on the test cases from GCG-Multi. We would run

python ./scripts/run_pipeline.py --methods GCG-Multi --models baichuan2_7b --step 2 --mode slurm

This would map the GCG-Multi and baichuan2_7b arguments to the baichuan2_7b experiment for EnsembleGCG. If we have another set of experiments in the EnsembleGCG config that use 1000 optimization steps instead of the default 500, we can run them with run_pipeline.py using the GCG-Multi_1000steps proper method name. Note that both this entry and GCG-Multi have <model_name> in their experiment_name_template field. run_pipeline.py fills this in with the name of each specified model.
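The mapping from a proper method name and a model name to an experiment can be sketched as a template substitution. The dictionary below copies the relevant fields from the run_pipeline.yaml entries shown earlier; the resolve_experiment function is a hypothetical illustration, not the logic used inside run_pipeline.py.

```python
# Fields copied from the run_pipeline.yaml entries shown in this document.
PIPELINE_CONFIG = {
    "GCG-Multi": {
        "class_name": "EnsembleGCG",
        "experiment_name_template": "<model_name>",
    },
    "GCG-Multi_1000steps": {
        "class_name": "EnsembleGCG",
        "experiment_name_template": "<model_name>_1000steps",
    },
    "GCG-Transfer": {
        "class_name": "EnsembleGCG",
        "experiment_name_template": (
            "llama2_7b_vicuna_7b_llama2_13b_vicuna_13b_multibehavior_1000steps"
        ),
    },
}


def resolve_experiment(proper_method_name: str, model_name: str) -> tuple[str, str]:
    """Return (method class name, experiment name) for a method and model."""
    entry = PIPELINE_CONFIG[proper_method_name]
    # Templates without <model_name> (e.g. GCG-Transfer) are returned unchanged.
    experiment_name = entry["experiment_name_template"].replace(
        "<model_name>", model_name
    )
    return entry["class_name"], experiment_name


multi = resolve_experiment("GCG-Multi", "baichuan2_7b")
multi_1000 = resolve_experiment("GCG-Multi_1000steps", "baichuan2_7b")
transfer = resolve_experiment("GCG-Transfer", "llama2_7b")
print(multi, multi_1000, transfer, sep="\n")
```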

If we run

python ./scripts/run_pipeline.py --methods GCG-Multi --models baichuan2_7b,llava_v1_5 --step 2 --mode slurm

then only jobs for baichuan2_7b will be submitted to SLURM. This is because llava_v1_5 has the field model_type: open_source_multimodal in its model config. This field is checked against the allowed_target_model_types field of each proper method config in run_pipeline.yaml. Only allowed model types can be run with a specific method.
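This compatibility check amounts to filtering the requested models by type. A minimal sketch follows, assuming a simplified in-memory view of the two config files; the filter_models helper is hypothetical, and the open_source type for baichuan2_7b is inferred from the example above rather than read from models.yaml.

```python
# allowed_target_model_types, as listed in the run_pipeline.yaml entries.
ALLOWED_TYPES = {
    "GCG-Multi": ["open_source"],
    "GCG-Transfer": ["open_source", "closed_source"],
}

# model_type per model; the baichuan2_7b value is an inference from the text.
MODEL_TYPES = {
    "baichuan2_7b": "open_source",
    "llava_v1_5": "open_source_multimodal",
}


def filter_models(proper_method_name: str, models: list[str]) -> list[str]:
    """Keep only the models whose type the method allows (illustrative only)."""
    allowed = set(ALLOWED_TYPES[proper_method_name])
    return [m for m in models if MODEL_TYPES[m] in allowed]


runnable = filter_models("GCG-Multi", ["baichuan2_7b", "llava_v1_5"])
print(runnable)
```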

The base_num_gpus field is used when scheduling SLURM jobs. It is combined with the num_gpus field in model configs to determine how many GPUs to request in each SLURM job. You may want to change num_gpus and base_num_gpus depending on the GPUs you are using.