Our lab has released the image composition toolbox libcom, which integrates all the functions related to image composition. The toolbox will be continuously upgraded. You are welcome to visit and try it :-)

This is the official code of the following paper:

Inharmonious Region Localization (DIRL)

Jing Liang, Li Niu, Liqing Zhang

MoE Key Lab of Artificial Intelligence, Shanghai Jiao Tong University

(ICME 2021 | Bibtex)

Our latest research on Inharmonious Region Localization was accepted by AAAI 2022:

Inharmonious Region Localization by Magnifying Domain Discrepancy (MadisNet)

Jing Liang¹, Li Niu¹, Penghao Wu¹, Fengjun Guo², Teng Long²

¹MoE Key Lab of Artificial Intelligence, Shanghai Jiao Tong University

²INTSIG

[paper|code]

Here are some examples of inharmonious images (top row) and their inharmonious region masks (bottom row). These inharmonious regions can be inferred by comparing their color or illumination with the surrounding area.

The left part of the following figure shows our proposed DIRL (Deep Inharmonious Region Localization) network. The right part details our proposed Bi-directional Feature Integration (BFI) block, Mask-guided Dual Attention (MDA) block, and Global-context Guided Decoder (GGD) block.

- Install PyTorch>=1.0 following the official instructions

- git clone https://github.com/bcmi/DIRL.git

- Install dependencies: pip install -r requirements.txt
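Since the code requires PyTorch>=1.0, a quick sanity check after installation might look like the following. This is a minimal sketch and not part of the repository; the helper name `torch_version_ok` is hypothetical, and it only compares the leading major.minor numbers of a version string such as `torch.__version__`.

```python
import re


def torch_version_ok(version: str, minimum: tuple = (1, 0)) -> bool:
    """Hypothetical helper: True if `version` (e.g. torch.__version__) is >= `minimum`.

    Only the leading 'major.minor' numbers are compared, so local build
    suffixes such as '+cu110' and pre-release tags are ignored.
    """
    match = re.match(r"(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    return (int(match.group(1)), int(match.group(2))) >= minimum


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        print("PyTorch is not installed")
    else:
        status = "OK" if torch_version_ok(torch.__version__) else "older than 1.0"
        print(f"torch {torch.__version__}: {status}")
```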

In this paper, we conduct all of the experiments on the recently released harmonization dataset iHarmony4.

One concern is that the inharmonious region in an inharmonious image may be ambiguous, because the background could also be treated as the inharmonious region. To avoid this ambiguity, we only use the inharmonious images without their paired harmonious images, and simply discard images whose foreground occupies more than 50% of the image area; these account for only about 2% of the whole dataset.
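The filtering rule above can be expressed as a foreground-area ratio computed from the binary mask. The sketch below is only an illustration, not the script used to produce the released lists; the function names `foreground_ratio` and `keep_image` and the binarization threshold of 127 for 8-bit masks are assumptions.

```python
from typing import Sequence


def foreground_ratio(mask: Sequence[Sequence[int]], threshold: int = 127) -> float:
    """Fraction of pixels above `threshold` (assumes an 8-bit mask, 255 = foreground).

    Works on nested lists or on a 2-D NumPy array, since both iterate row by row.
    """
    total = 0
    foreground = 0
    for row in mask:
        for value in row:
            total += 1
            if value > threshold:
                foreground += 1
    if total == 0:
        raise ValueError("empty mask")
    return foreground / total


def keep_image(mask: Sequence[Sequence[int]], max_ratio: float = 0.5) -> bool:
    """Hypothetical filter: keep the image only if the foreground covers at most 50%."""
    return foreground_ratio(mask) <= max_ratio
```

For example, a 2x2 mask with one foreground pixel has a ratio of 0.25 and is kept, while one with three foreground pixels (0.75) is discarded. Keeping exactly 50% matches the "le50" (less than or equal to 50%) naming of the list files.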

After this filtering, the training set contains 64255 images and the test set 7237 images, listed in the le50_train.txt and le50_test.txt files in this project. You can further divide the training list into a training set and a validation set; we randomly choose 10% of the items in le50_train.txt as the validation set.
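A random 90%/10% split of the training list could be sketched as follows. This is an assumption-laden illustration rather than the split used in the paper: the function names, the fixed seed, and the output file names are hypothetical, and it assumes one list entry per line.

```python
import random
from typing import List, Tuple


def split_train_val(lines: List[str], val_fraction: float = 0.1,
                    seed: int = 0) -> Tuple[List[str], List[str]]:
    """Randomly hold out `val_fraction` of the list entries for validation.

    Blank lines are dropped; a fixed seed keeps the split reproducible.
    """
    items = [line.strip() for line in lines if line.strip()]
    rng = random.Random(seed)
    rng.shuffle(items)
    n_val = round(len(items) * val_fraction)
    return items[n_val:], items[:n_val]


def write_split(list_path: str, train_path: str, val_path: str,
                val_fraction: float = 0.1, seed: int = 0) -> None:
    """Read a list file such as le50_train.txt and write the two subsets."""
    with open(list_path) as f:
        train, val = split_train_val(f.readlines(), val_fraction, seed)
    for path, subset in ((train_path, train), (val_path, val)):
        with open(path, "w") as f:
            f.writelines(item + "\n" for item in subset)
```

Usage would be something like `write_split("le50_train.txt", "train_split.txt", "val_split.txt")`, where the two output names are hypothetical; check the data loader for the list names it actually reads.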

If you want to use other datasets, please follow the dataset loader file data/ihd_dataset.py.
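When adapting a new dataset, the loader has to pair each composite image with its inharmonious region mask. The sketch below assumes the iHarmony4 naming convention, in which `<subset>/composite_images/<name>_<k>_<j>.jpg` has the mask `<subset>/masks/<name>_<k>.png`. It is not taken from data/ihd_dataset.py, and the function name `mask_path_for` is hypothetical; consult that file for the exact logic, including how list entries are joined with `--dataset_root`.

```python
import posixpath


def mask_path_for(composite_path: str) -> str:
    """Hypothetical helper mapping an iHarmony4-style composite path to its mask path.

    '<subset>/composite_images/<name>_<k>_<j>.jpg' -> '<subset>/masks/<name>_<k>.png'
    Other datasets need their own mapping rule.
    """
    directory, filename = posixpath.split(composite_path)
    parent, folder = posixpath.split(directory)
    if folder != "composite_images":
        raise ValueError(f"not a composite image path: {composite_path!r}")
    stem = posixpath.splitext(filename)[0]
    # Drop the trailing '_<j>' (composite variant index) to get the mask name.
    mask_stem = stem.rsplit("_", 1)[0]
    return posixpath.join(parent, "masks", mask_stem + ".png")
```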

Please specify the parameters in the bash files before running them. We provide example training and test scripts: train.sh and test.sh.

One quick training command:

python3 dirl_train.py --dataset_root <PATH_TO_DATASET> --checkpoints_dir <PATH_TO_SAVE> --batch_size 8 --gpu_ids 0

Pretrained model: Google Drive | Baidu Cloud (access code: hvto)

Download the model and put it in the directory <save_dir>, where <save_dir> should be the same as the bash parameter <checkpoints_dir>.

Here we show a qualitative comparison with state-of-the-art methods from other related fields:

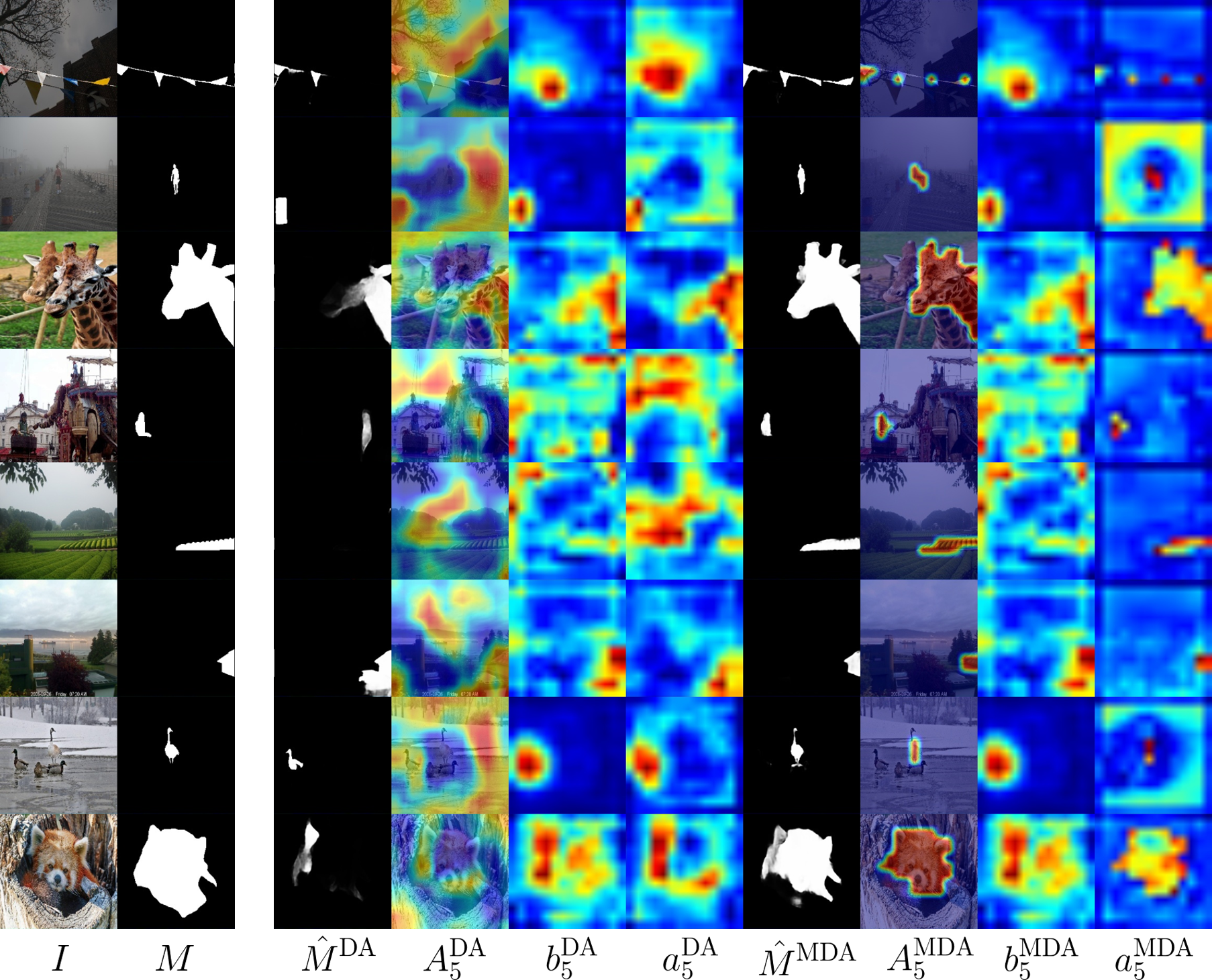

Besides, we visualize the refined features from our proposed Mask-guided Dual Attention (MDA) block and compare them with those from the vanilla Dual Attention (DA) block:

If you find this work or code helpful in your research, please cite:

@inproceedings{Liang2021InharmoniousRL,
  title={Inharmonious Region Localization},
  author={Jing Liang and Li Niu and Liqing Zhang},
  booktitle={ICME},
  year={2021}
}

[1] Inharmonious Region Localization. Jing Liang, Li Niu, Liqing Zhang. Accepted by ICME 2021.