This project is an Android version of the "acoustic feature camera" that I developed in my other project, acoustic features.

This app supports both training a CNN and running inference for acoustic event detection: code

I reused part of this code, which I originally wrote in C for STM32 MCUs.

The DSP part uses JTransforms.

I confirmed that the app runs on the LG Nexus 5X and the Google Pixel 4. The code is short and largely self-explanatory; the one thing worth noting is that the app saves all feature files in JSON format under "/Android/data/audio.processing.spectrogram/files/Music".

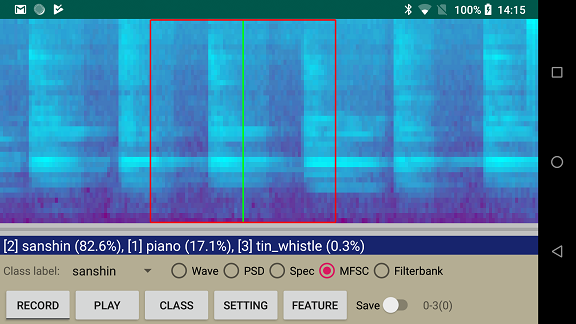

I developed my own color map function, "Coral reef sea", which converts a grayscale image into the colors of the sea in Okinawa.

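    // Map each grayscale level (0-255) to an opaque ARGB color.
    private fun applyColorMap(src: IntArray, dst: IntArray) {
        for (i in src.indices) {
            val mag = src[i]
            // Red falls, green tracks the level, and blue rises as the level grows.
            dst[i] = Color.argb(0xff, 128 - mag / 2, mag, 128 + mag / 2)
        }
    }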
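
To preview saved features with the same colors outside the app (for example in the notebook), the color map can be ported to Python. This is a minimal sketch, not code from the project: the names `coral_reef_sea` and `apply_color_map` are hypothetical, and the input is assumed to be grayscale levels in the 0-255 range.

```python
def coral_reef_sea(mag):
    """Map one grayscale level (0..255) to an (R, G, B) tuple."""
    # Floor division mirrors Kotlin's Int division in applyColorMap.
    return (128 - mag // 2, mag, 128 + mag // 2)


def apply_color_map(src):
    """Apply the color map to a flat sequence of grayscale levels."""
    return [coral_reef_sea(mag) for mag in src]
```

Dark pixels come out purple-blue, `(128, 0, 128)` for level 0, and bright pixels come out cyan, `(1, 255, 255)` for level 255.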

This is a notebook for training a CNN model for musical instrument recognition: Jupyter notebook.

Each audio feature corresponds to a grayscale image of size 64 (W) x 40 (H).

Converting a Keras model into a TFLite model takes just two lines of code:

    converter = tf.lite.TFLiteConverter.from_keras_model(model)
    tflite_model = converter.convert()

For the "musical instruments" use case, the notebook generates two files, "musical_instruments-labels.txt" and "musical_instruments.tflite". I rename the files and place them under the "assets" folder of the Android app:

    -- assets --+-- labels.txt
                |
                +-- aed.tflite
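
The rename-and-copy step can be scripted. The sketch below is only an illustration: `install_model` is a hypothetical helper, and the source and destination folders are parameters because the actual project paths are not given here.

```python
import shutil
from pathlib import Path


def install_model(notebook_dir, assets_dir, use_case="musical_instruments"):
    """Copy <use_case>.tflite and <use_case>-labels.txt into the assets
    folder under the fixed names aed.tflite and labels.txt."""
    src, dst = Path(notebook_dir), Path(assets_dir)
    dst.mkdir(parents=True, exist_ok=True)  # create the assets folder if missing
    model = dst / "aed.tflite"
    labels = dst / "labels.txt"
    shutil.copyfile(src / f"{use_case}.tflite", model)
    shutil.copyfile(src / f"{use_case}-labels.txt", labels)
    return model, labels
```

Copying rather than moving keeps the notebook output intact, so several use cases can be swapped into the app without retraining.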

Basically, a short-time FFT is applied to the raw PCM data to obtain the audio feature as a grayscale image.

    << MEMS mic >>
          |
          V
    [16bit PCM data]            16kHz mono sampling
          |
    [ Pre-emphasis ]            FIR, alpha = 0.97
          |
    [Overlapping frames (50%)]
          |
    [Windowing]                 Hann window
          |
    [ Real FFT ]
          |
    [ PSD ]                     Absolute values of real FFT / N
          |
    [Filterbank (MFSCs)]        40 filters for obtaining Mel frequency spectral coefficients
          |
    [Log scale]                 20*log10(x) in dB
          |
    [Normalization]             0-255 range (like a grayscale image)
          |
          V
    << Audio feature >>         64 bins x 40 mel filters
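
The pipeline above can be sketched in plain Python as follows. This is a minimal sketch under stated assumptions, not the app's Kotlin code: the frame length of 512 samples, the triangular filters spanning 0 Hz to half the sampling rate, the common 2595/700 mel formula, and min-max normalization over the whole feature are all assumptions, and a small recursive FFT stands in for JTransforms.

```python
import cmath
import math

SAMPLE_RATE = 16000  # from the pipeline above
FRAME_LEN = 512      # assumption: the frame length is not stated here
NUM_FILTERS = 40     # from the pipeline above
ALPHA = 0.97         # pre-emphasis coefficient from the pipeline above


def pre_emphasis(pcm, alpha=ALPHA):
    """First-order FIR filter: y[n] = x[n] - alpha * x[n-1]."""
    return [pcm[0]] + [pcm[n] - alpha * pcm[n - 1] for n in range(1, len(pcm))]


def frames(signal, frame_len=FRAME_LEN):
    """Split the signal into frames that overlap by 50%."""
    hop = frame_len // 2
    return [signal[i:i + frame_len]
            for i in range(0, len(signal) - frame_len + 1, hop)]


def hann(n_points):
    return [0.5 - 0.5 * math.cos(2 * math.pi * n / (n_points - 1))
            for n in range(n_points)]


def fft(x):
    """Recursive radix-2 FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    even, odd = fft(x[0::2]), fft(x[1::2])
    tw = [cmath.exp(-2j * cmath.pi * k / n) * odd[k] for k in range(n // 2)]
    return ([even[k] + tw[k] for k in range(n // 2)] +
            [even[k] - tw[k] for k in range(n // 2)])


def magnitude_spectrum(frame, window):
    """|FFT| / N for the non-negative frequencies (the PSD step above)."""
    n = len(frame)
    spectrum = fft([s * w for s, w in zip(frame, window)])
    return [abs(c) / n for c in spectrum[:n // 2 + 1]]


def hz_to_mel(hz):
    return 2595.0 * math.log10(1.0 + hz / 700.0)


def mel_to_hz(mel):
    return 700.0 * (10 ** (mel / 2595.0) - 1.0)


def mel_filterbank(num_filters=NUM_FILTERS, frame_len=FRAME_LEN, fs=SAMPLE_RATE):
    """Triangular filters spaced evenly on the mel scale from 0 Hz to fs/2."""
    n_bins = frame_len // 2 + 1
    top = hz_to_mel(fs / 2)
    # Filter edges as fractional FFT-bin positions.
    edges = [mel_to_hz(top * i / (num_filters + 1)) * frame_len / fs
             for i in range(num_filters + 2)]
    bank = []
    for m in range(1, num_filters + 1):
        lo, mid, hi = edges[m - 1], edges[m], edges[m + 1]
        bank.append([max(0.0, min((k - lo) / (mid - lo), (hi - k) / (hi - mid)))
                     for k in range(n_bins)])
    return bank


def audio_feature(pcm):
    """16-bit PCM samples -> list of frames, each with 40 values in 0..255."""
    window = hann(FRAME_LEN)
    bank = mel_filterbank()
    log_mel = []
    for frame in frames(pre_emphasis(pcm)):
        psd = magnitude_spectrum(frame, window)
        energies = [sum(w * p for w, p in zip(filt, psd)) for filt in bank]
        # The small floor avoids log10(0) on silent bands.
        log_mel.append([20.0 * math.log10(max(e, 1e-10)) for e in energies])
    lo = min(min(row) for row in log_mel)
    hi = max(max(row) for row in log_mel)
    scale = 255.0 / (hi - lo) if hi > lo else 0.0
    return [[int(round((v - lo) * scale)) for v in row] for row in log_mel]
```

With these assumed sizes, 16,640 samples (about one second at 16 kHz) yield 64 frames of 40 values each, which matches the 64 x 40 feature shape.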

The steps for training a CNN model for acoustic event detection are the same as those for classifying grayscale images.

    << Audio feature >>         64 bins x 40 mel filters
          |
          V
    [CNN training]
          |
          V
    [Keras model(.h5)]
          |
          V
    << TFLite model >>

For inference, the app feeds the same audio feature to the trained TFLite model:

    << Audio feature >>         64 bins x 40 mel filters
          |
          V
    [Trained CNN(.tflite)]
          |
          V
    << Results >>
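
Turning the model output into a readable result can be sketched as below. The sketch assumes that "labels.txt" holds one label per line and that the model emits one score per label in the same order; neither is confirmed here. The function names and the example labels are hypothetical.

```python
def load_labels(text):
    """Parse the contents of labels.txt, assuming one label per line."""
    return [line.strip() for line in text.splitlines() if line.strip()]


def top_result(scores, labels):
    """Return (label, score) for the highest-scoring class."""
    if len(scores) != len(labels):
        raise ValueError("expected exactly one score per label")
    best = max(range(len(scores)), key=lambda i: scores[i])
    return labels[best], scores[best]
```

For example, with the labels "piano", "guitar", and "flute" and the scores `[0.1, 0.7, 0.2]`, `top_result` returns `("guitar", 0.7)`.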

I learned the audio processing techniques for machine learning from this site.