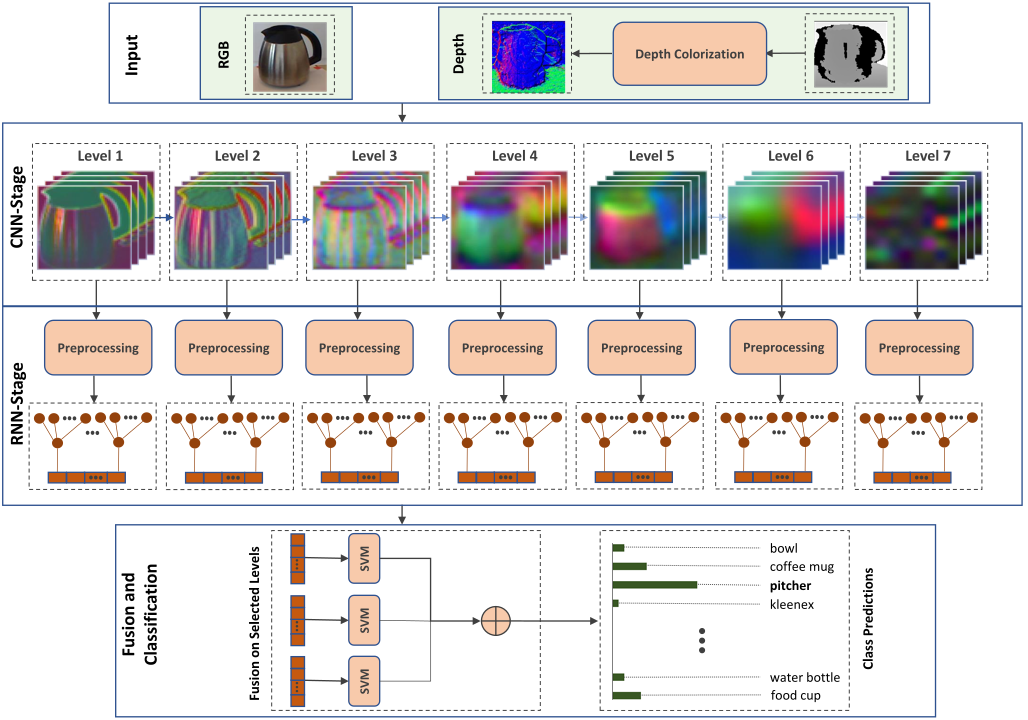

This repository presents the implementation of a general two-stage framework for RGB-D object and scene recognition tasks. The framework employs a convolutional neural network (CNN) model as the underlying feature extractor and a random recursive neural network (RNN) to encode these features into high-level representations. For details, please refer to:

When CNNs Meet Random RNNs: Towards Multi-Level Analysis for RGB-D Object and Scene Recognition

Ali Caglayan, Nevrez Imamoglu, Ahmet Burak Can, Ryosuke Nakamura

[arXiv] [Paper][Demo]

The framework is a general PyTorch-based codebase for RGB-D object and scene recognition. The overall structure is modular and extensible, built around a unified CNN and RNN process, which makes it easy and flexible to use. It can also be extended with new capabilities, combined with different setups, and used with other models to implement new ideas.

This work has been tested on the popular Washington RGB-D Object and SUN RGB-D Scene datasets, demonstrating state-of-the-art results in both object and scene recognition tasks.

- Supports both one-stage CNN feature extraction and two-stage CNN-randRNN feature extraction.

- Applicable to AlexNet, VGGNet-16, ResNet-50, ResNet-101, DenseNet-121 as backbone CNN models.

- Pretrained models can be used directly as fast, fixed feature extractors, or after fine-tuning.

- A novel random pooling strategy, which extends the uniform randomness in RNNs and is applicable to both spatial and channel-wise downsampling, is presented to cope with the high dimensionality of CNN activations.

- A soft voting approach based on individual SVM confidences for multi-modal fusion has been presented.

- An effective depth colorization based on surface normals has been presented.

- Clear and extensible code structure for supporting more datasets and applying new ideas.
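To make the soft voting idea in the list above concrete, here is a minimal sketch of one common form of confidence-based fusion: per-class SVM scores from the RGB and depth streams are normalized and averaged with a mixing weight. The function names, the softmax normalization, and the `rgb_weight` parameter are assumptions made for illustration; the weighting actually used by this framework is described in the paper and may differ.

```python
import math


def softmax(scores):
    """Turn raw per-class confidence scores into probabilities."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def soft_vote(rgb_scores, depth_scores, rgb_weight=0.5):
    """Fuse two modalities by a weighted average of class probabilities.

    rgb_scores / depth_scores: per-class SVM confidences for ONE sample.
    rgb_weight: hypothetical mixing weight, not a value from the paper.
    Returns (predicted_class_index, fused_probabilities).
    """
    if len(rgb_scores) != len(depth_scores):
        raise ValueError("both modalities must score the same classes")
    p_rgb = softmax(rgb_scores)
    p_depth = softmax(depth_scores)
    fused = [rgb_weight * r + (1.0 - rgb_weight) * d
             for r, d in zip(p_rgb, p_depth)]
    best = max(range(len(fused)), key=fused.__getitem__)
    return best, fused


if __name__ == "__main__":
    # Toy confidences for three classes from each modality.
    label, probs = soft_vote([2.0, 0.5, -1.0], [0.1, 0.3, 0.0])
    print("predicted class:", label)
    print("fused probabilities:", [round(p, 3) for p in probs])
```

A confident modality dominates the fused decision, while a nearly flat score vector contributes little, which is the motivation for voting on confidences rather than on hard labels.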

System requirements for each model are reported in the supplementary material. Ideally, use a machine with a multi-core processor, 32 GB of RAM, a graphics card with at least 10 GB of memory, and enough disk space to store models, features, etc., depending on saving choices and initial parameters.

conda has been used as the virtual environment manager and pip as the package manager. It is possible to use either pip or conda (or both) for package management. Before starting, install the following libraries:

- PyTorch

- Scikit-learn and OpenCV

- psutil, h5py, seaborn, and matplotlib libs.

We have installed these libraries with pip as below:

- Create virtual environment.

conda create -n cnnrandrnn python=3.7

conda activate cnnrandrnn

- Install PyTorch according to your system preferences such as OS, package manager, and CUDA version (see more details here):

e.g. pip install torch==1.5.0+cu101 torchvision==0.6.0+cu101 -f https://download.pytorch.org/whl/torch_stable.html

This will install some other libs including numpy, pillow, etc.

- Install scikit-learn and OpenCV libraries:

pip install -U scikit-learn

pip install opencv-python

- Install psutil, h5py, seaborn, and matplotlib libs:

pip install psutil

pip install h5py

pip install seaborn

pip install -U matplotlib
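After installation, a quick check can confirm that everything is importable from the active environment. The sketch below is not part of the repository; the helper name `find_missing` is made up for illustration. It relies only on the standard library, and notes that scikit-learn and opencv-python are imported as `sklearn` and `cv2`.

```python
import importlib.util

# Import names differ from pip package names for two of the libraries:
# scikit-learn is imported as "sklearn" and opencv-python as "cv2".
REQUIRED_MODULES = [
    "torch", "torchvision", "sklearn", "cv2",
    "psutil", "h5py", "seaborn", "matplotlib",
]


def find_missing(modules=REQUIRED_MODULES):
    """Return the module names that cannot be found in this environment."""
    return [name for name in modules
            if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = find_missing()
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All required modules are importable.")
```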

The following directory structure is a reference to run the code as described in this documentation. This structure can be changed according to the command line parameters.

    CNN_randRNN
    ├── data
    │   ├── wrgbd
    │   │   │──eval-set
    │   │   │   ├──apple
    │   │   │   ├──ball
    │   │   │   ├──...
    │   │   │   ├──water_bottle
    │   │   │──split.mat
    │   ├── sunrgbd
    │   │   │──SUNRGBD
    │   │   │   ├──kv1
    │   │   │   ├──kv2
    │   │   │   ├──realsense
    │   │   │   ├──xtion
    │   │   │──allsplit.mat
    │   │   │──SUNRGBDMeta.mat
    │   │   │──organized-set
    │   │   │   ├──Depth_Colorized_HDF5
    │   │   │   │   ├──test
    │   │   │   │   ├──train
    │   │   │   ├──RGB_JPG
    │   │   │   │   ├──test
    │   │   │   │   ├──train
    │   │   │──models-features
    │   │   │   ├──fine_tuning
    │   │   │   │   ├──resnet101_Depth_Colorized_HDF5_best_checkpoint.pth
    │   │   │   │   ├──resnet101_RGB_JPG_best_checkpoint.pth
    │   │   │   ├──overall_pipeline_run
    │   │   │   │   ├──svm_estimators
    │   │   │   │   │   ├──resnet101_Depth_Colorized_HDF5_l5.sav
    │   │   │   │   │   ├──resnet101_Depth_Colorized_HDF5_l6.sav
    │   │   │   │   │   ├──resnet101_Depth_Colorized_HDF5_l7.sav
    │   │   │   │   │   ├──resnet101_RGB_JPG_l5.sav
    │   │   │   │   │   ├──resnet101_RGB_JPG_l6.sav
    │   │   │   │   │   ├──resnet101_RGB_JPG_l7.sav
    │   │   │   │   ├──demo_images
    │   │   │   ├──random_weights
    │   │   │   │   ├──resnet101_reduction_random_weights.pkl
    │   │   │   │   ├──resnet101_rnn_random_weights.pkl
    ├── src
    ├── logs
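Before running anything, it can help to verify that the Washington RGB-D part of this layout is in place. The following is a small sketch, not a script shipped with the repository: the helper `missing_paths` and the list `EXPECTED_PATHS` are illustrative names, and the list covers only a few entries from the reference structure above.

```python
from pathlib import Path

# A few relative paths taken from the reference layout (Washington RGB-D setup).
EXPECTED_PATHS = [
    "data/wrgbd/eval-set",
    "data/wrgbd/split.mat",
    "src",
    "logs",
]


def missing_paths(project_root, expected=EXPECTED_PATHS):
    """Return the expected relative paths that do not exist under project_root."""
    root = Path(project_root)
    return [rel for rel in expected if not (root / rel).exists()]


if __name__ == "__main__":
    # Assumes the script is started from the CNN_randRNN project root.
    missing = missing_paths(".")
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        print("Reference layout found.")
```

If the structure has been changed through the command line parameters, the expected list would need to be adjusted accordingly.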

The Washington RGB-D Object dataset is available here. We have tested our framework using the cropped evaluation set without extra background subtraction. Uncompress the data and place it in data/wrgbd (see the file structure above).

To convert depth maps to colorized RGB-like depth representations:

sh run_steps.sh step="COLORIZED_DEPTH_SAVE"

python main_steps.py --dataset-path "../data/wrgbd/" --data-type "depthcrop" --debug-mode 0

Note that you might need to add /src/utils to the PYTHONPATH (e.g. export PYTHONPATH=$PYTHONPATH:/home/user/path_to_project/CNN_randRNN/src/utils). Setting debug-mode to 1 runs the framework on a small portion of the data (you can choose the size with the debug-size parameter, which sets the number of samples for each instance). This step creates colorized depth images under /data/wrgbd/models-features/colorized_depth_images.
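For intuition about what a surface-normal-based colorization does, here is a deliberately simplified sketch. It is not the repository's implementation: the function `colorize_depth` is hypothetical, it estimates normals from plain finite differences on the depth grid, and it ignores camera intrinsics and missing depth values, which a real pipeline would need to handle.

```python
import math


def colorize_depth(depth):
    """Map a 2D depth grid (list of rows) to RGB triples via surface normals."""
    rows, cols = len(depth), len(depth[0])
    image = []
    for y in range(rows):
        row = []
        for x in range(cols):
            # Central differences, clamped to the grid at the borders.
            x0, x1 = max(x - 1, 0), min(x + 1, cols - 1)
            y0, y1 = max(y - 1, 0), min(y + 1, rows - 1)
            dzdx = (depth[y][x1] - depth[y][x0]) / max(x1 - x0, 1)
            dzdy = (depth[y1][x] - depth[y0][x]) / max(y1 - y0, 1)
            # Unnormalized normal of the surface z = depth(x, y).
            nx, ny, nz = -dzdx, -dzdy, 1.0
            norm = math.sqrt(nx * nx + ny * ny + nz * nz)
            # Shift each unit-normal component from [-1, 1] to [0, 255].
            row.append(tuple(int((c / norm + 1.0) * 0.5 * 255)
                             for c in (nx, ny, nz)))
        image.append(row)
    return image


if __name__ == "__main__":
    # A small ramp: depth increases from left to right.
    ramp = [[0.0, 1.0, 2.0], [0.0, 1.0, 2.0]]
    for line in colorize_depth(ramp):
        print(line)
```

The resulting three-channel image encodes local surface orientation rather than raw distance, which is what lets a CNN pretrained on RGB images be reused on the depth modality.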

Before demonstrating how to run the program, see the explanations for command line parameters with their default values here.

To run the overall pipeline with the default parameter values:

python main.py

This will train and test an SVM for each of the 7 levels. You may want to comment out the levels other than the optimum ones. It is also possible to run the system step by step. See the details here.

This codebase is presented based on Washington RGB-D object recognition. It can also be applied to the SUN RGB-D Scene dataset; please see the details here. This can also be considered a reference guide for using other datasets.

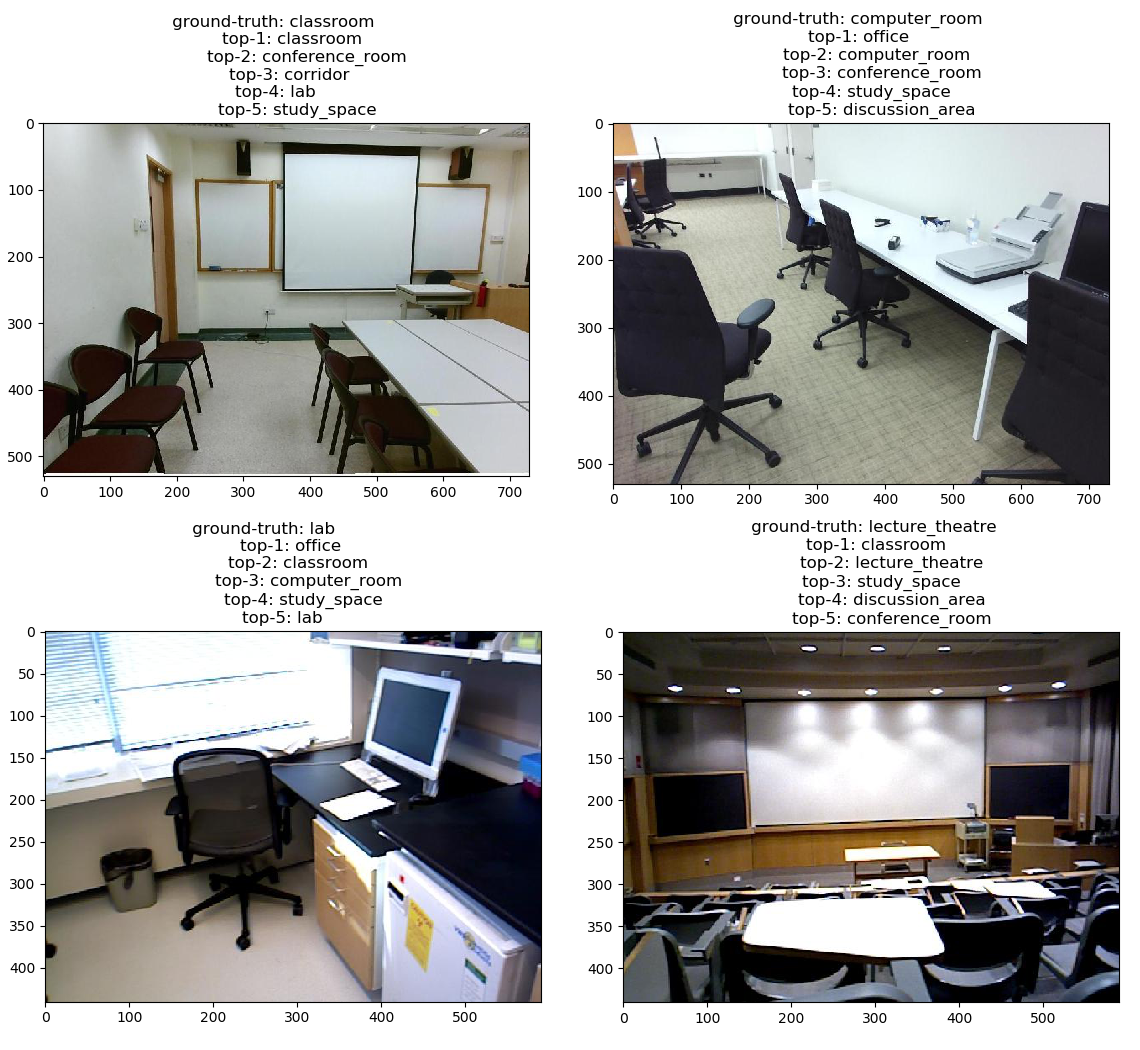

A demo application using RGB images is presented. Download trained models and RNN random weights here. Uncompress the folder and place it according to the file structure given above.

There are two run modes. Run the demo application with the default parameters for each mode as below:

python demo.py --mode "image"

python demo.py --mode "camera"


The image mode takes the images in the demo_images folder, while the camera mode takes camera images as inputs.

If you find this work useful in your research, please consider citing:

@article{Caglayan2022CNNrandRNN,

title={When CNNs meet random RNNs: Towards multi-level analysis for RGB-D object and scene recognition},

journal = {Computer Vision and Image Understanding},

author={Ali Caglayan and Nevrez Imamoglu and Ahmet Burak Can and Ryosuke Nakamura},

volume = {217},

pages = {103373},

issn = {1077-3142},

doi = {https://doi.org/10.1016/j.cviu.2022.103373},

year={2022}

}

This project is released under the MIT License (see the LICENSE file for details).

This paper is based on the results obtained from a project commissioned by the New Energy and Industrial Technology Development Organization (NEDO).