This is a clean and robust PyTorch implementation of Noisy-Duel-DDQN on Atari.
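The name Noisy-Duel-DDQN stacks three extensions on top of DQN. Two of them change how Q-values and learning targets are computed. The dueling head adds a state value V(s) to mean-centred advantages A(s, a). Double DQN lets the online network choose the next action while the target network evaluates it. Below is a minimal, framework-free sketch of both, assuming a single transition and plain Python lists in place of PyTorch tensors. The function names and toy numbers are hypothetical, and this is not this repo's implementation.

```python
from typing import List, Sequence


def dueling_q(value: float, advantages: Sequence[float]) -> List[float]:
    """Dueling aggregation: Q(s, a) = V(s) + A(s, a) - mean over a' of A(s, a')."""
    mean_adv = sum(advantages) / len(advantages)
    return [value + a - mean_adv for a in advantages]


def argmax(xs: Sequence[float]) -> int:
    """Index of the largest entry (the first one wins on ties)."""
    return max(range(len(xs)), key=lambda i: xs[i])


def dqn_target(reward: float, done: bool, gamma: float,
               q_target_next: Sequence[float]) -> float:
    """Vanilla DQN: the target network both selects and evaluates a'."""
    return reward + gamma * (1.0 - float(done)) * max(q_target_next)


def double_dqn_target(reward: float, done: bool, gamma: float,
                      q_online_next: Sequence[float],
                      q_target_next: Sequence[float]) -> float:
    """Double DQN: the online network selects a', the target network evaluates it."""
    a_star = argmax(q_online_next)
    return reward + gamma * (1.0 - float(done)) * q_target_next[a_star]


# Toy Q-values for the next state s' with 3 actions, each built by a dueling head.
q_online_next = dueling_q(1.0, [2.0, 0.0, -2.0])
q_target_next = dueling_q(0.5, [-1.0, 1.0, 0.0])

print(dqn_target(1.0, False, 0.99, q_target_next))
print(double_dqn_target(1.0, False, 0.99, q_online_next, q_target_next))
```

With these toy numbers the vanilla target bootstraps from the target network's own maximum (1.5). The double target instead uses action 0, chosen by the online network, and bootstraps from -0.5. This illustrates how decoupling action selection from evaluation damps the overestimation introduced by the max operator.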

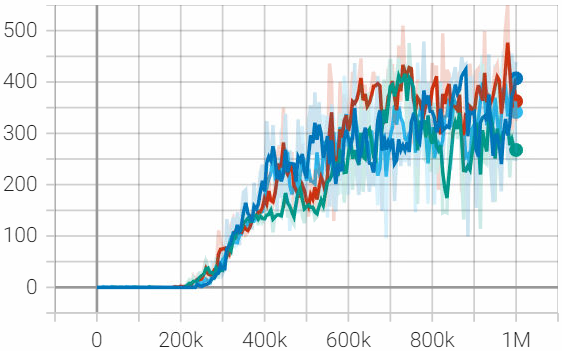

| Pong | Enduro |
|---|---|

All experiments are trained with the same hyperparameters. Other RL algorithms implemented in PyTorch can be found here.

```
gymnasium==0.29.1
numpy==1.26.1
pytorch==2.1.0
python==3.11.5
```

P.S. You can install the Atari environment via `pip install gymnasium[atari] gymnasium[accept-rom-license]`.

```bash
python main.py # Train PongNoFrameskip-v4 with DQN
python main.py --Double True --Duel False --Noisy False # Use Double DQN
python main.py --Double False --Duel True --Noisy False # Use Duel DQN
python main.py --Double False --Duel False --Noisy True # Use Noisy DQN
python main.py --Double True --Duel True --Noisy True # Use Double Duel Noisy DQN
```

If you want to train on different environments, just run

```bash
python main.py --EnvIdex 20 # Train EnduroNoFrameskip-v4 with DQN
```

The `--EnvIdex` can be set to any integer from 1 to 57, where

```
1: "Alien",
2: "Amidar",
...
20: "Enduro",
...
57: "Zaxxon"
```

For more details, please refer to `AtariNames.py`.
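As an illustration of how an `--EnvIdex` value could turn into a Gymnasium environment id, here is a small sketch. It assumes the id is the game name followed by `NoFrameskip-v4`, as in the `PongNoFrameskip-v4` and `EnduroNoFrameskip-v4` examples above. The dictionary holds only the entries mentioned in this README, and `ATARI_NAMES` and `env_id_from_index` are hypothetical names, not necessarily what `AtariNames.py` defines.

```python
# Hypothetical partial index -> game-name table (the full 57-entry list is in AtariNames.py).
ATARI_NAMES = {
    1: "Alien",
    2: "Amidar",
    20: "Enduro",
    37: "Pong",
    57: "Zaxxon",
}


def env_id_from_index(env_index: int, suffix: str = "NoFrameskip-v4") -> str:
    """Map an --EnvIdex value to a Gymnasium Atari environment id (assumed naming scheme)."""
    if not 1 <= env_index <= 57:
        raise ValueError(f"--EnvIdex must be in 1~57, got {env_index}")
    try:
        name = ATARI_NAMES[env_index]
    except KeyError:
        raise KeyError(f"index {env_index} is not in this partial table") from None
    return name + suffix


print(env_id_from_index(20))  # EnduroNoFrameskip-v4
print(env_id_from_index(37))  # PongNoFrameskip-v4
```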

Note that the hyperparameters in this code are a light version (we only use a replay buffer of size 10000 to save memory). Thus, the default hyperparameters may not perform well on all games. If you want more robust hyperparameters, please check the DQN paper (Nature).
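To give a sense of why the buffer was kept small, here is rough arithmetic for the observation memory of a replay buffer. It assumes the usual Nature-DQN preprocessing (84×84 grayscale frames, 4 stacked, stored as uint8) and a naive layout that stores both s and s' for every transition. This repo's actual storage layout may differ. The Nature paper used a replay memory of 1,000,000 transitions. `replay_buffer_bytes` is a hypothetical helper.

```python
def replay_buffer_bytes(capacity: int, height: int = 84, width: int = 84,
                        stack: int = 4, bytes_per_pixel: int = 1) -> int:
    """Approximate observation memory of a naive replay buffer.

    Each transition stores both s and s' as stacked frames; actions, rewards
    and done flags are ignored because they are tiny by comparison.
    """
    obs_bytes = height * width * stack * bytes_per_pixel
    return capacity * 2 * obs_bytes


for capacity in (10_000, 1_000_000):  # light setting here vs. the Nature paper
    gib = replay_buffer_bytes(capacity) / 2**30
    print(f"capacity={capacity:>9,}: ~{gib:.2f} GiB")
```

Under these assumptions a 10,000-transition buffer needs roughly 0.53 GiB for observations, whereas 1,000,000 transitions would need roughly 52.6 GiB. Storing frames as float32 instead of uint8 would multiply both figures by 4.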

```bash
python main.py --render True --EnvIdex 20 --Double True --Duel True --Noisy False --Loadmodel True --ModelIdex 900 # Play with Enduro
python main.py --render True --EnvIdex 37 --Double True --Duel True --Noisy True --Loadmodel True --ModelIdex 700 # Play with Pong
```

You can use TensorBoard to record and visualize the training curves.

- Installation (please make sure PyTorch is already installed):

  ```bash
  pip install tensorboard
  pip install packaging
  ```

- Record (the training curves will be saved in `runs/`):

  ```bash
  python main.py --write True
  ```

- Visualization:

  ```bash
  tensorboard --logdir runs
  ```

For more details on the hyperparameter settings, please check `main.py`.

NoisyNet DQN: Fortunato M, Azar M G, Piot B, et al. Noisy networks for exploration[J]. arXiv preprint arXiv:1706.10295, 2017.
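The NoisyNet reference above replaces ε-greedy exploration with learnable noise on the network weights. Below is a minimal, framework-free sketch of a noisy linear layer with factorized Gaussian noise as described in that paper. It uses the noise-shaping function f(x) = sgn(x)·√|x|, initializes μ from U[−1/√p, 1/√p], and sets σ to σ₀/√p with σ₀ = 0.5, where p is the number of inputs. It is not the layer used in this repo, and the class name `NoisyLinearSketch` is hypothetical. A PyTorch version would typically hold μ and σ as `nn.Parameter` tensors and redraw the noise each training step.

```python
import math
import random
from typing import List, Optional, Sequence


def f(x: float) -> float:
    """Noise-shaping function from the NoisyNet paper: sgn(x) * sqrt(|x|)."""
    return math.copysign(math.sqrt(abs(x)), x)


class NoisyLinearSketch:
    """Framework-free noisy linear layer with factorized Gaussian noise.

    y_j = sum_i (mu_w[j][i] + sigma_w[j][i] * eps_in[i] * eps_out[j]) * x_i
          + mu_b[j] + sigma_b[j] * eps_out[j]
    where eps_in and eps_out already have f() applied.
    """

    def __init__(self, in_features: int, out_features: int,
                 sigma0: float = 0.5, rng: Optional[random.Random] = None):
        self.rng = rng or random.Random()
        bound = 1.0 / math.sqrt(in_features)          # mu ~ U[-1/sqrt(p), 1/sqrt(p)]
        sigma_init = sigma0 / math.sqrt(in_features)  # sigma = sigma0 / sqrt(p)
        self.mu_w = [[self.rng.uniform(-bound, bound) for _ in range(in_features)]
                     for _ in range(out_features)]
        self.sigma_w = [[sigma_init] * in_features for _ in range(out_features)]
        self.mu_b = [self.rng.uniform(-bound, bound) for _ in range(out_features)]
        self.sigma_b = [sigma_init] * out_features
        self.reset_noise()

    def reset_noise(self) -> None:
        """Draw p + q standard normals (instead of p*q + q) and shape them with f."""
        self.eps_in = [f(self.rng.gauss(0.0, 1.0)) for _ in range(len(self.mu_w[0]))]
        self.eps_out = [f(self.rng.gauss(0.0, 1.0)) for _ in range(len(self.mu_b))]

    def forward(self, x: Sequence[float], noisy: bool = True) -> List[float]:
        """Apply the layer; noisy=False uses only the mean parameters."""
        out = []
        for j in range(len(self.mu_b)):
            acc = 0.0
            for i, xi in enumerate(x):
                w = self.mu_w[j][i]
                if noisy:
                    w += self.sigma_w[j][i] * self.eps_in[i] * self.eps_out[j]
                acc += w * xi
            bias = self.mu_b[j]
            if noisy:
                bias += self.sigma_b[j] * self.eps_out[j]
            out.append(acc + bias)
        return out


layer = NoisyLinearSketch(4, 2, rng=random.Random(0))
x = [1.0, 0.5, -0.5, 2.0]
print(layer.forward(x))               # output under the current noise sample
layer.reset_noise()
print(layer.forward(x))               # a fresh noise sample gives a different output
print(layer.forward(x, noisy=False))  # mean weights only
```

Factorizing the noise means each layer samples only p + q Gaussians per reset rather than one per weight, which is the variant the paper uses for DQN.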