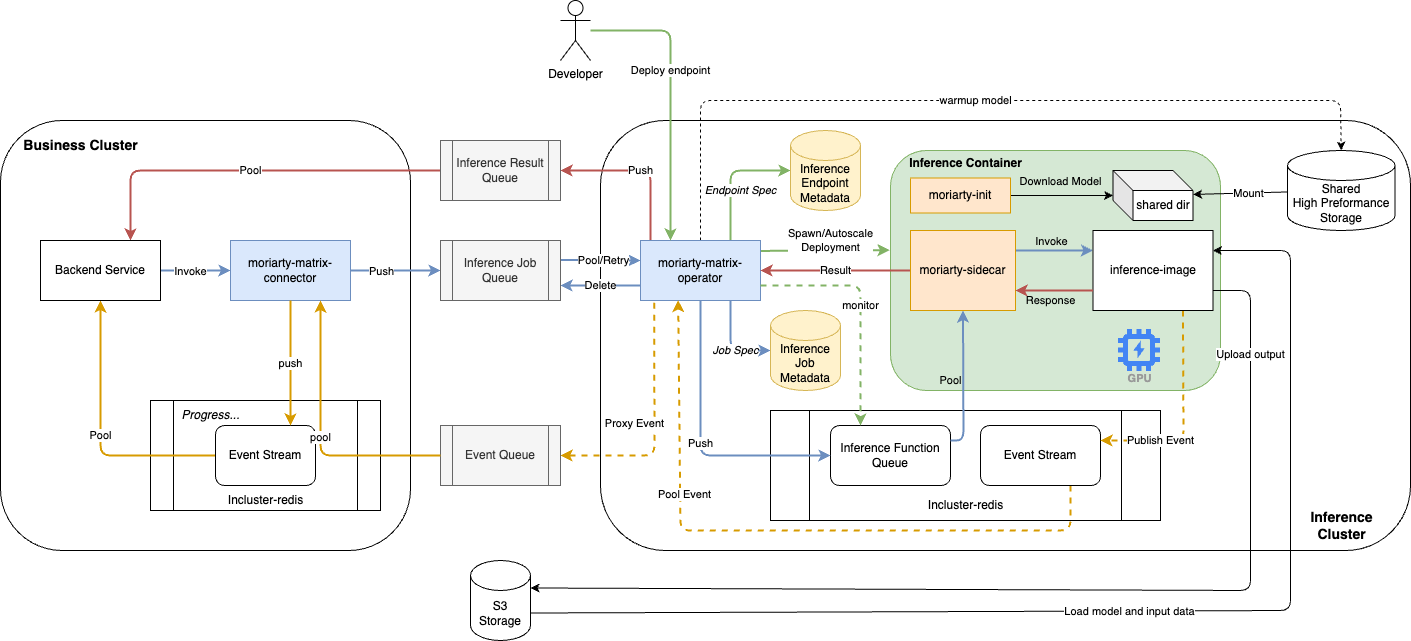

Moriarty is a set of components for building asynchronous inference clusters.

Built on a cloud vendor's queue service or a self-hosted global queue, an asynchronous inference cluster can run without exposing any ports to the public.

- Prevent client timeouts.

- Avoid HTTP disconnections caused by network or other issues.

- Reduce HTTP queries by using queues.

- Deploy on multi-cloud, hybrid cloud, private cloud, or even bare metal.
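To see why routing requests through a queue avoids client timeouts, consider this minimal in-process sketch written with Python's standard library. The functions `submit`, `get_result`, and `worker` are hypothetical illustrations, not Moriarty's API, and `queue.Queue` stands in for a cloud or self-hosted global queue. The client gets a job ID back immediately instead of holding an HTTP connection open, and the worker only pulls work, so it never needs an inbound port.

```python
import queue
import threading
import uuid
from typing import Optional

# Hypothetical stand-ins for a global job queue and a result store.
job_queue: "queue.Queue[tuple[str, dict]]" = queue.Queue()
results: dict[str, dict] = {}
results_lock = threading.Lock()


def submit(payload: dict) -> str:
    """Enqueue a job and return its ID immediately (no long-lived HTTP call)."""
    job_id = uuid.uuid4().hex
    job_queue.put((job_id, payload))
    return job_id


def get_result(job_id: str) -> Optional[dict]:
    """Return the result if the job has finished, else None (client polls later)."""
    with results_lock:
        return results.get(job_id)


def worker() -> None:
    """Consumer: pulls jobs from the queue; it opens no inbound port."""
    while True:
        job_id, payload = job_queue.get()
        # Placeholder "inference": double every number in the input.
        output = {"output": [x * 2 for x in payload["input"]]}
        with results_lock:
            results[job_id] = output
        job_queue.task_done()


threading.Thread(target=worker, daemon=True).start()

job_id = submit({"input": [1, 2, 3]})
job_queue.join()  # for the demo only; a real client would poll get_result()
print(get_result(job_id))  # {'output': [2, 4, 6]}
```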

This project came from my extensive use of Asynchronous Inference for AWS SageMaker. As far as I know, only AWS and Aliyun provide asynchronous inference support.

Among open-source projects there are many deployment solutions, but most of them provide synchronous inference (based on HTTP or RPC), and I haven't found an alternative for asynchronous inference. Kubeflow Pipelines might be usable for asynchronous inference, but without serving support (keeping the model on the GPU as a service instead of loading it for each job), there is significant overhead in GPU memory caching and model loading time.

Key Components:

- Matrix: single producer, multiple consumers.

  - `Connector` as producer: provides an HTTP API for the backend service and pushes invoke requests to the global job queue.
  - `Operator` as consumer: pulls tasks from the job queue and pushes them to a local queue. Whether it pulls depends on the load of the inference cluster. `Operator` also autoscales inference containers if needed.

- Endpoint: Deploys a function as an HTTP service.

- Sidecar: Proxies queue messages and transforms them into HTTP requests.

- Init: Initialization script for the inference container.
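The division of labor between Connector, Operator, and Sidecar can be sketched in standard-library Python. Everything here is a hypothetical illustration, not Moriarty's implementation: the message fields (`inference_id`, `payload`), using the local queue length as the load signal, the replica formula, the `X-Inference-Id` header, and the endpoint URL `http://127.0.0.1:8080/invoke` are all assumptions.

```python
import json
import math
import queue
import urllib.request

# Hypothetical global job queue filled by the Connector (producer).
global_queue: "queue.Queue[dict]" = queue.Queue()
# Hypothetical local queue owned by the Operator (consumer).
local_queue: "queue.Queue[dict]" = queue.Queue()


def connector_invoke(inference_id: str, payload: dict) -> None:
    """Producer: accept an invoke request and push it to the global queue."""
    global_queue.put({"inference_id": inference_id, "payload": payload})


def operator_pull(max_local: int) -> int:
    """Consumer: pull from the global queue only while local load allows it.

    Assumption: load is approximated by the local queue length.
    Returns the number of messages moved to the local queue.
    """
    moved = 0
    while local_queue.qsize() < max_local:
        try:
            msg = global_queue.get_nowait()
        except queue.Empty:
            break
        local_queue.put(msg)
        moved += 1
    return moved


def desired_replicas(backlog: int, per_replica: int, min_r: int, max_r: int) -> int:
    """Illustrative autoscaling rule: enough replicas for the backlog, clamped."""
    needed = math.ceil(backlog / per_replica) if per_replica > 0 else min_r
    return max(min_r, min(max_r, needed))


def sidecar_to_request(msg: dict, endpoint: str) -> urllib.request.Request:
    """Sidecar: turn a queue message into an HTTP POST for the inference container."""
    body = json.dumps(msg["payload"]).encode("utf-8")
    return urllib.request.Request(
        endpoint,
        data=body,
        headers={"Content-Type": "application/json", "X-Inference-Id": msg["inference_id"]},
        method="POST",
    )


for i in range(5):
    connector_invoke(f"job-{i}", {"input": i})

print(operator_pull(max_local=3))  # 3: the other 2 jobs stay in the global queue
print(desired_replicas(backlog=5, per_replica=2, min_r=1, max_r=4))  # 3
req = sidecar_to_request(local_queue.get(), "http://127.0.0.1:8080/invoke")
print(req.get_method(), req.full_url)  # POST http://127.0.0.1:8080/invoke
```

The request is only built, not sent, so the sketch runs without a live inference container; `urllib.request.urlopen(req)` would send it.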

CLIs:

- `moriarty-matrix`: Manage matrix components.

- `moriarty-operator`: Start the operator component.

- `moriarty-connector`: Start the connector component.

- `moriarty-sidecar`: Start the sidecar component.

- `moriarty-deploy`: Request the operator's API or database to deploy inference endpoints.

Install all components with `pip install moriarty[matrix]`.

Or use the Docker image:

`docker pull wh1isper/moriarty` or `docker pull ghcr.io/wh1isper/moriarty:dev`

`docker pull wh1isper/moriarty:dev` for the development version.

Install pre-commit before committing:

`pip install pre-commit`

`pre-commit install`

Install the package locally with test dependencies:

`pip install -e .[test]`

Run the tests with pytest:

`pytest -v tests/`