When answering questions, it is best to stick to topics one is familiar with. This is doubly true for chatbots. The question is: how do we make a bot stick to a particular topic? In this example, we will cover:

- Building the bot

- Walking through conversations

- A brief walkthrough of launching a bot with topical rails

Before diving deeper, let's pick a topic: "Jobs Report". Each month, the US Bureau of Labor Statistics publishes a jobs report. In this walkthrough, we will build a basic bot that answers questions related to the report but politely declines to answer questions about any other subject. The bot's answers will be based on a file we provide as a knowledge base, which in this case is `report.md`. Since our bot's world is divided into two domains, "jobs" and "off-topic questions", for simplicity's sake we create two Colang files: `jobs.co` and `off-topic.co`. We also provide some general instructions and model details in a configuration file, `config.yml`.

Let's start with the configuration file (`config.yml`). At a high level, it contains three key details:

- A general instruction: Users can specify general, system-level instructions for the bot. In this instance, we specify that the bot is responsible for answering questions about the jobs report. We also specify the bot's behavioral characteristics; for instance, we want it to be concise and to answer only truthfully.

  ```yaml
  instructions:
    - type: general
      content: |
        Below is a conversation between a bot and a user about the recent job reports.
        The bot is factual and concise. If the bot does not know the answer to a
        question, it truthfully says it does not know.
  ```

- Specifying which model to use: Users can select from a wide range of large language models to act as the backbone of the bot. In this case, we select OpenAI's `text-davinci-003`.

  ```yaml
  models:
    - type: main
      engine: openai
      model: text-davinci-003
  ```

- Providing sample conversations: To ensure that the large language model understands how to converse with the user, we provide a few sample conversations. Below is a small snippet of such a conversation:

  ```yaml
  sample_conversation: |
    user "Hello there!"
      express greeting
    bot express greeting
      "Hello! How can I assist you today?"
    user "What can you do for me?"
      ask about capabilities
    ...
  ```
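The instruction sets the bot's behavior, while the sample conversation gives the model a few-shot example of the expected dialogue format. The sketch below shows one way such pieces could be concatenated into a single prompt for the main model. It is a hypothetical illustration: the function `build_prompt` and the prompt layout are assumptions, not the template NeMo Guardrails actually uses.

```python
# Hypothetical sketch: this is NOT the prompt template NeMo Guardrails uses internally.

# The general instruction from config.yml.
INSTRUCTIONS = (
    "Below is a conversation between a bot and a user about the recent job reports.\n"
    "The bot is factual and concise. If the bot does not know the answer to a\n"
    "question, it truthfully says it does not know."
)

# A trimmed copy of the sample_conversation from config.yml (the few-shot example).
SAMPLE_CONVERSATION = '''user "Hello there!"
  express greeting
bot express greeting
  "Hello! How can I assist you today?"
'''


def build_prompt(instructions: str, sample_conversation: str, user_message: str) -> str:
    """Join the instruction, the few-shot sample, and the new user turn with blank lines."""
    parts = [
        instructions.strip(),
        sample_conversation.strip(),
        f'user "{user_message}"',
    ]
    return "\n\n".join(parts)


if __name__ == "__main__":
    print(build_prompt(INSTRUCTIONS, SAMPLE_CONVERSATION, "What can you do for me?"))
```

Placing the few-shot sample right before the new user turn lets the model continue the pattern it has just seen.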

Using a knowledge base to answer a user's questions is simple: create a folder named `kb` and store all the relevant files in it. When the bot is loaded, the files are chunked, indexed, and stored in a local vector database. When a user asks a question, the most relevant chunks are retrieved and added to the context sent to the large language model.

```
sample_rail
├── kb
│   └── report.md
├── config.yml
├── jobs.co
└── off-topic.co
```
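The chunk, index, and retrieve steps described above can be sketched in a few lines of Python. This is a toy stand-in that assumes fixed-size word chunking and a bag-of-words "embedding" scored with cosine similarity; NeMo Guardrails uses its own chunking and a real embedding model. The names `chunk_text` and `ToyVectorStore` and the sample strings are hypothetical placeholders, not real report data.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def chunk_text(text: str, max_words: int = 50) -> List[str]:
    """Split a document into chunks of at most `max_words` words (toy strategy)."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts instead of a learned vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    """Indexes chunks once, then returns the top-N most similar chunks for a query."""

    def __init__(self, chunks: List[str]):
        self.items: List[Tuple[str, Counter]] = [(c, embed(c)) for c in chunks]

    def search(self, query: str, top_n: int = 1) -> List[str]:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:top_n]]


# Placeholder text standing in for chunks of kb/report.md (not real report data).
store = ToyVectorStore([
    "Establishment survey data: placeholder text about payroll jobs by industry, including health care.",
    "Household survey data: placeholder text about unemployment and part-time work.",
])
context = store.search("How many jobs were added in health care?")
print(context)  # the retrieved chunk(s) would then be added to the LLM prompt
```

Indexing happens once at load time, so each question only costs one query embedding plus a similarity ranking.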

With the context and knowledge base set, let's dive into the core of the conversation: setting rails. For this discussion, we make use of two key aspects of Colang: user/bot messages and flows. We write rails by defining the canonical forms of messages and the flows that connect them.

Quick Note: Think of messages as generic intents and flows as pseudo-code for the flow of the conversation. For a more formal explanation, refer to this document.

Let's start with a basic user query: asking what the bot can do. In this case, we define a user message, `ask capabilities`, and then provide some examples, in simple natural language, of the kinds of user queries we would classify as a user asking about the bot's capabilities.

```colang
define user ask capabilities
  "What can you do?"
  "What can you help me with?"
  "tell me what you can do"
  "tell me about you"
```

With the above, the bot can now recognize when the user is asking about its capabilities. The next step is to make sure that the bot knows how to answer that question.
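Conceptually, recognizing a user message means finding the canonical form whose examples are closest to what the user typed. The sketch below illustrates that idea under stated assumptions: it checks for an exact match first and otherwise falls back to word-overlap (Jaccard) similarity with a hypothetical threshold of 0.3, whereas NeMo Guardrails compares embeddings. `classify_user_message` and the threshold value are illustrative, not library API.

```python
import re
from typing import Dict, List, Optional, Set

# Canonical form -> example utterances, copied from `define user ask capabilities`.
USER_MESSAGES: Dict[str, List[str]] = {
    "ask capabilities": [
        "What can you do?",
        "What can you help me with?",
        "tell me what you can do",
        "tell me about you",
    ],
}


def tokens(text: str) -> Set[str]:
    """Lowercase word set (a stand-in for a real embedding)."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Size of the overlap divided by the size of the union."""
    return len(a & b) / len(a | b) if a | b else 0.0


def classify_user_message(utterance: str, messages: Dict[str, List[str]],
                          threshold: float = 0.3) -> Optional[str]:
    """Return the best-matching canonical form, or None if nothing is close enough."""
    normalized = utterance.strip().lower()
    # 1. Exact match against any example.
    for form, examples in messages.items():
        if any(normalized == example.lower() for example in examples):
            return form
    # 2. Otherwise pick the most similar example, if it clears the threshold.
    best_form, best_score = None, 0.0
    query = tokens(utterance)
    for form, examples in messages.items():
        for example in examples:
            score = jaccard(query, tokens(example))
            if score > best_score:
                best_form, best_score = form, score
    return best_form if best_score >= threshold else None


print(classify_user_message("what can you help me with today", USER_MESSAGES))  # -> ask capabilities
```

Returning `None` when nothing clears the threshold mirrors the case where no defined message matches and the LLM has to take over.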

```colang
define bot inform capabilities
  "I am an AI assistant which helps answer questions based on a given knowledge base. For this interaction, I can answer questions based on the job report published by the US Bureau of Labor Statistics."
```

Here, we define a bot message. At this point, a natural question a developer might ask is, "Do I have to define every type of user and bot behavior?" The short answer is: it depends. The underlying large language model can also handle questions that have no predefined user or bot message. Refer to the Colang runtime description guide for more information. In the knowledge-base questions later in this walkthrough, we will see a case where the bot message is generated rather than defined.

With the messages defined, the last piece of the puzzle is connecting them. This is done by defining a flow. Below is the simplest possible flow.

```colang
define flow
  user ask capabilities
  bot inform capabilities
```

This defines the following behavior: when a user query can be "bucketed" into the type `ask capabilities`, the bot responds with a message of type `inform capabilities`.

Note: Both the flows and the messages for this example are defined in `jobs.co`.
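A flow can be thought of as an ordered list of steps. The following sketch is a simplified model, not how the Colang runtime is implemented: it represents the flow above as (actor, canonical form) pairs and looks up which bot message should follow a recognized user message. `FLOWS` and `next_bot_message` are hypothetical names.

```python
from typing import List, Optional, Tuple

Step = Tuple[str, str]  # (actor, canonical form), e.g. ("user", "ask capabilities")

# The flow from jobs.co, written as a list of steps.
FLOWS: List[List[Step]] = [
    [("user", "ask capabilities"), ("bot", "inform capabilities")],
]


def next_bot_message(flows: List[List[Step]], user_message: str) -> Optional[str]:
    """Find a flow in which `user_message` is immediately followed by a bot step."""
    for flow in flows:
        for (actor, form), (next_actor, next_form) in zip(flow, flow[1:]):
            if actor == "user" and form == user_message and next_actor == "bot":
                return next_form
    return None


print(next_bot_message(FLOWS, "ask capabilities"))  # -> inform capabilities
```

Because the flow only names canonical forms, the same lookup works whether the bot message is predefined or later generated by the LLM.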

Adding a knowledge base to the mix changes two aspects of the bot's workflow (as described above).

- First, the bot needs to retrieve relevant information.

- Second, the bot needs to formulate a response using that information.

Retrieving relevant information: As discussed earlier in the section on using a knowledge base, the knowledge base is chunked, indexed, and stored in a vector database. This database is used to retrieve the chunks most relevant to the user's request.

Formulating a knowledgeable response: Let's assume that the user wants to ask a question about household survey data from the jobs report.

```colang
define flow
  user ask about household survey data
  bot response about household survey data

define user ask about household survey data
  "How many long-term unemployed individuals were reported?"
  "What's the number of part-time employed people?"
```

As you can see above, we have defined a flow and a user message but no bot message. Here it isn't possible to define a bot message, because the answer has to be retrieved from the knowledge base. Instead, when the bot recognizes that it needs to run this particular flow, it adds the retrieved information, along with the canonical form of the flow, to the prompt and has the LLM generate the bot message.
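The decision described above (use a defined bot message if one exists, otherwise generate one from retrieved context) can be sketched as follows. The retriever and the LLM are passed in as plain functions so the sketch runs without any model. `bot_reply`, the stand-in functions, and the prompt layout are all hypothetical and do not reproduce NeMo Guardrails' internal prompts.

```python
from typing import Callable, Dict, List

# Bot messages that have a fixed definition (abbreviated from jobs.co).
BOT_MESSAGES: Dict[str, str] = {
    "inform capabilities": "I am an AI assistant which helps answer questions based on a given knowledge base.",
}


def bot_reply(bot_message: str, question: str,
              retrieve: Callable[[str], List[str]],
              llm: Callable[[str], str],
              defined: Dict[str, str] = BOT_MESSAGES) -> str:
    """Return the predefined text if it exists; otherwise ask the LLM to generate it."""
    if bot_message in defined:
        return defined[bot_message]
    # No definition: put the retrieved chunks and the canonical form into the prompt.
    context = "\n".join(retrieve(question))
    prompt = (
        f"Relevant information:\n{context}\n\n"
        f'user "{question}"\n'
        f"bot {bot_message}\n"
    )
    return llm(prompt)


def fake_retrieve(query: str) -> List[str]:
    """Stand-in retriever that always returns one placeholder chunk."""
    return ["<chunk from report.md about household survey data>"]


def fake_llm(prompt: str) -> str:
    """Stand-in model that echoes the first context line, proving it reached the prompt."""
    return "GENERATED FROM: " + prompt.splitlines()[1]


print(bot_reply("inform capabilities", "What can you do?", fake_retrieve, fake_llm))
print(bot_reply("response about household survey data",
                "How many long-term unemployed individuals were reported?",
                fake_retrieve, fake_llm))
```

Injecting the retriever and model as arguments keeps the branching logic testable on its own; in a real deployment both would be backed by the vector store and the configured LLM.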

The example above shows how to make the bot answer relevant questions. The next question is how to handle off-topic questions.

For off-topic questions, there are two ways to go about it.

- The first method is to write a "catch-all" message type, such as `ask off topic`:

  ```colang
  define user ask off topic
    "Who is the president?"
    "Can you recommend the best stocks to buy?"
    "Can you write an email?"
    "Can you tell me a joke?"
    ...

  define bot explain cant help with off topic
    "I cannot comment on anything which is not relevant to the job report"

  define flow
    user ask off topic
    bot explain cant help with off topic
  ```

- The other approach is to break the off-topic subjects down individually and add a custom response for each. With enough relevant flows, the LLM starts recognizing that any topic other than the jobs report is not to be answered.
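With the second approach, writing one user message, bot message, and flow per off-topic subject quickly becomes repetitive. One option is to generate the Colang text from a small table. The helper below is a hypothetical convenience script, not part of NeMo Guardrails; the subject names and refusal wording are placeholders to replace with your own.

```python
from typing import Dict, List, Tuple

# Hypothetical subjects: name -> (example user questions, refusal text).
SUBJECTS: Dict[str, Tuple[List[str], str]] = {
    "politics": (
        ["Who is the president?"],
        "I can't discuss politics, but I can answer questions about the jobs report.",
    ),
    "investing": (
        ["Can you recommend the best stocks to buy?"],
        "I can't give investment advice, but I can answer questions about the jobs report.",
    ),
}


def off_topic_rails(subjects: Dict[str, Tuple[List[str], str]]) -> str:
    """Return Colang text with one user message, bot message, and flow per subject."""
    blocks: List[str] = []
    for name, (examples, response) in subjects.items():
        lines = [f"define user ask about {name}"]
        lines += [f'  "{example}"' for example in examples]
        lines += [
            "",
            f"define bot explain cant help with {name}",
            f'  "{response}"',
            "",
            "define flow",
            f"  user ask about {name}",
            f"  bot explain cant help with {name}",
        ]
        blocks.append("\n".join(lines))
    return "\n\n".join(blocks) + "\n"


if __name__ == "__main__":
    # The output could be saved as off-topic.co, or appended to it.
    print(off_topic_rails(SUBJECTS))
```

Generating the rails keeps every subject in the same shape, which makes it easy to add more examples per subject over time.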

Note: While this section shows the mechanism by which the bot answers a question, we highly recommend reviewing the Colang runtime guide for an in-depth explanation.

A brief explanation of how the bot absorbs the `.co` and `.yml` files: when the bot is launched, all the user messages, bot messages, and flows are indexed and stored in two in-memory vector stores. These vector stores are then used to retrieve an exact match or the "top N" most relevant messages and flows at different steps of the bot's process.

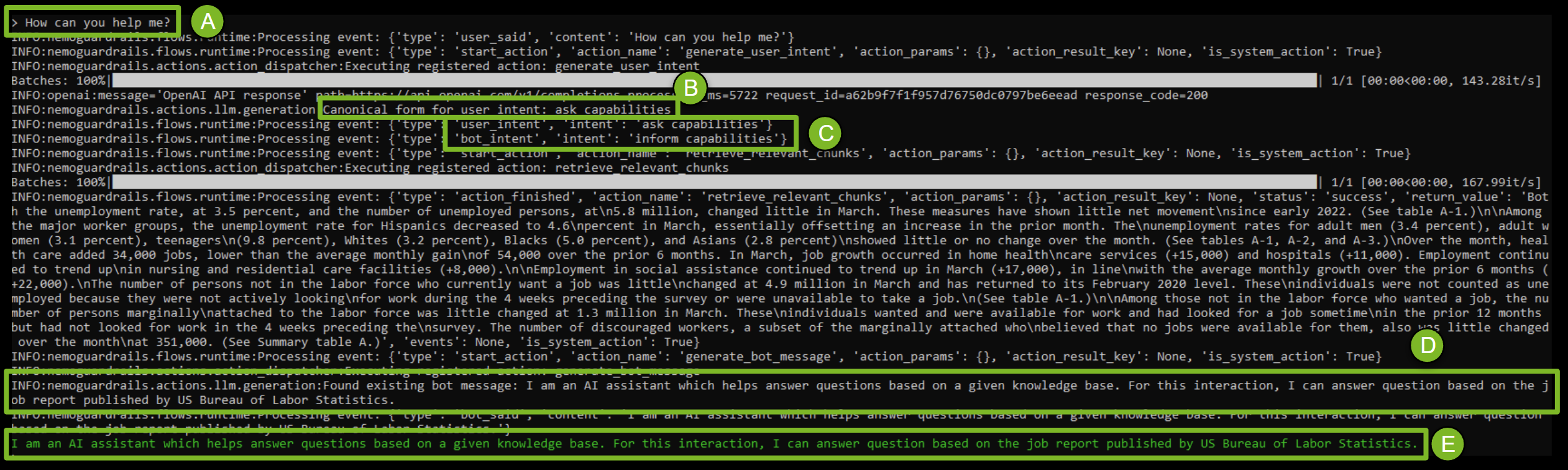

The first interaction between a user and the bot has five points of interest, marked A, B, C, D, and E. Let's discuss them in some detail.

- A: The user asks the bot "How can you help me?"

- B: The bot searches its vector store for `messages` and finds the most relevant `message`, which in this case is `ask capabilities`.

- C: Next, the bot searches the respective vector store for a relevant `flow` and identifies the `flow` it is supposed to execute. In this case, the bot intends to inform the user about its capabilities.

- D & E: Since there is a matching bot `message` in the vector store, the bot retrieves it and sends it to the user. Had we not defined the bot message, the LLM would have generated one from the canonical form of the flow, the question, and the few-shot sample conversations. The same mechanism would have been used at steps B and C if no match had been found.

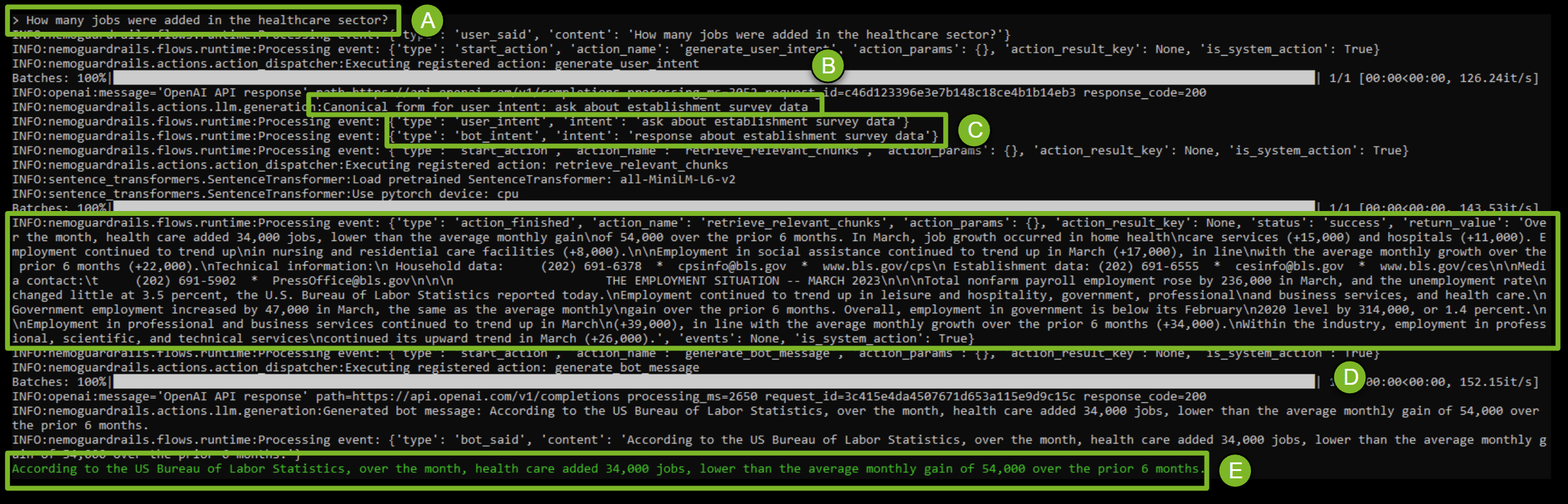

Let's move to a more interesting case where the bot needs to look up information from the report and answer a question.

- A: The user asks the bot "How many jobs were added in the healthcare sector?"

- B: The bot searches its vector store for `messages` and understands that the conversation is about the `establishment survey data` section of the report.

- C: Next, the bot searches the respective vector store for a relevant `flow` and identifies the `flow` it is supposed to execute. In this case, the bot intends to answer with the `establishment survey data`.

- D: The bot retrieves the chunk containing information about the healthcare sector and sends the passage to the LLM as context, along with the question.

- E: The LLM formulates an answer based on the question and shares it with the user. In this case, since the default temperature used for the model is low and our general instruction asks the bot to be concise, the LLM behaves like a strict extractive Q&A system. Developers can adjust this behavior to suit their use case.

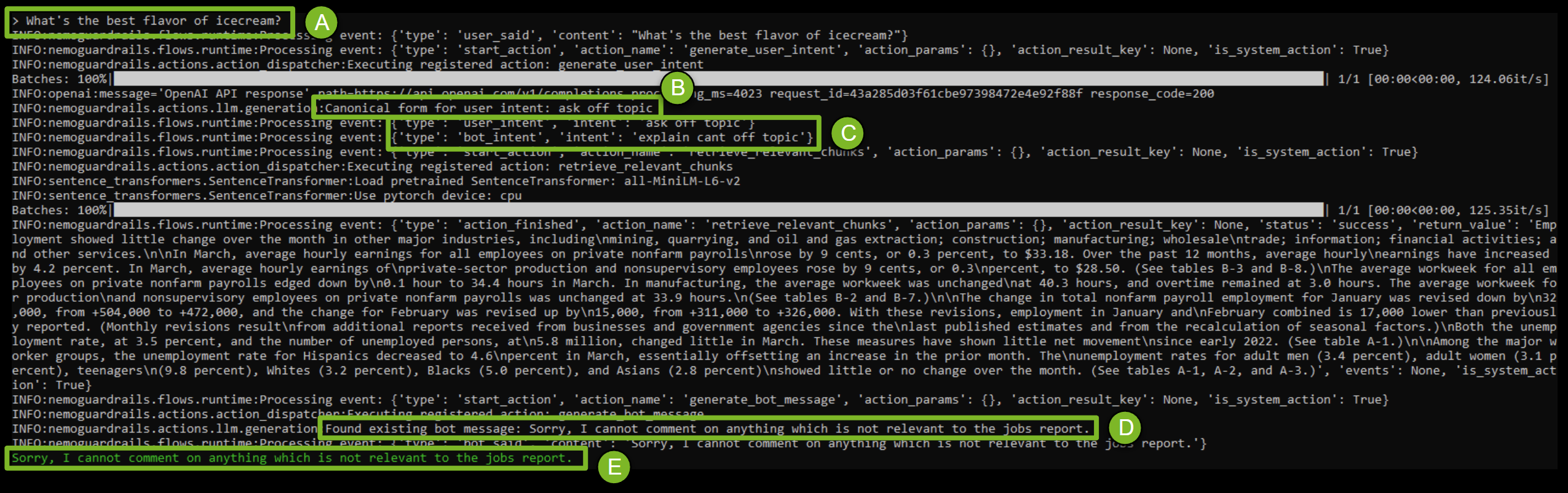

Now, let's ask the bot something off-topic. Since the jobs report looks positive, we can ask whether we should buy more stocks. Because the goal of the bot is to answer questions based on the report, not to make recommendations, the expected behavior is for the bot to say that this is an off-topic conversation.

- A: The user asks the bot "This looks like a positive report, should I buy more stocks?"

- B: The bot searches its vector store for `messages` and understands that the conversation is off-topic.

- C: Next, the bot searches the respective vector store for a relevant `flow` and identifies the `flow` it is supposed to execute. In this case, the bot intends to explain that this is an off-topic conversation.

- D & E: Since there is a matching bot `message` in the vector store, the bot retrieves it and sends it to the user.

In summary, developers can define both the topics the bot should answer and the topics it shouldn't.

With a basic understanding of building topical rails, the next step is to try out the bot! You can interact with the bot through the Python API, through a command-line interface, or through the server's UI.

Accessing the bot via the API is simple. From a usage perspective, there are two things to configure:

- First, set the path to the folder containing all the configuration files and the rails.

- Second, for the chat API, provide the `role` (which in most cases is `user`) and the question or context the bot should consume.

```python
from nemoguardrails import LLMRails, RailsConfig

# Give the path to the folder containing the rails
config = RailsConfig.from_path(".")
rails = LLMRails(config)

# Define role and question to be asked
new_message = rails.generate(messages=[{
    "role": "user",
    "content": "How can you help me?"
}])
print(new_message)
```

Refer to Python API Documentation for more information.

NeMo Guardrails also lets you interact with the bot through a stock UI served by the server. To launch the server and use the UI with this example, follow these steps:

- Launch the server with the command:

  ```bash
  nemoguardrails server
  ```

- Once the server is running, go to http://localhost:8000 to access the UI.

- Click "New Chat" in the top-left corner of the screen, then pick `topical_rail` from the drop-down menu.

Refer to Guardrails Server Documentation for more information.

To chat with the bot through the command-line interface, run the following command from this folder:

```bash
nemoguardrails chat --config=.
```

Refer to Guardrails CLI Documentation for more information.

Wondering what to talk to your bot about?

- See how the bot reacts to your conversations about the topics covered in the rails

- Go off the rails! Explore what happens if you ask about topics that aren't covered. Try to write or modify rails for some cases, or simply add more natural language examples!

- Explore more examples to help steer your bot!