US Department of Transportation Joint Program Office (JPO) Operational Data Environment (ODE)

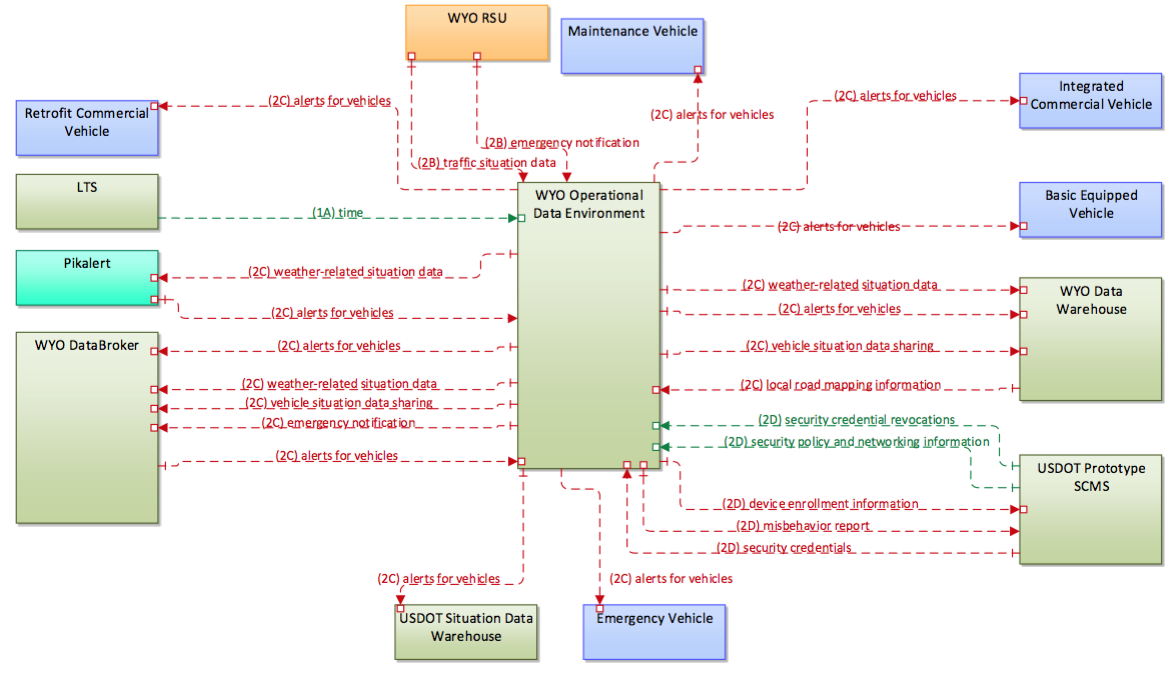

In the context of intelligent transportation systems (ITS), an Operational Data Environment is a real-time data acquisition and distribution software system. It processes and routes data from Connected-X devices, including connected vehicles (CV), personal mobile devices, and infrastructure components and sensors, to subscribing applications. These applications support the operation, maintenance, and use of the transportation system, as well as related research and development efforts.

This project is currently working with the Wyoming Department of Transportation (WYDOT) as one of the Connected Vehicle (CV) Pilot sites to showcase the value of and spur the adoption of CV Technology in the United States.

As one of the three selected pilots, WYDOT is focusing on improving safety and mobility by creating new ways to communicate road and travel information to commercial truck drivers and fleet managers along the 402 miles of Interstate 80 (I-80 henceforth) in the State. For the pilot project, WYDOT will work in a planning phase through September 2016. The deployment process will happen in the second phase (ending in September 2017) followed by an 18-month demonstration period in the third phase (starting in October 2017).

The current project goals for the ODE have been developed specifically for the use case of WYDOT. Additional capabilities and system functions are planned for later releases.

- Collect CV Data: Connected vehicle data from the field may be collected from vehicle OBUs directly or through RSUs. Data collected include Basic Safety Messages Part I and Part II, event logs, and other probe data (weather sensors, etc.). These messages are ingested into the operational data environment (ODE), where the data is then channeled to other subsystems.

- Support Data Brokerage: The WYDOT Data Broker is a sub-system responsible for interfacing with various WYDOT Transportation Management Center (TMC) systems, gathering information on current traffic conditions, incidents, construction, operator actions, and road conditions. The data broker then distributes information from PikAlert, the ODE, and the WYDOT interfaces based on business rules. The data broker develops a traveler information message (TIM) for segments on I-80 and provides event or condition information back to the WYDOT interfaces.

- Distribute traveler information messages (TIM): The data broker distributes the TIM to the operational data environment (ODE), which then communicates the message back to the OBUs, RSUs, and the situational data warehouse (SDW).

- Store data: Data generated by the system (both from the field and the back-office sub-systems) are stored in the WYDOT data warehouse.

- ODE-42: Cleaned up the Kafka adapter and made it work with the Kafka broker. Integrated Kafka. Kept STOMP as the high-level WebSocket API protocol.

- ODE-36: Docker, docker-compose, Kafka, and ODE integration

- Main repository on GitHub (public)

- https://github.com/usdot-jpo-ode/jpo-ode

- git@github.com:usdot-jpo-ode/jpo-ode.git

- Private repository on BitBucket

- https://<bitbucketusername>@bitbucket.org/usdot-jpo-ode/jpo-ode-private.git

- git@bitbucket.org:usdot-jpo-ode/jpo-ode-private.git

https://usdotjpoode.atlassian.net/secure/Dashboard.jspa

https://usdotjpoode.atlassian.net/wiki/

https://travis-ci.org/usdot-jpo-ode/jpo-ode

To allow Travis to run your build when you push changes to your public fork of the jpo-ode repository, you must define the following secure environment variable using the Travis CLI (https://github.com/travis-ci/travis.rb).

Run:

travis login --org

Enter your personal GitHub account credentials and then run this:

travis env set PRIVATE_REPO_URL_UN_PW 'https://<bitbucketusername>:<password>@bitbucket.org/usdot-jpo-ode/jpo-ode-private.git' -r <travis username>/jpo-ode

The login information will be saved and this needs to be done only once.

To allow Sonar to run, a personal key must be added with the following command. The key can be obtained from the JPO-ODE development team.

travis env set SONAR_SECURITY_TOKEN <key> -pr <user-account>/<repo-name>

https://sonarqube.com/dashboard/index?id=us.dot.its.jpo.ode%3Ajpo-ode%3Adevelop

The following instructions describe the procedure to fetch, build and run the application.

Clone the source code from GitHub and BitBucket repositories using Git commands:

git clone https://github.com/usdot-jpo-ode/jpo-ode.git

git clone https://<bitbucketusername>@bitbucket.org/usdot-jpo-ode/jpo-ode-private.git

NOTE: If running on Windows, make sure that your global git config is set not to convert end-of-line characters during checkout. This is important for building Docker images correctly.

git config --global core.autocrlf false

To build the application, use the Maven command line.

Step 1. Navigate to the root directory of the jpo-ode-private project:

cd jpo-ode-private/

mvn clean

mvn install

It is important to run mvn clean first and then mvn install, because mvn clean installs the required OSS jar file in your local Maven repository.

Step 2. Navigate to the root directory of the jpo-ode project:

If you wish to change the application properties, such as changing the location of the upload service via the ode.uploadLocation property or setting ode.kafkaBrokers to something other than $DOCKER_HOST_IP:9092, modify the jpo-ode-svcs\src\main\resources\application.properties file as desired.
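Editing the properties file by hand works fine; for scripted setups, the change can also be automated. The following is a minimal sketch, assuming a POSIX shell with awk available. The helper name `set_prop` and the value `localhost:9092` are illustrative assumptions and are not part of jpo-ode; only the property names and the file path come from this README.

```shell
#!/bin/sh
# Sketch: set a key in a Java .properties file, replacing an existing
# entry or appending a new one. set_prop is a hypothetical helper.
# Caveat: awk -v interprets backslash escapes in the value, so paths
# containing backslashes need extra care.
set_prop() {
  key=$1
  value=$2
  file=$3
  [ -f "$file" ] || { echo "no such file: $file" >&2; return 1; }
  tmp=$(mktemp) || return 1
  awk -v k="$key" -v v="$value" '
    BEGIN { FS = "="; found = 0 }
    {
      name = $1
      gsub(/^[ \t]+|[ \t]+$/, "", name)   # trim spaces around the key
      if (name == k) { print k "=" v; found = 1 } else { print }
    }
    END { if (!found) print k "=" v }
  ' "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
  # Write back in place so the original file permissions are preserved.
  cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Example usage (localhost:9092 is an assumed broker address):
#   set_prop ode.kafkaBrokers localhost:9092 \
#     jpo-ode-svcs/src/main/resources/application.properties
```

Commented-out entries (lines starting with `#`) are left untouched, so an active entry is appended after them rather than uncommenting the old one.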

Run the following commands to build the application containers.

cd jpo-ode (or cd ../jpo-ode if you are in the jpo-ode-private directory)

mvn clean install

docker-compose build

Alternatively, run the build script.

To run the application, from jpo-ode directory:

docker-compose up --no-recreate -d

NOTE: It's important to run docker-compose up with the --no-recreate option. Otherwise you may run into this issue: wurstmeister/kafka-docker#100.

Alternatively, run the deploy script.

Check the deployment by running docker-compose ps. You can start and stop services using the docker-compose start and docker-compose stop commands.

If using the multi-broker docker-compose file, you can change the scaling by running docker-compose scale <service>=n where service is the service you would like to scale and n is the number of instances. For example, docker-compose scale kafka=3.

To build and run the application in one step, run the build-and-deploy script.

You can run the application on your local machine while other services are deployed on a host environment. To do so, run the following:

docker-compose start zookeeper kafka

java -jar jpo-ode-svcs/target/jpo-ode-svcs-0.0.1-SNAPSHOT.jar

You should be able to access the jpo-ode UI at localhost:8080.

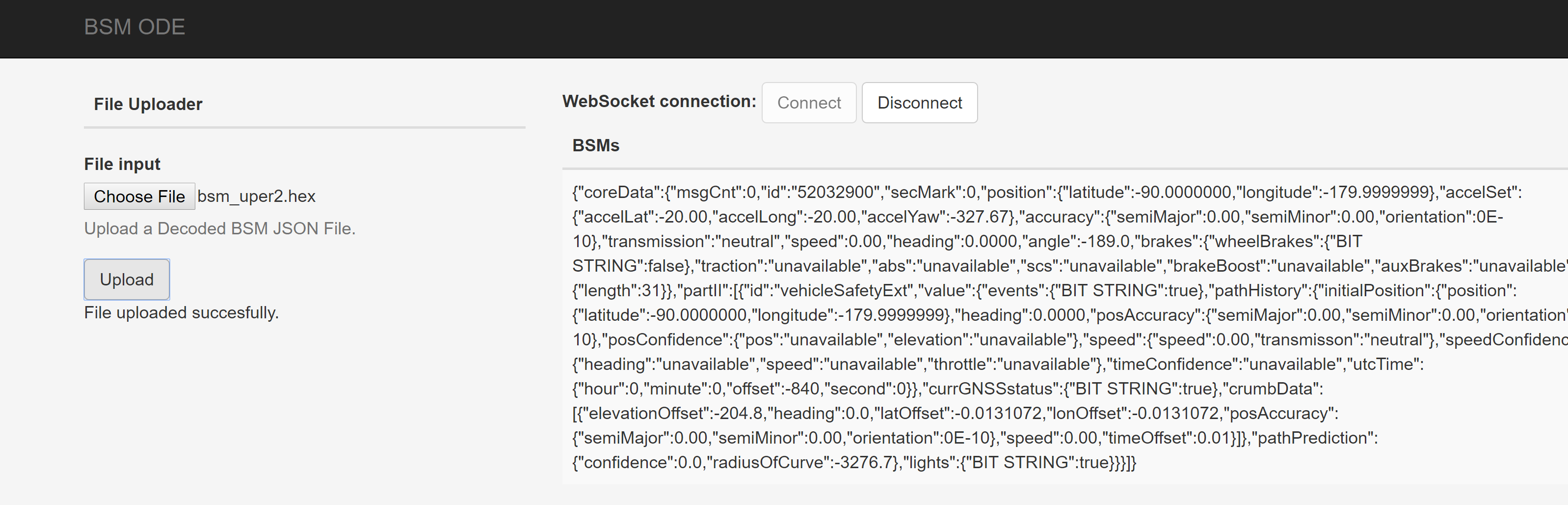

Upload a file containing BSM messages in ASN.1 Hexadecimal encoded format. For example, a file containing the following record:

401480CA4000000000000000000000000000000000000000000000000000000000000000F800D9EFFFB7FFF00000000000000000000000000000000000000000000000000000001FE07000000000000000000000000000000000001FF0

- Press the Connect button to connect to the ODE WebSocket service.

- Press the Choose File button to select the file with the ASN.1 Hex BSM record in it.

- Press the Upload button to upload the file to ODE.
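Because the upload expects hex-encoded text, it can be useful to sanity-check a file before uploading it. The following is a minimal sketch, assuming a POSIX shell; `check_hex_file` is a hypothetical helper that is not part of jpo-ode, and it only checks the character set, not whether the content is a valid ASN.1 BSM.

```shell
#!/bin/sh
# Sketch: verify that a file contains only hexadecimal characters
# (plus line breaks) before uploading it to the ODE.
# check_hex_file is a hypothetical helper, not part of jpo-ode.
check_hex_file() {
  file=$1
  # Exit code 2: file is missing or empty.
  [ -s "$file" ] || { echo "missing or empty file: $file" >&2; return 2; }
  # Strip carriage returns, then look for any non-hex character.
  if tr -d '\r' < "$file" | grep -q '[^0-9A-Fa-f]'; then
    echo "non-hex content found in $file" >&2
    return 1
  fi
  return 0
}

# Example usage (bsm.txt is a placeholder file name):
#   check_hex_file bsm.txt && echo "bsm.txt contains only hex characters"
```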

Another way to upload data to the ODE is to copy the file to the location specified by the ode.uploadLocation property. The default location is the uploads directory directly under the directory from which the ODE is launched.
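The copy-based upload can be scripted. The following is a minimal sketch, assuming a POSIX shell and that it is run from the directory where the ODE was launched; `stage_upload` is a hypothetical helper, and the file and directory names in the usage comments are placeholders.

```shell
#!/bin/sh
# Sketch: copy a file into the ODE upload directory instead of using the UI.
# stage_upload is a hypothetical helper; "uploads" mirrors the default
# ode.uploadLocation, which is relative to the launch directory.
stage_upload() {
  src=$1
  dest=${2:-uploads}
  [ -f "$src" ] || { echo "no such file: $src" >&2; return 1; }
  # Create the target directory if it does not exist yet.
  mkdir -p "$dest" || return 1
  cp "$src" "$dest/"
}

# Example usage (bsm.txt and /data/in are placeholder names):
#   stage_upload bsm.txt            # copies into ./uploads
#   stage_upload bsm.txt /data/in   # for a custom ode.uploadLocation
```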

The result of uploading and decoding the message is displayed on the UI screen.

Install the IDE of your choice:

- Eclipse: https://eclipse.org/

- STS: https://spring.io/tools/sts/all

- IntelliJ: https://www.jetbrains.com/idea/