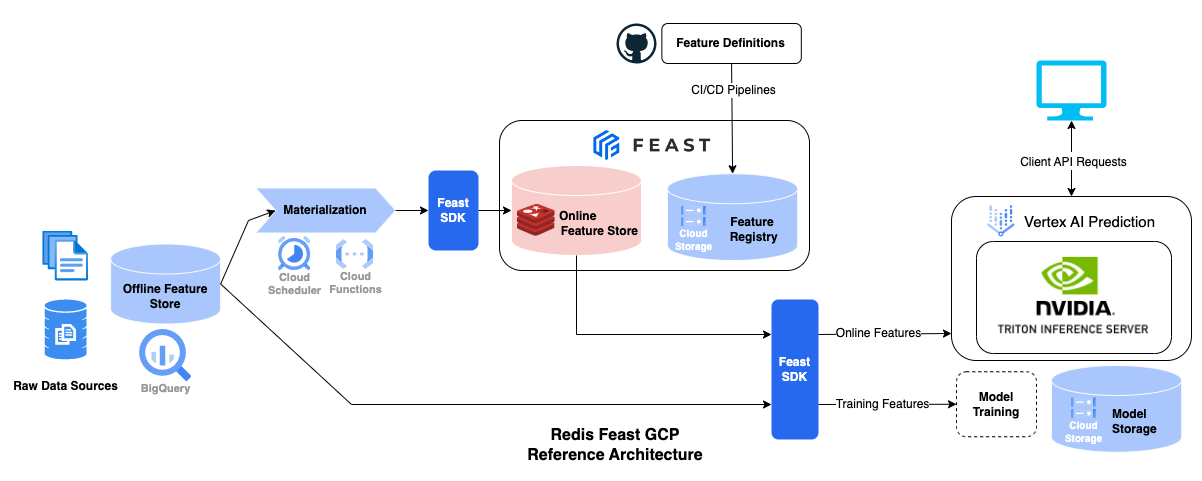

An end-to-end machine learning feature store reference architecture using Feast and Redis Enterprise (as the Online Feature Store) deployed on Google Cloud Platform.

This prototype is a reference architecture. All components are containerized, and various customizations and optimizations might be required before running in production for your specific use case.

To demonstrate the value of a Feature Store, we provide a demo application that forecasts the counts of administered COVID-19 vaccine doses (by US state) for the next week.

The Feature Store fuses weekly Google Search Trends data with lagging vaccine dose counts. Both datasets are open source and freely available to the public.
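To make the idea of fusing the two datasets concrete, here is a minimal Python sketch. It is not the repo's actual pipeline. It joins each weekly search-trend row with the previous week's dose count for the same state. The field names (`state`, `week`, `search_interest`, `doses`, `lag_1_doses`) are assumptions made up for illustration.

```python
def build_feature_rows(trends, doses):
    """Join weekly trend rows with the previous week's dose count per state.

    trends: list of dicts with keys "state", "week" (int), "search_interest"
    doses:  list of dicts with keys "state", "week" (int), "doses"
    Trend rows that have no prior-week dose count are skipped.
    """
    # Index dose counts by (state, week) for constant-time lookups.
    doses_by_key = {(d["state"], d["week"]): d["doses"] for d in doses}

    rows = []
    for t in sorted(trends, key=lambda r: (r["state"], r["week"])):
        lagged = doses_by_key.get((t["state"], t["week"] - 1))
        if lagged is None:
            continue  # no lagging value available for this week
        rows.append(
            {
                "state": t["state"],
                "week": t["week"],
                "search_interest": t["search_interest"],
                "lag_1_doses": lagged,
            }
        )
    return rows
```

Each output row holds only values that were known before the week being forecast, which is the property a lagging feature needs.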

The full system will include:

- GCP infrastructure setup and teardown

- Offline (BigQuery) and Online (Redis Enterprise) Feature Stores using Feast

- Model serving in Vertex AI + NVIDIA Triton Inference Server

The architecture takes advantage of GCP managed services in combination with Feast, Redis, and Triton.

- Feast feature definitions in a GitHub repository (here).

- Feature registry persisted in a Cloud Storage bucket with Feast (Cloud Build for CI/CD is coming soon).

- Offline feature data stored in BigQuery as the source of record.

- Daily Cloud Scheduler tasks to trigger a materialization Cloud Function that will migrate the latest feature updates to the Online feature store.

- Model serving with Vertex AI Prediction using a custom NVIDIA Triton Inference Server container.

- Online feature retrieval from Redis (low latency) with Feast.
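The materialization and online retrieval steps listed above can be sketched with the Feast Python SDK. This is a rough illustration and not the code in this repo. The feature view name, feature names, and the `state` entity key are hypothetical, and `repo_path` is assumed to point at a directory containing a `feature_store.yaml`.

```python
from datetime import datetime, timezone

# Hypothetical names; the real ones live in the Feast feature definitions.
FEATURE_VIEW = "vaccine_search_trends"
FEATURE_NAMES = ["lag_1_vaccine_interest", "lag_1_weekly_doses"]


def feature_refs(view=FEATURE_VIEW, names=FEATURE_NAMES):
    """Build "view:feature" reference strings, the format Feast accepts."""
    return [f"{view}:{name}" for name in names]


def materialize_latest(repo_path="."):
    """Load feature values newer than the last run into the online store."""
    from feast import FeatureStore  # lazy import; requires `pip install feast`

    store = FeatureStore(repo_path=repo_path)
    store.materialize_incremental(end_date=datetime.now(timezone.utc))


def fetch_online_features(state, repo_path="."):
    """Read the latest feature values for one state from the online store."""
    from feast import FeatureStore

    store = FeatureStore(repo_path=repo_path)
    response = store.get_online_features(
        features=feature_refs(),
        entity_rows=[{"state": state}],  # "state" is an assumed entity key
    )
    return response.to_dict()
```

In this architecture, something like `materialize_latest` would run inside the scheduled Cloud Function, while something like `fetch_online_features` would run at prediction time.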

By the end of this tutorial, you will have all components running in your GCP project.

The demo contains several smaller apps organized by Docker Compose. Below we cover the prerequisites and setup tasks.

Install Docker on your machine. In our opinion, Docker Desktop is the best option thanks to its ease of use.

To run this in Google Cloud, you will need a GCP project. The steps are:

- If you don't have one, create a new GCP project.

- Acquire a GCP service account credential file and download it to your machine, somewhere safe.

  - IAM -> Service Account -> Create service account

- Create a new key for that service account.

This demo provisions GCP infrastructure from your localhost, so it needs a few local environment variables. These are all handled by Docker and a .env file.

Make the env file and enter values as prompted. See template below:

$ make env

PROJECT_ID={gcp-project-id} (project-id NOT project-number)

GCP_REGION={preferred-gcp-region}

GOOGLE_APPLICATION_CREDENTIALS={local-path-to-gcp-creds}

SERVICE_ACCOUNT_EMAIL={your-gcp-svc-account-email}

BUCKET_NAME={your-gcp-bucket-name} (must be globally unique)
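Before moving on, it can help to confirm that the .env file contains every variable from the template above. The following is a minimal sketch under that assumption, not a tool shipped with this repo. The helper names `parse_env` and `missing_keys` are hypothetical.

```python
# Variable names taken from the .env template above.
REQUIRED_KEYS = (
    "PROJECT_ID",
    "GCP_REGION",
    "GOOGLE_APPLICATION_CREDENTIALS",
    "SERVICE_ACCOUNT_EMAIL",
    "BUCKET_NAME",
)


def parse_env(text):
    """Parse KEY=VALUE lines, ignoring blank lines and # comments."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip()
    return values


def missing_keys(values, required=REQUIRED_KEYS):
    """Return required keys that are absent or empty, in template order."""
    return [key for key in required if not values.get(key)]
```

For example, reading the file with `open(".env").read()` and passing the parsed result to `missing_keys` returns an empty list when the file is complete.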

If you are bringing an existing Redis Enterprise instance from Redis Enterprise Cloud, add the Redis instance reference to the env file. Make sure to record the public endpoint {host}:{port} and password. There's a 30MB Free Tier, which is enough for this demo.

cat <<EOF >> .env

REDIS_CONNECTION_STRING=<host:port>

REDIS_PASSWORD=<password>

EOF

Then, skip to the Build Containers section.

If you want to provision a Redis Enterprise database instance using your existing Redis Enterprise subscription in Google Cloud Marketplace, with the Make utility in this repo, follow the steps below:

- Collect the Redis Enterprise Cloud Access Key and Secret Key in the Redis Enterprise Console

- Add the keys collected in step 1 to the env file as follows:

cat <<EOF >> .env

REDISCLOUD_ACCESS_KEY=<Redis Enterprise Cloud Access Key>

REDISCLOUD_SECRET_KEY=<Redis Enterprise Cloud Secret Key>

REDIS_SUBSCRIPTION_NAME=<Name of your Redis Enterprise Subscription>

REDIS_SUBSCRIPTION_CIDR=<Deployment CIDR for your Redis Enterprise Subscription cluster, ex. 192.168.88.0/24>

EOF

- Run the following command to deploy your Redis Enterprise database instance

$ make tf-deploy

Assuming all above steps are done, build the docker images required to run the different setup steps.

From the root of the project, run:

$ make docker

TIP: Disable docker buildkit for Mac machines (if you have trouble with this step)

export DOCKER_BUILDKIT=0

The script will build a base Docker image and then build separate images for each setup step: setup and jupyter.

This will take some time, so grab a cup of coffee.

The provided Makefile wraps bash and Docker commands to make it super easy to run. This particular step:

- Provisions GCP infrastructure

- Generates the feature store

- Deploys the model with Triton Inference Server on Vertex AI

$ make setup

At the completion of this step, most of the architecture above will be deployed in your GCP project.

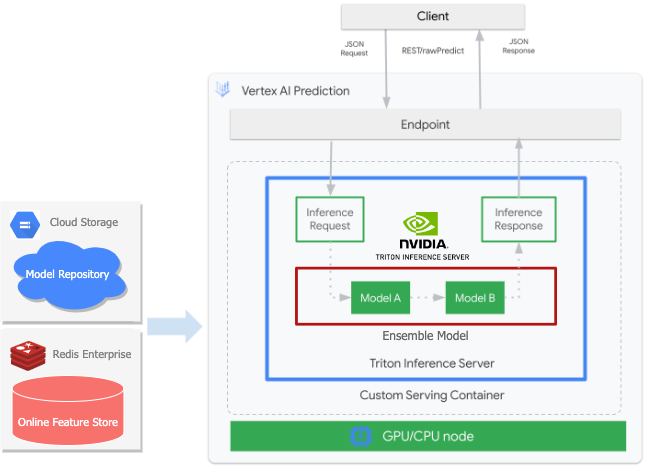

As noted above, Vertex AI allows you to deploy a custom serving container as long as it meets baseline requirements. Additionally, we can use a popular serving framework like NVIDIA's Triton Inference Server. NVIDIA and GCP already did the integration legwork so that we can use them together.

In this architecture, a Triton ensemble model combines a Python backend step (for Feast feature retrieval) and a FIL backend step (for the XGBoost model). It's packaged, hosted, and served by Vertex AI Prediction.
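Because the ensemble's first step looks up features itself, a client only needs to send the entity it wants a forecast for. Triton's HTTP API follows the KServe v2 inference protocol, so a request body might look like the sketch below. The input tensor name `state` and its shape are assumptions; the real values are defined in the ensemble's model configuration.

```python
import json


def build_triton_request(state, input_name="state"):
    """Build a KServe v2 inference request body with one string input.

    `input_name` is a hypothetical tensor name used for illustration.
    """
    return {
        "inputs": [
            {
                "name": input_name,
                "shape": [1, 1],      # a batch of one, with one element
                "datatype": "BYTES",  # v2 protocol datatype for strings
                "data": [state],
            }
        ]
    }


# Serialize to the JSON body an HTTP client would send.
body = json.dumps(build_triton_request("California"))
```

A body like this would typically be sent through the Vertex AI endpoint's raw prediction route, which passes the payload to the serving container.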

With the Feature Store in place, utilize the following add-ons to perform different tasks as desired.

This repo provides several helper/tutorial notebooks for working with Feast, Redis, and GCP. Open a Jupyter session to explore these resources:

$ make jupyter

Clean up GCP infrastructure and tear down the Feature Store.

$ make teardown

If you ran the make tf-deploy command to provision a Redis Enterprise database instance, you'll also need to run "$ make tf-destroy" to remove the database instance.

Besides running the teardown container, you can run docker compose down periodically after shutting down containers to clean up excess networks and unused Docker artifacts.