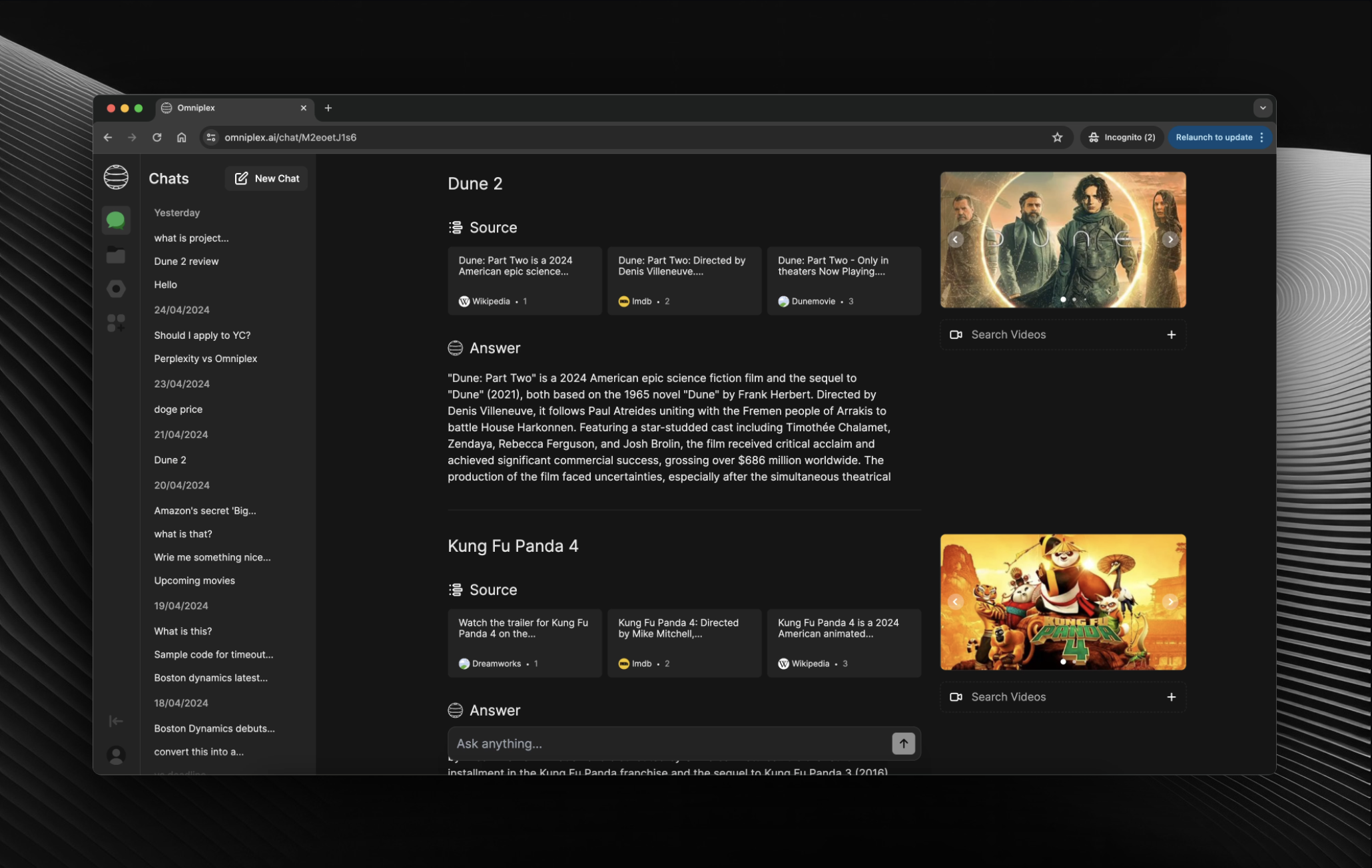

Open-Source Perplexity

Website · Discord · Reddit

Our focus is on establishing core functionality and essential features. As we continue to develop Omniplex, we are committed to implementing best practices, refining the codebase, and introducing new features to enhance the user experience.

To run the project locally, modify the code in the Chat component to use the section marked `// Development Code`.

- Fork & Clone the repository

```bash
git clone git@github.com:[YOUR_GITHUB_ACCOUNT]/omniplex.git
```

- Install the dependencies

```bash
yarn
```

- Fill out secrets in `.env.local`

```bash
BING_API_KEY=
OPENAI_API_KEY=
OPENWEATHERMAP_API_KEY=
ALPHA_VANTAGE_API_KEY=
FINNHUB_API_KEY=
```

- Run the development server

```bash
yarn dev
```

- Open http://localhost:3000 in your browser to see the app.
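A missing key in `.env.local` tends to surface only when the matching feature is first used, so it can help to check the keys up front. The following is a minimal sketch, not part of Omniplex: the helper name `findMissingKeys` is hypothetical, and the key list simply mirrors the `.env.local` entries above.

```typescript
// Keys mirrored from the .env.local listing above.
const REQUIRED_KEYS = [
  "BING_API_KEY",
  "OPENAI_API_KEY",
  "OPENWEATHERMAP_API_KEY",
  "ALPHA_VANTAGE_API_KEY",
  "FINNHUB_API_KEY",
] as const;

// Hypothetical helper: returns the names of keys that are unset or blank.
function findMissingKeys(
  env: Record<string, string | undefined>,
  keys: readonly string[] = REQUIRED_KEYS,
): string[] {
  return keys.filter((key) => {
    const value = env[key];
    return value === undefined || value.trim() === "";
  });
}

// Example use in a Node.js script (assumption: run before `yarn dev`):
//   const missing = findMissingKeys(process.env);
//   if (missing.length > 0) console.warn("Missing keys:", missing.join(", "));
```

Passing the environment in as an argument keeps the helper free of any dependency on `process`, so the same check can run against any key/value map.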

The current way of adding a plugin is hacky but very easy to implement. We will be adding a more robust way to add plugins in the future. In the meantime, feel free to use the sample plugin we have added as a reference.

- Update the types in `types.ts` to include the new plugin data types.
- Update the `tools` api in `api` to include the new plugin function call.
- Update the `api.ts` in `utils` file to include the new plugin data.
- Update the `chatSlice.ts` in `store` to include the new plugin reducer.
- Create a new folder in the `components` directory for the UI of the plugin.
- Update the `chat.tsx` to handle the new plugin in `useEffect`.
- Call the plugin function and return the data as props to source.
- Update the `source.ts` to use the plugin UI.
- Lastly, update the `data.ts` in `utils` to show in the plugin tab.
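To make the first few steps more concrete, here is a rough sketch of what the pieces for a new plugin might look like. Everything in it is an assumption for illustration: the `dictionary` plugin, the `DictionaryData` and `Chat` types, and the `addDictionaryResults` function are hypothetical and do not reflect the actual Omniplex source. The tool object follows the general JSON-schema shape used by OpenAI function calling, and the reducer is written as a plain function rather than a Redux slice so that it stays self-contained.

```typescript
// Step 1 (types.ts): hypothetical data shape returned by the plugin.
type DictionaryData = {
  word: string;
  definitions: string[];
};

// Step 2 (tools api): hypothetical tool description in the
// OpenAI function-calling format.
const dictionaryTool = {
  type: "function",
  function: {
    name: "dictionary",
    description: "Look up the definition of a word.",
    parameters: {
      type: "object",
      properties: {
        word: { type: "string", description: "Word to define" },
      },
      required: ["word"],
    },
  },
} as const;

// Step 4 (chatSlice.ts): reducer-style update written as a pure function.
// A real Redux slice would wrap similar logic in its own reducer.
type Chat = {
  id: string;
  mode: string;
  dictionaryResults?: DictionaryData;
};

function addDictionaryResults(
  chats: Chat[],
  payload: { chatId: string; data: DictionaryData },
): Chat[] {
  // Attach the plugin data to the matching chat and leave the rest untouched.
  return chats.map((chat) =>
    chat.id === payload.chatId
      ? { ...chat, mode: "dictionary", dictionaryResults: payload.data }
      : chat,
  );
}
```

The remaining steps (the UI folder, `chat.tsx`, `source.ts`, and `data.ts`) depend on the existing components, so the sample plugin in the repository is the better guide for those.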

- Add the new LLM apiKey in env and add the related npm package.

```bash
ANTHROPIC_API_KEY=******
```

- Update the `chat` in `api`

```ts
import Anthropic from "@anthropic-ai/sdk";
// AnthropicStream (rather than OpenAIStream) parses Anthropic's streaming events.
import { AnthropicStream, StreamingTextResponse } from "ai";

const anthropic = new Anthropic({
  apiKey: process.env.ANTHROPIC_API_KEY,
});

export const runtime = "edge";

export async function POST(req: Request) {
  // frequency_penalty and presence_penalty are OpenAI-specific parameters;
  // the Anthropic Messages API does not accept them, so they are not forwarded.
  const { messages, model, temperature, max_tokens, top_p } = await req.json();

  const response = await anthropic.messages.create({
    stream: true,
    model,
    temperature,
    max_tokens,
    top_p,
    messages,
  });

  const stream = AnthropicStream(response);
  return new StreamingTextResponse(stream);
}
```

- Update the `data` in `utils`

```ts
export const MODELS = [
  { label: "Claude 3 Haiku", value: "claude-3-haiku-20240307" },
  { label: "Claude 3 Sonnet", value: "claude-3-sonnet-20240229" },
  { label: "Claude 3 Opus", value: "claude-3-opus-20240229" },
];
```

We recently transitioned from the pages directory to the app directory, which involved significant changes to the project structure and architecture. As a result, you may encounter some inconsistencies or rough edges in the codebase.

- Images & Videos for Search

- Upload for Vision Model

- Chat History for Users

- Shared Chats & Fork

- Settings for LLMs

- Custom OG Metadata

- Faster API Requests

- Allow Multiple LLMs

- Plugin Development

- Function Calling with Gen UI

- Language: TypeScript

- Frontend Framework: React

- State Management: Redux

- Web Framework: Next.js

- Backend and Database: Firebase

- UI Library: NextUI & Tremor

- CSS Framework: TailwindCSS

- AI SDK: Vercel AI SDK

- LLM: OpenAI

- Search API: Bing

- Weather API: OpenWeatherMap

- Stocks API: Alpha Vantage & Finnhub

- Dictionary API: Wordnik & Free Dictionary API

- Hosting & Analytics: Vercel

- Authentication, Storage & Database: Firebase

We welcome contributions from the community! If you'd like to contribute to Omniplex, please follow these steps:

- Fork the repository

- Create a new branch for your feature or bug fix

- Make your changes and commit them with descriptive messages

- Push your changes to your forked repository

- Submit a pull request to the main repository

Please ensure that your code follows our coding conventions and passes all tests before submitting a pull request.

This project is licensed under the AGPL-3.0 license.

If you have any questions or suggestions, feel free to reach out to us at Contact.

Happy coding! 🚀