
Discussion: should we use divide-and-conquer reduce for instruction-level parallelism? #748

Comments

If this gives a 3x speedup which we can't get another way, that does seem very worthwhile. It's also worth comparing to #494, which attacks the problem of reductions and SIMD rather differently.

In #702 I also wondered whether using a parallel formulation of […] Timings: […] so perhaps there's a benefit in this approach for […]. It's worse for […]

Very interesting! I must confess that I was a bit skeptical about whether the parallel prefix is useful for […]
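For context, a parallel prefix (scan) computes all partial results of an associative operation in about log2(N) dependent rounds instead of N - 1. The sketch below uses the Hillis-Steele formulation on a plain tuple standing in for an `SVector`; `prefix_scan` is a hypothetical name, and this is not necessarily how the tree version of `cumsum` discussed in this thread is written.

```julia
# Hillis-Steele inclusive scan (illustrative sketch, hypothetical helper name).
# `op` must be associative for the result to match a sequential cumsum.
function prefix_scan(op, t::NTuple{N,Any}) where {N}
    x = t
    d = 1
    while d < N
        # In this round, element i (for i > d) combines with element i - d of the
        # previous round. These N - d combinations are independent of each other.
        x = let prev = x, d = d
            ntuple(i -> i > d ? op(prev[i - d], prev[i]) : prev[i], N)
        end
        d *= 2
    end
    return x
end

prefix_scan(+, (1, 2, 3, 4, 5))   # (1, 3, 6, 10, 15)
```

The trade-off is more total work: for N = 16 this performs 49 combinations versus 15 for a sequential scan, but in only 4 dependent rounds.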

My thought was that for a simple […]

Doesn't it need at least […]

That's exactly the point: reducing data dependencies is like getting "additional processors for free". My understanding is that pipelining lets the hardware execute more instructions per clock cycle when there are no data dependencies. If a value from the previous instruction hasn't been computed and written to a register yet, it may stall the next instruction, which needs to read from that register. So the throughput may be cut several-fold. This is mitigated by hardware tricks like out-of-order execution, so I'm not sure how to measure it accurately in isolation.
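A common way to shorten the dependency chain without building a tree is to keep several independent accumulators. The sketch below contrasts a single-accumulator loop with a four-accumulator loop; both helper names are hypothetical and the choice of four accumulators is arbitrary. For floating-point data the two results may differ in the last bits, because the additions are reassociated.

```julia
# One accumulator: every addition needs the previous value of `acc`,
# so the additions form a single dependency chain.
function sum_one_acc(xs::AbstractVector{T}) where {T}
    acc = zero(T)
    for x in xs
        acc += x
    end
    return acc
end

# Four accumulators (hypothetical helper): the four chains are independent,
# so a pipelined/superscalar core can overlap their additions.
function sum_four_acc(xs::AbstractVector{T}) where {T}
    a1 = a2 = a3 = a4 = zero(T)
    i = firstindex(xs)
    stop = lastindex(xs)
    while i + 3 <= stop
        a1 += xs[i]; a2 += xs[i + 1]; a3 += xs[i + 2]; a4 += xs[i + 3]
        i += 4
    end
    while i <= stop          # leftover elements when the length is not a multiple of 4
        a1 += xs[i]
        i += 1
    end
    return (a1 + a2) + (a3 + a4)
end

sum_four_acc(collect(1:103))   # 5356, same as sum_one_acc for integers
```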

|

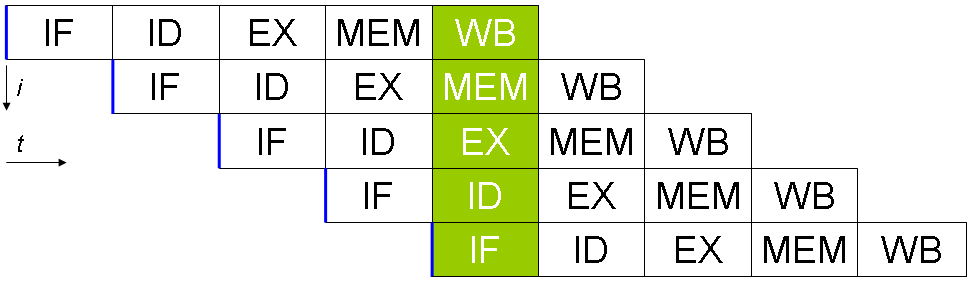

Hmm... Maybe I still don't get what pipelining does. But don't you still need to have different units (that can work in parallel)? For example, here is a picture from Wikipedia: IIUC you get 5x more throughput here because you have 5 distinct units that are involved in the code you are executing. |

That's true, there are different hardware units working in parallel; they're just distinct, non-equivalent pieces of hardware. You could say that in this diagram we have N=5 for the purposes of ILP, but I don't think that's accurate because it depends on the type of hazard. In the data dependency we have in […] In the Wikipedia diagram, EX for inst2 depends on WB for inst1, so in principle inst2 will be delayed for two cycles, stalling the pipeline. Similarly for inst3 and inst2. If we had a branch instruction rather than […] But in reality we have branch prediction and out-of-order execution, which could possibly hide pipeline stalls by overlapping them with the benchmarking harness. And I have no idea how similar the Wikipedia diagram is to a real processor!
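The two-cycle stall can be checked with a toy model. This sketch assumes an idealized in-order five-stage pipeline (IF, ID, EX, MEM, WB; one cycle per stage; no forwarding), in which a dependent instruction's EX may only start in the cycle after the previous instruction's WB. Real cores forward results and reorder instructions, so this only illustrates the arithmetic of the diagram; `pipeline_cycles` is a hypothetical helper.

```julia
# Toy in-order 5-stage pipeline (assumption: no forwarding, one cycle per stage).
# Returns the cycle in which the last of `n` instructions finishes WB.
function pipeline_cycles(n::Integer; dependent::Bool)
    ex_prev = 0   # cycle in which the previous instruction was in EX
    wb_prev = 0   # cycle in which the previous instruction was in WB
    for k in 1:n
        ex = k + 2                      # IF at k, ID at k + 1, so EX at k + 2 at the earliest
        ex = max(ex, ex_prev + 1)       # in order: cannot overtake a stalled predecessor
        if dependent && k > 1
            ex = max(ex, wb_prev + 1)   # wait until the operand has been written back
        end
        ex_prev = ex
        wb_prev = ex + 2                # MEM at ex + 1, WB at ex + 2
    end
    return wb_prev
end

pipeline_cycles(5; dependent = false)   # 9
pipeline_cycles(5; dependent = true)    # 17
```

Under these assumptions a chain of n dependent instructions finishes in 3n + 2 cycles instead of n + 4, i.e. two extra cycles for each dependent instruction after the first.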

I think I understand that cutting data dependencies helps a superscalar CPU. But still, isn't there a limited number of execution units you can use at the same time? Wouldn't that create an upper bound on the effective parallelism we can have? I just wondered how it compares to the "typical large" static array sizes (although I don't know what that would be either; I noticed that N=256 is used in perf/*.jl). Well, I guess the best way is to do some benchmarks for different vector lengths and look at the scaling.

Yeah, for sure there's an upper bound on the parallelism. But that doesn't imply an upper bound on the array size this is relevant to. The straightforward method has […]
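To make the depth argument concrete, consider an idealized model (an assumption, not a hardware description) where each binary operation takes one step and at most `units` independent operations can issue per step. A sequential reduction then needs N - 1 steps no matter how many units exist, while a balanced tree is bounded below by both its depth and the total work divided by `units`. The functions are hypothetical helpers for this model only.

```julia
# Idealized model (assumption): one step per binary op, at most `units` ops per step.
sequential_depth(n::Integer) = max(n - 1, 0)                   # one long dependency chain
tree_depth(n::Integer) = n <= 1 ? 0 : 1 + tree_depth(cld(n, 2)) # ceil(log2(n)) levels

# Lower bound on steps for a tree reduction: limited by the critical path
# and by the n - 1 total operations spread over `units`.
tree_steps_lower_bound(n::Integer, units::Integer) =
    max(tree_depth(n), cld(max(n - 1, 0), units))

tree_steps_lower_bound(256, 4)   # 64, versus sequential_depth(256) == 255
```

In this model a bounded number of units does not by itself cap the array size at which the tree can help: for N = 256 the bound is set by the work term, not the depth.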

To connect these two sentences (in the most trivial way), don't you need all […]

Yes, it's only approximately true when there's some level of dependence between operations. In reality there's a bottleneck of dependencies in the center of the tree which might cause trouble. My surface-level understanding is that a certain percentage of stalls can be mitigated with out-of-order execution and other hardware hacks, but it's hard to see how that would work if every instruction coming down the pipeline depends on the previous one.
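The shrinking parallelism near the root of the tree can be counted directly. Assuming a bottom-up pairwise tree (each level combines adjacent pairs and carries any odd element up), `level_widths` lists how many independent operations each level offers, and `level_steps` counts the steps needed when at most `units` of them can issue at once. Both are hypothetical helpers for illustration.

```julia
# Independent combine operations available at each level of a pairwise tree
# over n leaves, from the leaves up to the root (hypothetical helper).
function level_widths(n::Integer)
    widths = Int[]
    m = n
    while m > 1
        push!(widths, div(m, 2))  # independent pairs combined at this level
        m = cld(m, 2)             # partial results, plus any carried odd element
    end
    return widths
end

# Steps needed if at most `units` independent ops can issue per step.
level_steps(n::Integer, units::Integer) =
    mapreduce(w -> cld(w, units), +, level_widths(n); init = 0)

level_widths(16)     # [8, 4, 2, 1]
level_steps(16, 4)   # 5
```

For N = 16 and 4 units this gives 5 steps instead of the ideal cld(15, 4) = 4: the levels near the root cannot fill the available units.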

I remember seeing https://www.llvm.org/docs/CommandGuide/llvm-mca.html and https://github.com/vchuravy/MCAnalyzer.jl, so I tried using them:

```julia
julia> using MCAnalyzer, StaticArrays

julia> function mapsum(f, xs)
           @inbounds acc = f(xs[1])
           for i in 2:length(xs)
               mark_start()    # start of the region analyzed by llvm-mca
               @inbounds acc += f(xs[i])
           end
           mark_end()          # end of the analyzed region
           acc
       end
mapsum (generic function with 1 method)

julia> xs = [SVector(tuple((1.0:16.0)...))]
1-element Array{SArray{Tuple{16},Float64,1,16},1}:
 [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 10.0, 11.0, 12.0, 13.0, 14.0, 15.0, 16.0]

julia> analyze(mapsum, Tuple{typeof(cumsum_tree_16), typeof(xs)})  # cumsum_tree_16 is defined elsewhere (not shown)
...
Block Throughput: 34.05 Cycles       Throughput Bottleneck: Backend
...
Total Num Of Uops: 133
...

julia> analyze(mapsum, Tuple{typeof(cumsum), typeof(xs)})
...
Block Throughput: 53.02 Cycles       Throughput Bottleneck: Backend
...
Total Num Of Uops: 152
...
```

I have no idea how to parse the output, but it says […]. Please tell me if you can decode something interesting from the full output :)


Wow, this is cool.

Well, I suppose the number of assembly operations can go up while the number of micro-ops goes down (maybe this is what […]). The hardware available on the ports is documented at https://en.wikichip.org/wiki/intel/microarchitectures/skylake_(client)#Scheduler_Ports_.26_Execution_Units. I tried out […]

Another interesting thing was enabling the timeline output and bottleneck analysis by hacking `MCAnalyzer.llvm_mca` to use

```julia
Base.run(`$llvm_mca --bottleneck-analysis --timeline -mcpu $(llvm_march(march)) $asmfile`)
```

You can clearly see the register data dependency in the timelines, for example […]. The timeline view for llvm-mca is described at […]. It's very interesting to see this stuff.

It seems the timeline output from […]

As I discussed briefly with @c42f in #702 (comment), using the divide-and-conquer approach in `reduce` on static arrays may be useful for leveraging instruction-level parallelism (ILP). I cooked up two (somewhat contrived?) examples, and it turned out that the divide-and-conquer approach can be 3x to 4x faster (on my laptop) than the sequential (`foldl`) approach. This is the case when "parallelizing" both compute-bound and memory-bound instructions. I also made two versions of the benchmarks, with and without `@simd`. I was surprised that adding `@simd` didn't improve the `foldl` version.

Anyway, I think this is a pretty good motivation for using this approach in StaticArrays.jl, although it'd be even nicer if there were more "real-world" benchmarks like this. What do you think? Does it make sense to use this approach in StaticArrays.jl?
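A minimal sketch of the two reduction orders on plain tuples (used here, as in the issue, as a stand-in for `SVector`). `tree_reduce` is a hypothetical name: it splits the tuple in half, reduces each half independently and combines the results, so the two halves carry no data dependency on each other. It only agrees with `foldl` for associative operators, and for floating-point `+` the results may differ by rounding. This version favors clarity over speed; a version tuned for static arrays would likely want the split resolved at compile time.

```julia
# Divide-and-conquer reduce over a tuple (illustrative sketch, hypothetical name).
tree_reduce(op, ::Tuple{}) = throw(ArgumentError("cannot reduce an empty tuple"))
tree_reduce(op, t::Tuple{Any}) = t[1]
function tree_reduce(op, t::Tuple)
    h = div(length(t), 2)                 # size of the left half
    left = tree_reduce(op, t[1:h])        # no dependency on `right`
    right = tree_reduce(op, t[h+1:end])   # no dependency on `left`
    return op(left, right)
end

t = ntuple(identity, 16)
tree_reduce(+, t) == foldl(+, t)   # true: + is associative on integers
tree_reduce(-, (1, 2, 3, 4))       # 0, whereas foldl(-, (1, 2, 3, 4)) == -8
```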

Code

I'm using `NTuple` as a model of `SVector` here. `mapmapreduce!` is the main function that I benchmarked.

Output

Here is a typical result on my laptop:
