This is the main page where we post the latest updates to the featurewiz library. Make sure you upgrade featurewiz every time you run it to take advantage of new features. There are new updates almost every week!

Featurewiz now introduces the BlaggingClassifier, one of the best classifiers ever, originally written by Gilles Louppe (licensed under the BSD 3-Clause license) with adaptations by Tom E Fawcett. This is an amazing classifier that everyone should try on their imbalanced and multi-class problems. Don't just take our word for it, try it out yourself and see!

In addition, featurewiz introduces three more classifiers built for multi-class and binary classification problems: the IterativeBestClassifier, the IterativeDoubleClassifier and the IterativeSearchClassifier. You can read more about how they work in their docstrings. Try them out and see.

The FeatureWiz transformer now includes powerful deep learning auto encoders through the new auto_encoders argument. They transform your features into a lower-dimensional space while capturing important patterns inherent in your dataset. This is known as "feature extraction" and is a powerful tool for tackling very difficult classification problems. You can set the auto_encoders option to VAE for a Variational Auto Encoder, DAE for a Denoising Auto Encoder, CNN for CNNs, or GAN for GAN-based data augmentation that generates synthetic data. These options will completely replace your existing features. If you want to add them as additional features instead, set the auto_encoders option to VAE_ADD, DAE_ADD or CNN_ADD, and featurewiz will automatically add these features to your existing dataset. In addition, it will do feature selection among your old and new features. Isn't that awesome?

I have uploaded a sample notebook for you to test and improve your classifier performance using these options on imbalanced datasets. Please send me comments via the email address displayed on my GitHub main page.
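The option values listed above fall into two groups, which the following minimal sketch makes explicit. The helper `describe_auto_encoders` and the `fwiz_kwargs` dictionary are hypothetical names used only for illustration; they are not part of featurewiz, and only the option strings themselves come from the text above.

```python
# Hypothetical helper (not part of featurewiz) that reports what each
# documented auto_encoders value does to the existing feature set.
REPLACE_OPTIONS = {"VAE", "DAE", "CNN", "GAN"}      # replace existing features
ADD_OPTIONS = {"VAE_ADD", "DAE_ADD", "CNN_ADD"}     # add to existing features


def describe_auto_encoders(option: str) -> str:
    """Return 'replace' or 'add' for a documented auto_encoders value."""
    if option in REPLACE_OPTIONS:
        return "replace"
    if option in ADD_OPTIONS:
        return "add"
    raise ValueError(f"Unknown auto_encoders option: {option!r}")


# Keyword arguments one might pass on, e.g. FeatureWiz(**fwiz_kwargs).
# Only the auto_encoders argument is taken from the text above.
fwiz_kwargs = {"auto_encoders": "VAE_ADD"}
print(describe_auto_encoders(fwiz_kwargs["auto_encoders"]))  # prints "add"
```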

Update (November 2023): The FeatureWiz transformer (version 0.4.3 on) includes an "add_missing" flag

The FeatureWiz transformer now includes an add_missing flag, which adds a new column flagging missing values for each variable in your dataset. This helps you capture missing values as an added signal when you use the FeatureWiz library. Try it out and let us know in your comments via email.
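To make the idea of a missing-value column concrete, here is a small plain-Python sketch. It is not featurewiz's implementation: the function name `add_missing_indicators` and the `_missing` column suffix are assumptions chosen for illustration, and the naming featurewiz actually uses is not stated here.

```python
import math


def add_missing_indicators(rows, suffix="_missing"):
    """Return copies of the row dicts with a 0/1 missing flag per column."""

    def is_missing(value):
        # Treat None and float NaN as missing values.
        return value is None or (isinstance(value, float) and math.isnan(value))

    columns = sorted({key for row in rows for key in row})
    result = []
    for row in rows:
        new_row = dict(row)
        for col in columns:
            new_row[col + suffix] = int(is_missing(row.get(col)))
        result.append(new_row)
    return result


rows = [
    {"age": 34, "income": 52000.0},
    {"age": None, "income": float("nan")},
]
flagged = add_missing_indicators(rows)
print(flagged[0]["age_missing"], flagged[1]["age_missing"])  # prints "0 1"
```

A model can then use the indicator columns as extra features, which is the "added signal" described above.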

The new FeatureWiz transformer includes a categorical encoder + date-time + NLP transformer that converts all your string, text and date-time columns into numeric variables in one step. You will see a fully transformed (all-numeric) dataset when you use the FeatureWiz transformer. Try it out and let us know in your comments via email.

Two flags let you skip the recursive XGBoost and/or SULOV methods: skip_xgboost and skip_sulov. Both are set to False by default, but you can set either one to True to skip the corresponding method.
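As a rough sketch, the two flags can be collected as keyword arguments and passed to the transformer. The dictionary name `skip_options` and the `enabled_steps` summary are illustrative only; the commented-out constructor call assumes featurewiz is installed.

```python
# Keyword arguments for the FeatureWiz transformer. Both flags default to
# False according to the text above; True skips the corresponding step.
skip_options = {
    "skip_sulov": True,     # skip the SULOV method
    "skip_xgboost": False,  # keep the recursive XGBoost method
}

# With featurewiz installed, these could be passed to the constructor:
# from featurewiz import FeatureWiz
# selector = FeatureWiz(**skip_options)

# Summarize which steps would still run under this configuration.
enabled_steps = [
    name.replace("skip_", "") for name, skip in skip_options.items() if not skip
]
print(enabled_steps)  # prints "['xgboost']"
```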

The latest version of featurewiz is here! The new 3.0 version of featurewiz provides slightly better performance by about 1-2% in diverse datasets (your experience may vary). Install it and check it out!

XGBoost versions 1.7 and later do not work with featurewiz because of massive changes to the XGBoost API. Please switch to xgboost 1.5 if you want to run featurewiz.
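A quick way to check a version string against this constraint is sketched below. The helper `xgboost_version_ok` is a hypothetical name, and treating every version below 1.7 as acceptable is an assumption: the note above only says that 1.7+ fails and that 1.5 works.

```python
def xgboost_version_ok(version: str) -> bool:
    """Return True when the major.minor part of the version is below 1.7."""
    major, minor = (int(part) for part in version.split(".")[:2])
    return (major, minor) < (1, 7)


# With xgboost installed, the version string could be read like this:
# from importlib.metadata import version
# installed = version("xgboost")
for candidate in ("1.5.1", "1.7.0", "2.0.3"):
    print(candidate, xgboost_version_ok(candidate))
```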

featurewiz 2.0 is here. You have two small performance improvements:

1. The SULOV method now has a higher default correlation limit of 0.90. This means fewer variables are removed and hence more variables are selected. You can always go back to the old limit by setting `corr_limit=0.70` if you want.

2. The recursive XGBoost algorithm is tighter in that it selects fewer features in each iteration. To see how many it selects, set the `verbose` flag to 1.

The net effect is that the same number of features are selected but they are better at producing more accurate models. Try it out and let us know.
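The effect of raising the correlation limit can be illustrated with a toy example. The sketch below only counts which feature pairs exceed a given limit; it is not the SULOV method itself, and how SULOV decides which variable of a flagged pair to drop is not covered here. The feature names and values are made up for illustration.

```python
from itertools import combinations
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def correlated_pairs(features, corr_limit):
    """Return feature-name pairs whose absolute correlation exceeds corr_limit."""
    return [
        (a, b)
        for a, b in combinations(sorted(features), 2)
        if abs(pearson(features[a], features[b])) > corr_limit
    ]


features = {
    "f1": [1, 2, 3, 4, 5],
    "f2": [2, 4, 6, 8, 10],  # perfectly correlated with f1
    "f3": [1, 3, 2, 5, 4],   # correlation of 0.8 with f1 and f2
}

print(len(correlated_pairs(features, 0.70)))  # prints "3"
print(len(correlated_pairs(features, 0.90)))  # prints "1"
```

With the limit at 0.70, all three pairs are flagged as highly correlated; at 0.90 only one pair is, so fewer variables are candidates for removal.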

featurewiz now has a new input, the skip_sulov flag. You can set it to True to skip the SULOV method if needed.

featurewiz now has a "silent" mode which you can set using the "verbose=0" option. It will run silently with no charts or graphs and very minimal verbose output. Hope this helps!

featurewiz as of version 0.1.50 or higher includes multiple high-performance models that you can use once you have completed feature selection. These models are based on LightGBM and XGBoost and even include Stacking and Blending ensembles. You can find them as functions starting with "simple_" and "complex_" under featurewiz. All the best!

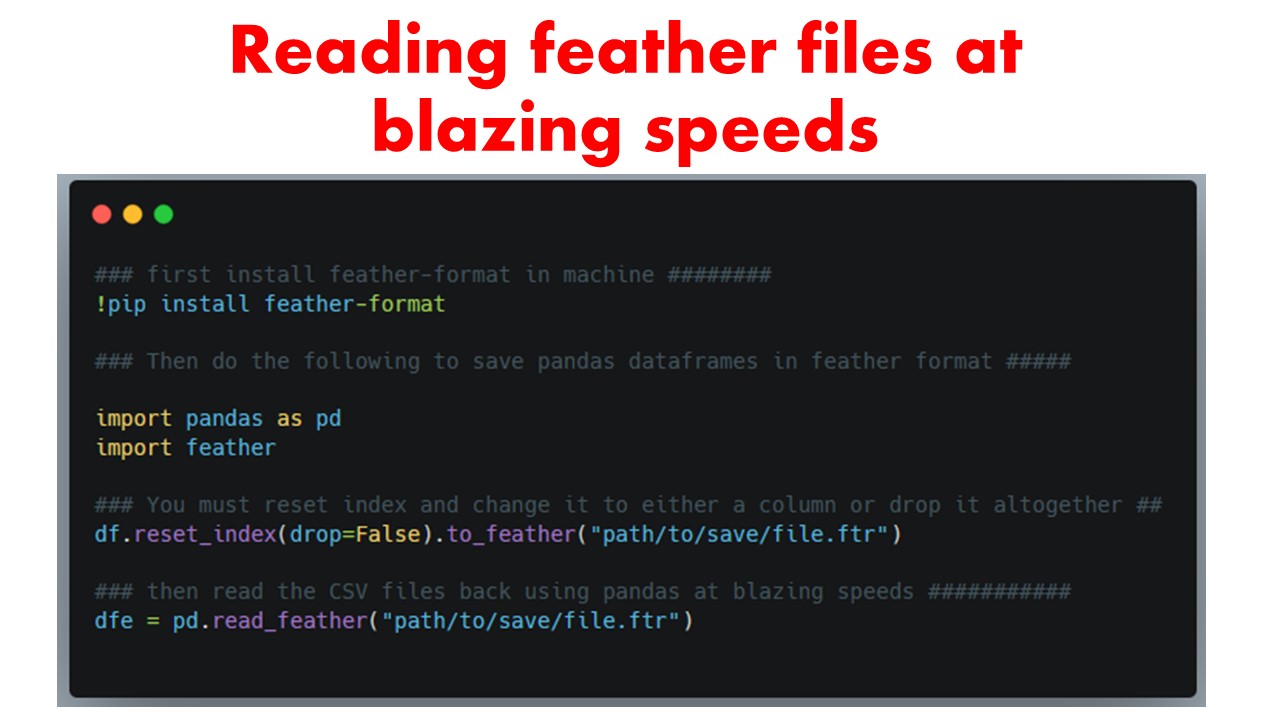

featurewiz as of version 0.1.04 or higher can read feather-format files at blazing speeds. Convert your CSV files to feather first, then feed those '.ftr' files to featurewiz and it will read them 10-100X faster!
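One possible way to do the CSV-to-feather conversion is with pandas, sketched below. This assumes pandas and pyarrow are installed; the helper names `feather_path_for` and `csv_to_feather` and the file name `train.csv` are hypothetical and not part of featurewiz.

```python
from pathlib import Path


def feather_path_for(csv_path: str) -> Path:
    """Return the CSV path with its suffix changed to .ftr."""
    return Path(csv_path).with_suffix(".ftr")


def csv_to_feather(csv_path: str) -> Path:
    """Read a CSV file with pandas and write it back out in feather format."""
    import pandas as pd  # imported lazily; to_feather also requires pyarrow

    target = feather_path_for(csv_path)
    pd.read_csv(csv_path).to_feather(target)
    return target


# Example call (train.csv is a hypothetical file name):
# ftr_file = csv_to_feather("train.csv")
print(feather_path_for("train.csv"))  # prints "train.ftr"
```

The resulting '.ftr' file can then be supplied wherever you previously supplied the CSV file.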

featurewiz now runs at blazing speeds thanks to using GPUs by default. If you are running a large dataset on Colab and/or Kaggle, make sure you turn on the GPU kernels. featurewiz will automatically detect that a GPU is turned on and will run XGBoost using GPU-hist, so it will crunch your datasets even faster. I tested it with a very large dataset and it reduced the running time from 52 minutes to 1 minute! That's a 98% reduction in running time using GPU compared to CPU!
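The 98% figure follows directly from the two timings quoted above, as this short calculation shows (the variable names are illustrative):

```python
cpu_minutes = 52  # running time on CPU, from the test described above
gpu_minutes = 1   # running time on GPU

reduction_pct = (cpu_minutes - gpu_minutes) / cpu_minutes * 100
speedup = cpu_minutes / gpu_minutes

print(f"{reduction_pct:.1f}% less time, {speedup:.0f}x faster")
# prints "98.1% less time, 52x faster"
```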

Update (Jan 2022): FeatureWiz is now a sklearn-compatible transformer that you can use in data pipelines

FeatureWiz as of version 0.0.90 or higher is a scikit-learn compatible feature selection transformer. You can perform fit and transform as follows. You will get a transformer that can select the top variables from your dataset. You can also use it in sklearn pipelines as a transformer.

```python
from featurewiz import FeatureWiz

features = FeatureWiz(corr_limit=0.70, feature_engg='', category_encoders='',
                      dask_xgboost_flag=False, nrows=None, verbose=2)
X_train_selected, y_train = features.fit_transform(X_train, y_train)
X_test_selected = features.transform(X_test)
features.features  # provides the list of selected features
```

Featurewiz is now upgraded with XGBOOST 1.5.1 for DASK for blazing fast performance even for very large data sets! Set dask_xgboost_flag = True to run dask + xgboost.

featurewiz now runs with a default setting of nrows=None, which means it uses all rows. If you want it to run faster, set nrows to 1000 (or any other number) and it will sample that many rows before running.
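The behavior of an nrows-style setting can be sketched in plain Python as follows. This is only an illustration of the idea: the function `sample_rows`, the fixed seed and the use of uniform random sampling are assumptions, and featurewiz's own sampling strategy is not described here.

```python
import random


def sample_rows(rows, nrows=None, seed=42):
    """Return all rows when nrows is None, else a random sample of nrows rows."""
    if nrows is None or nrows >= len(rows):
        return list(rows)
    rng = random.Random(seed)  # fixed seed so the sample is repeatable
    return rng.sample(rows, nrows)


data = [{"id": i, "x": i * 0.5} for i in range(10_000)]
print(len(sample_rows(data)))              # prints "10000"
print(len(sample_rows(data, nrows=1000)))  # prints "1000"
```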

Featurewiz has lots of new fast model training functions that you can use to train highly performant models with the features selected by featurewiz. They are:

- simple_LightGBM_model(): simple regression and classification with one target label.
- simple_XGBoost_model(): simple regression and classification with one target label.
- complex_LightGBM_model(): more complex multi-label and multi-class models.
- complex_XGBoost_model(): more complex multi-label and multi-class models.
- Stacking_Classifier(): stacking model that can handle multi-label, multi-class problems.
- Stacking_Regressor(): stacking model that can handle multi-label regression problems.
- Blending_Regressor(): blending model that can handle multi-label regression problems.