This is the code for the paper

Julieta Martinez, Rayat Hossain, Javier Romero, James J. Little. A simple yet effective baseline for 3d human pose estimation. In ICCV, 2017. https://arxiv.org/pdf/1705.03098.pdf.

The code in this repository was mostly written by Julieta Martinez, Rayat Hossain and Javier Romero.

We provide a strong baseline for 3d human pose estimation that also sheds light on the challenges of current approaches. Our model is lightweight and we strive to make our code transparent, compact, and easy-to-understand.

- h5py

- tensorflow 1.0 or later

- Watch our video: https://youtu.be/Hmi3Pd9x1BE

- Clone this repository and get the data. We provide the Human3.6M dataset in 3d points, camera parameters to produce ground truth 2d detections, and Stacked Hourglass detections.

git clone https://github.com/una-dinosauria/3d-pose-baseline.git

cd 3d-pose-baseline

mkdir data

cd data

wget https://www.dropbox.com/s/e35qv3n6zlkouki/h36m.zip

unzip h36m.zip

rm h36m.zip

cd ..

For a quick demo, you can train for one epoch and visualize the results. To train, run

python src/predict_3dpose.py --camera_frame --residual --batch_norm --dropout 0.5 --max_norm --evaluateActionWise --use_sh --epochs 1

This should take less than 5 minutes on a GTX 1080, and give you around 75 mm of error on the test set.
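To make the error figure concrete: it is an average distance, in millimetres, between predicted and ground-truth 3d joints. The sketch below is a minimal illustration of that idea, not the evaluation code in this repository, which may also apply alignment or root-centering. The function name `mean_joint_error_mm` and the sample coordinates are hypothetical.

```python
import math

def mean_joint_error_mm(predicted, ground_truth):
    """Average Euclidean distance between matching 3d joints, in the input units."""
    if len(predicted) != len(ground_truth):
        raise ValueError("both poses must have the same number of joints")
    distances = [math.dist(p, g) for p, g in zip(predicted, ground_truth)]
    return sum(distances) / len(distances)

# Two made-up joints with coordinates in millimetres (illustrative only).
predicted = [(0.0, 0.0, 0.0), (100.0, 0.0, 0.0)]
ground_truth = [(30.0, 40.0, 0.0), (100.0, 0.0, 120.0)]

# Per-joint distances are 50 mm and 120 mm, so the mean is 85 mm.
error = mean_joint_error_mm(predicted, ground_truth)
print(error)
```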

Now, to visualize the results, simply run

python src/predict_3dpose.py --camera_frame --residual --batch_norm --dropout 0.5 --max_norm --evaluateActionWise --use_sh --epochs 1 --sample --load 24371

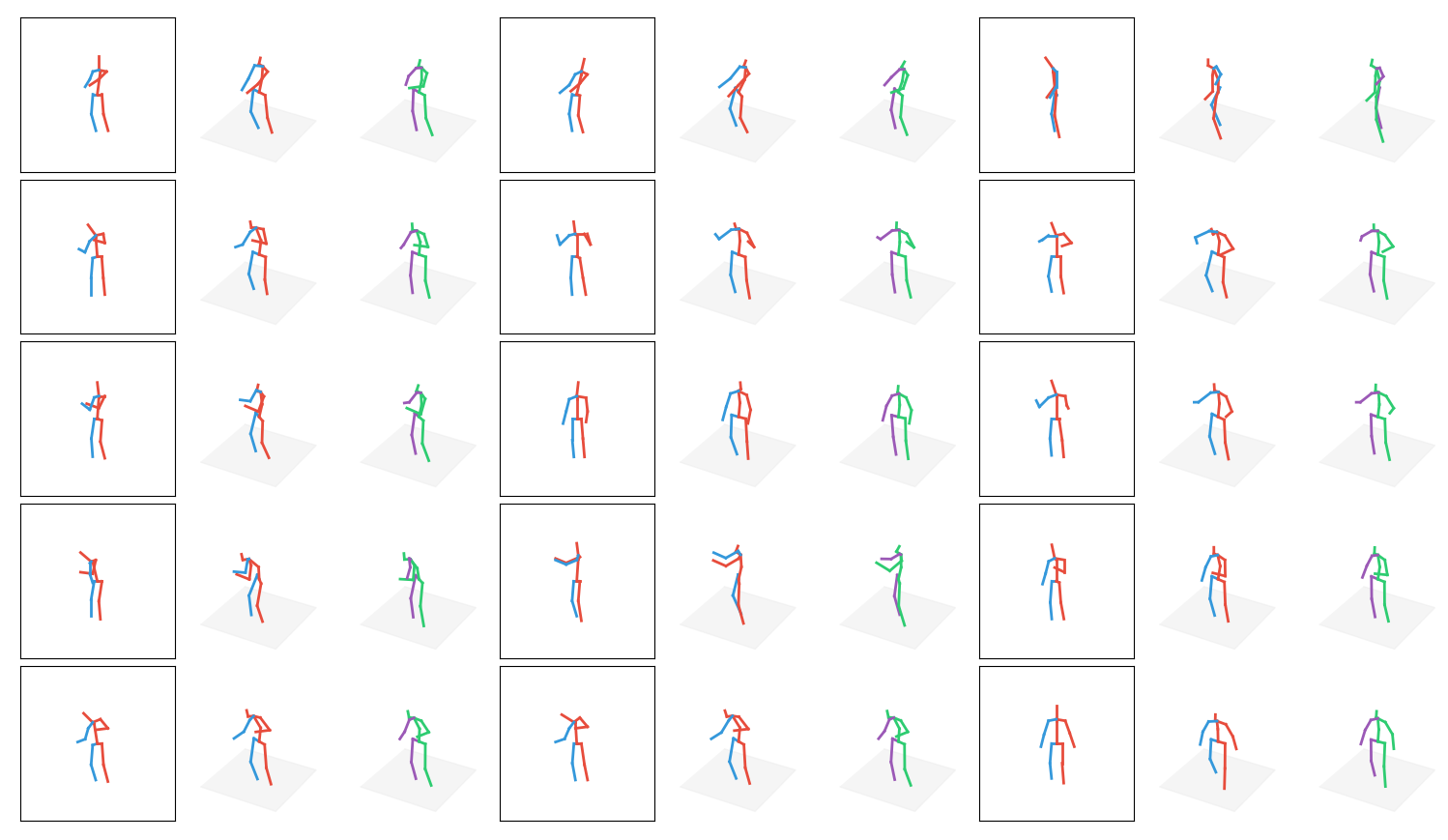

This will produce a visualization similar to this:

openpose/tf-pose-estimation/keras_Realtime_Multi-Person_Pose_Estimation to 3d-Pose-Baseline

- Set up openpose and use the --write_json flag to export pose keypoints.

or

- Fork tf-pose-estimation and add the --output_json flag to export pose keypoints, like

python run_webcam.py --model=mobilenet_thin --resize=432x368 --camera=0 --output_json /path/to/directory

Check the diff for details.

or

- Fork keras_Realtime_Multi-Person_Pose_Estimation and use

python demo_image.py --image sample_images/p1.jpg

for a single image, or

python demo_camera.py

for a webcam feed. Check the keypoints diff and webcam diff for more info.

- Download the pre-trained model below.

- Simply run

python src/openpose_3dpose_sandbox.py --camera_frame --residual --batch_norm --dropout 0.5 --max_norm --evaluateActionWise --use_sh --epochs 200 --load 4874200 --pose_estimation_json /path/to/json_directory --write_gif --gif_fps 24

Optionally, add --verbose 3 for debug output. For interpolation, add --interpolation and use --multiplier.

- Or, for 'real time', run

python3.5 src/openpose_3dpose_sandbox_realtime.py --camera_frame --residual --batch_norm --dropout 0.5 --max_norm --evaluateActionWise --use_sh --epochs 200 --load 4874200 --pose_estimation_json /path/to/json_directory
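Before feeding a JSON directory to the scripts above, it can help to check what the exported keypoints look like. The sketch below assumes the usual OpenPose layout, where each frame file holds a `people` list and each person carries a flat `pose_keypoints_2d` array of x, y, confidence values (older releases used `pose_keypoints`). The helper name `read_pose_keypoints` and the sample values are hypothetical, and this is not the parser used in this repository.

```python
import json

def read_pose_keypoints(json_text):
    """Return one list per person, each holding (x, y, confidence) tuples."""
    frame = json.loads(json_text)
    people = []
    for person in frame.get("people", []):
        # Assumption: newer OpenPose uses "pose_keypoints_2d", older "pose_keypoints".
        flat = person.get("pose_keypoints_2d") or person.get("pose_keypoints") or []
        # Group the flat array into triplets, ignoring any incomplete tail.
        triplets = [tuple(flat[i:i + 3]) for i in range(0, len(flat) - 2, 3)]
        people.append(triplets)
    return people

# A made-up frame with one person and two keypoints.
sample = '{"people": [{"pose_keypoints_2d": [10.0, 20.0, 0.9, 30.0, 40.0, 0.8]}]}'
keypoints = read_pose_keypoints(sample)
print(keypoints)
```

For a real file, read its contents with `open(path).read()` and pass the text to the same function.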

- Use the --write_json and --write_images flags to export keypoints and frame images from openpose; the images will be used as the image plane inside maya.

- Run

python src/openpose_3dpose_sandbox.py --camera_frame --residual --batch_norm --dropout 0.5 --max_norm --evaluateActionWise --use_sh --epochs 200 --load 4874200 --pose_estimation_json /path/to/json_directory --write_gif --gif_fps 24

- For interpolation, add --interpolation and use --multiplier 0.5.
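As a rough intuition for what interpolating between frames means, the sketch below linearly blends two keypoint frames to produce in-between frames. It is only an illustration: how --interpolation and --multiplier behave inside this repository is not described here, and the function `lerp_frames` and its `steps` parameter are hypothetical.

```python
def lerp_frames(frame_a, frame_b, steps):
    """Return `steps` evenly spaced frames strictly between frame_a and frame_b."""
    frames = []
    for k in range(1, steps + 1):
        t = k / (steps + 1)  # blend factor, strictly between 0 and 1
        frames.append([a + t * (b - a) for a, b in zip(frame_a, frame_b)])
    return frames

# Made-up coordinate values for one joint in two consecutive frames.
start = [0.0, 0.0, 0.0]
end = [4.0, 8.0, 12.0]

# Three in-between frames at 25%, 50%, and 75% of the way from start to end.
inbetween = lerp_frames(start, end, 3)
print(inbetween)
```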

3d pose baseline now creates a json file, 3d_data.json, with x, y, z coordinates inside the maya folder.

- Change the variables in maya/maya_skeleton.py: set threed_pose_baseline to the main 3d-pose-baseline path and openpose_images to the same path as --write_images (step 1).

- Open maya and import maya/maya_skeleton.py.

maya_skeleton.py will load the data (3d_data.json and 2d_data.json) to build a skeleton, parenting joints and setting the predicted animation provided by 3d-pose-baseline.

- Create an image plane and use the created images inside maya/image_plane/ as a sequence.

- "Real-time" stream: openpose > 3d-pose-baseline > maya (soon).

- Implemented unity stream; check the work of Zhenyu Chen, openpose_3d-pose-baseline_unity3d.

To train a model with clean 2d detections, run:

python src/predict_3dpose.py --camera_frame --residual --batch_norm --dropout 0.5 --max_norm --evaluateActionWise

This corresponds to Table 2, bottom row: Ours (GT detections) (MA).

To train on Stacked Hourglass detections, run

python src/predict_3dpose.py --camera_frame --residual --batch_norm --dropout 0.5 --max_norm --evaluateActionWise --use_sh

This corresponds to Table 2, next-to-last row: Ours (SH detections) (MA).

On a GTX 1080 GPU, this takes <8 ms for forward+backward computation, and <6 ms for forward-only computation per batch of 64.
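These per-batch timings translate directly into throughput. The short calculation below is a sketch that converts milliseconds per batch of 64 into poses per second; because the quoted timings are upper bounds, the resulting rates are lower bounds. The function name `poses_per_second` is hypothetical.

```python
def poses_per_second(ms_per_batch, batch_size=64):
    """Convert a per-batch time in milliseconds into poses processed per second."""
    return batch_size / (ms_per_batch / 1000.0)

# Under 8 ms for forward+backward means at least about 8,000 poses per second.
train_rate = poses_per_second(8.0)

# Under 6 ms for forward-only means at least about 10,600 poses per second.
forward_rate = poses_per_second(6.0)

print(round(train_rate), round(forward_rate))
```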

We also provide a model pre-trained on Stacked Hourglass detections, available through Google Drive.

To test the model, decompress the file at the top level of this project, and call

python src/predict_3dpose.py --camera_frame --residual --batch_norm --dropout 0.5 --max_norm --evaluateActionWise --use_sh --epochs 200 --sample --load 4874200
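The commands in this README share most of their flags, so when scripting several runs it may be convenient to assemble them programmatically. The sketch below builds the argument list from the flags shown above; the helper `build_command` and its keyword arguments are hypothetical and not part of this repository.

```python
import shlex

# Flags shared by every predict_3dpose.py invocation shown in this README.
BASE_FLAGS = [
    "--camera_frame", "--residual", "--batch_norm",
    "--dropout", "0.5", "--max_norm", "--evaluateActionWise",
]

def build_command(use_sh=False, epochs=None, sample=False, load=None):
    """Assemble the argument list for src/predict_3dpose.py (hypothetical helper)."""
    command = ["python", "src/predict_3dpose.py"] + BASE_FLAGS
    if use_sh:
        command.append("--use_sh")  # train or test on Stacked Hourglass detections
    if epochs is not None:
        command += ["--epochs", str(epochs)]
    if sample:
        command.append("--sample")  # visualize results
    if load is not None:
        command += ["--load", str(load)]  # checkpoint number to load
    return command

# Rebuild the test command for the pre-trained model.
test_command = build_command(use_sh=True, epochs=200, sample=True, load=4874200)
print(shlex.join(test_command))
```

To actually launch a run, pass the list to `subprocess.run(test_command, check=True)` from the top level of the project.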

If you use our code, please cite our work

@inproceedings{martinez_2017_3dbaseline,
  title={A simple yet effective baseline for 3d human pose estimation},
  author={Martinez, Julieta and Hossain, Rayat and Romero, Javier and Little, James J.},
  booktitle={ICCV},
  year={2017}
}

MIT