Author: Tobit Flatscher (August 2022 - February 2023)

The following sections outline a few things to consider when setting up a real-time capable system, optimizations that should significantly improve its real-time performance, and different techniques for benchmarking the real-time performance of a system. This guide is largely based on the exhaustive Red Hat optimisation guide, focusing on particular aspects of it. Additional information can be found in the Ubuntu real-time kernel tuning guide as well as here.

The latency on most computers that are optimised for energy efficiency, like laptops, will be one or two orders of magnitude larger than that of a desktop system, as can also clearly be seen when browsing the OSADL latency plots. It is therefore generally not advisable to use laptops for real-time tests (with or without Docker): some might have decent performance most of the time but might show huge latencies in the range of milliseconds when run over an extended period. Some single-board computers like the Raspberry Pi seem to be surprisingly decent but still can't compete with a desktop computer. The Open Source Automation Development Lab eG (OSADL) performs long-term tests on several systems that can be inspected on their website in case you want to compare the performance of your system to others.

Real-time performance might be improved by changing the kernel configuration when recompiling the kernel. On the OSADL long-term test farm website you can inspect and download the kernel configuration of a particular system (e.g. here). Important settings that might help reduce latency include disabling all irrelevant debugging features. The configuration of your current kernel can be displayed with `$ cat /boot/config-$(uname -r)`.

The operating system uses the system tick to generate interrupt requests for scheduling events that can cause a context switch for time-sliced/round-robin scheduling of tasks with the same priority. The resolution of these timer ticks can be adjusted to improve the accuracy of timed events.

On Linux the highest currently supported tick rate is 1000 Hz, which can be set with the kernel configuration options:

```
# Requires commenting out the other entries such as CONFIG_HZ_250=y and CONFIG_HZ=250
CONFIG_HZ_1000=y
CONFIG_HZ=1000
```

In order to be more performant one can also either stop the periodic ticks on idle CPUs

```
CONFIG_NO_HZ_IDLE=y
```

or additionally omit the scheduling-clock ticks on CPUs that only have one runnable task, as described here as well as here:

```
CONFIG_NO_HZ_FULL=y
```

The two options are alternatives of the same kernel configuration choice, and `CONFIG_NO_HZ_FULL` also stops the tick on idle CPUs. The latter can improve the worst-case latency by the duration of the scheduling-clock interrupt. The CPUs it should be applied to have to be marked explicitly with the boot parameter `nohz_full=6-8`, but it is not possible to mark all of the CPUs as adaptive-tick CPUs: at least one CPU has to keep its tick to handle timekeeping. Older kernels also offered the configuration option `CONFIG_NO_HZ_FULL_ALL=y`, which activated this mode for all CPUs except the boot CPU, but it has since been removed from the mainline kernel.

For lock-less programming Linux provides the read-copy update (RCU) synchronization mechanism. It avoids lock-based primitives when multiple threads concurrently read and update elements that are linked through pointers and belong to shared data structures, while still avoiding inconsistencies. This mechanism sometimes queues callbacks on CPUs to be performed at a later moment. One can exclude certain CPUs from running RCU callbacks by compiling the kernel with

```
CONFIG_RCU_NOCB_CPU=y
```

and later specifying the list of CPUs this should be applied to with the boot parameter, e.g. `rcu_nocbs=6-8`.
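To check which of the tick- and RCU-related options above a given kernel was built with, one can filter its configuration file. The following is a minimal sketch; the function name `show_rt_config` is hypothetical, and it simply prints the matching lines, reporting options that are absent from the file as not set:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print how the tick- and RCU-related options are set
# in a kernel configuration file.
# Usage: show_rt_config [config_file]   (defaults to the running kernel's config)
show_rt_config() {
  local config="${1:-/boot/config-$(uname -r)}"
  local option line
  for option in CONFIG_HZ CONFIG_HZ_1000 CONFIG_NO_HZ_IDLE CONFIG_NO_HZ_FULL CONFIG_RCU_NOCB_CPU; do
    # Match both the "CONFIG_X=..." and the "# CONFIG_X is not set" form;
    # the "=" ensures that e.g. CONFIG_HZ does not match CONFIG_HZ_1000
    line=$(grep -E "^(${option}=|# ${option} is not set)" "$config")
    echo "${line:-# ${option} is not set}"
  done
}
```

For example, `show_rt_config` inspects the running kernel, while `show_rt_config .config` checks the configuration inside a kernel source tree before compiling.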

During operation the operating system may scale the CPU frequency up and down in order to improve performance or save energy. Responsible for this are:

- The scaling governor: Algorithms that compute the CPU frequency depending on the load etc.

- The scaling driver that interacts with the CPU to enforce the desired frequency

For more detailed information see e.g. Arch Linux.

While these settings might help save energy, they generally increase latency and should thus be deactivated on real-time systems.
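One common way to keep the frequency from being scaled down is to select the `performance` governor for every core through the cpufreq sysfs interface. A minimal sketch, assuming it is run as root; which governors are available depends on the scaling driver (see `scaling_available_governors`), the setting does not persist across reboots, and `SYSFS_ROOT` is a hypothetical override that only exists so the function can be tried on a copy of the directory tree:

```shell
#!/usr/bin/env bash
# Set the cpufreq scaling governor of every CPU core to "performance".
# SYSFS_ROOT is a hypothetical override (defaults to /sys) for testing.
set_performance_governor() {
  local root="${SYSFS_ROOT:-/sys}"
  local gov
  for gov in "$root"/devices/system/cpu/cpu[0-9]*/cpufreq/scaling_governor; do
    [ -e "$gov" ] || continue   # core without cpufreq support, or glob did not match
    echo performance > "$gov"
  done
}
```

The result can be checked with `cat /sys/devices/system/cpu/cpu*/cpufreq/scaling_governor`.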

On Intel CPUs (and similarly on AMD processors) the driver offers two possibilities to reduce the power consumption (see e.g. here and here as well as the documentation of the Linux kernel here or here for an in-depth technical overview):

- P-states: The processor can be run at lower voltages and/or frequency levels in order to decrease power consumption

- C-states: Idle states, where subsystems are powered down.

Both kinds of states are numbered: 0 corresponds to the operational state with maximum performance, while higher levels correspond to power-saving (and likely latency-increasing) modes.

In my experience the C-states have the major impact on latency, while setting the P-states to performance has only a minor influence, if any. Often both of these settings can be changed in the BIOS, but Linux often discards the corresponding BIOS settings. It is therefore important to configure the operating system accordingly, as we will see in the next section.

Additionally, there are other dynamic frequency scaling features like Turbo Boost that temporarily raise the operating frequency above the nominal frequency when demanding tasks are run. To what degree this is possible depends on the number of active cores, the current and power consumption as well as the CPU temperature. Values for different processors can be found e.g. on WikiChip. This feature can be deactivated inside the operating system as well as in the BIOS and is often deactivated on servers that are supposed to run 24/7 at full load. For best real-time performance you should also turn hyperthreading off.
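Both features can also be switched off at runtime through sysfs. The following sketch assumes the intel_pstate driver, which exposes `intel_pstate/no_turbo`, and falls back to `cpufreq/boost` as used by e.g. acpi-cpufreq; it also assumes a kernel recent enough to offer `smt/control`. The changes do not persist across reboots, and `SYSFS_ROOT` is again a hypothetical testing override:

```shell
#!/usr/bin/env bash
# Disable turbo boost and simultaneous multithreading (hyperthreading) at runtime.
# Must be run as root. SYSFS_ROOT is a hypothetical override (defaults to /sys).
disable_turbo_and_smt() {
  local cpu="${SYSFS_ROOT:-/sys}/devices/system/cpu"
  if [ -e "$cpu/intel_pstate/no_turbo" ]; then
    echo 1 > "$cpu/intel_pstate/no_turbo"   # intel_pstate: 1 = turbo disabled
  elif [ -e "$cpu/cpufreq/boost" ]; then
    echo 0 > "$cpu/cpufreq/boost"           # e.g. acpi-cpufreq: 0 = boost disabled
  fi
  if [ -e "$cpu/smt/control" ]; then
    echo off > "$cpu/smt/control"           # takes the sibling threads offline
  fi
}
```

Disabling these features in the BIOS instead has the advantage that the setting survives reboots without any script.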

Disabling the idle states of the processor is essential for low latencies (see the section below for actual benchmarks). This can be done in several different ways, as described here:

- One can use kernel parameters such as `intel_idle.max_cstate=0` for disabling the Intel driver or `processor.max_cstate=0 idle=poll` for keeping the processor constantly in `C0`. These approaches are permanent and can only be changed after a reboot.

- One can control them dynamically through the `/dev/cpu_dma_latency` file: one opens it and writes the maximum allowable latency. The latency values of the different states can be determined with the following commands:

  ```shell
  $ cd /sys/devices/system/cpu/cpu0/cpuidle
  $ for state in state{0..4} ; do echo c-$state `cat $state/name` `cat $state/latency` ; done
  ```

  If `0` is written, the processor is kept in `C0`. It is important that the file is kept open: as soon as it is closed, the processor is allowed to idle again. The file holds a raw binary 32-bit integer, which can be displayed as a decimal value (reversing the little-endian byte order) with:

  ```shell
  $ HEX_LATENCY=$(sudo xxd -p /dev/cpu_dma_latency | tac -rs .. | echo "$(tr -d '\n')") && echo $((0x${HEX_LATENCY}))
  ```

- Finally, one can also set the C-states for each core independently and dynamically by writing `1` (disable) or `0` (enable) to `/sys/devices/system/cpu/cpu${i}/cpuidle/${cstate}/disable` for each CPU core `${i}` from `0` to `$(nproc)-1` and each `${cstate}` in `ls /sys/devices/system/cpu/cpu0/cpuidle | grep state`.
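The per-core approach of the last item can be sketched as a loop over all idle states of all cores. In this sketch (function names hypothetical) `state0` is deliberately left enabled so that every core keeps its shallowest state; this is a choice made for the sketch, not part of the description above. `le_hex_to_dec` is a pure-Bash alternative to the `tac` pipeline above for turning the little-endian hex dump printed by `xxd -p` into a decimal number, and `SYSFS_ROOT` is a hypothetical override for testing:

```shell
#!/usr/bin/env bash
# Disable all idle states except state0 on every core via the cpuidle sysfs interface.
# Must be run as root. SYSFS_ROOT is a hypothetical override (defaults to /sys).
disable_cstates() {
  local cpu_dir="${SYSFS_ROOT:-/sys}/devices/system/cpu"
  local state
  for state in "$cpu_dir"/cpu[0-9]*/cpuidle/state[0-9]*; do
    [ -e "$state/disable" ] || continue       # glob did not match anything
    [ "${state##*/}" = state0 ] && continue   # keep the shallowest state enabled
    echo 1 > "$state/disable"                 # 1 = disabled, 0 = enabled
  done
}

# Convert the little-endian hex dump printed by "xxd -p" into a decimal value,
# e.g. "d0070000" -> 2000.
le_hex_to_dec() {
  local hex="$1" be=""
  while [ -n "$hex" ]; do
    be="${hex:0:2}${be}"   # prepend each byte to reverse the byte order
    hex="${hex:2}"
  done
  echo $(( 16#${be:-0} ))
}
```

For instance, `le_hex_to_dec "$(sudo xxd -p /dev/cpu_dma_latency)"` prints the current latency target, and running `disable_cstates` in a root shell disables the deeper states until they are re-enabled by writing `0` or the system is rebooted.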

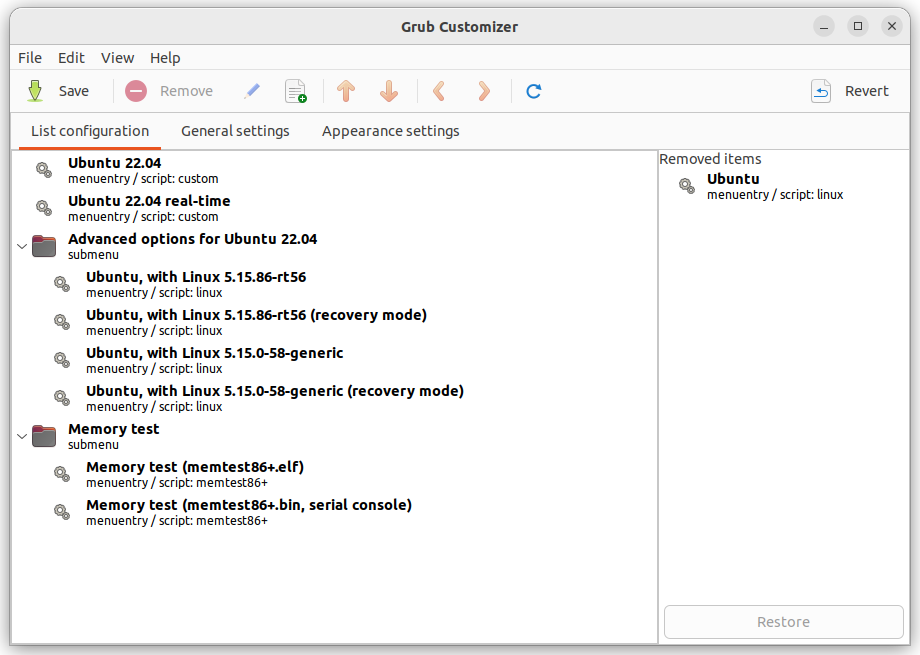

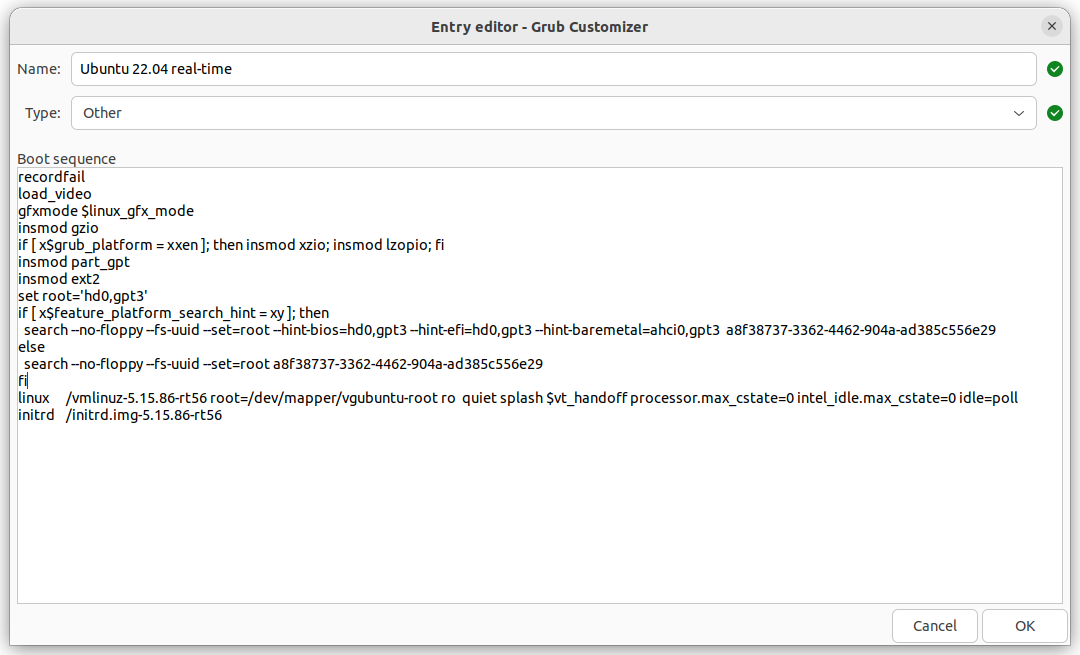

I personally prefer the first option as when I am working on real-time relevant code I do not want to call a script every time. Instead I will boot into a dedicated kernel with the corresponding kernel parameters activated. I will generate another boot entry using Grub-Customizer (see screenshots below), called Ubuntu 22.04 real-time where I basically clone the X.XX.XX-rtXX configuration adding processor.max_cstate=0 intel_idle.max_cstate=0 idle=poll (see second-last line of the screenshot on the right).

|

|

|---|---|

| Grub configuration | Disabling C-states just for the PREEMPT_RT kernel |

After booting you can double-check the settings with `$ cat /proc/cmdline`.
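To make this check less error-prone, a small helper can compare the kernel command line against the expected parameters. A minimal sketch with a hypothetical function name; it takes the command-line file as its first argument (normally `/proc/cmdline`) and reports every missing parameter:

```shell
#!/usr/bin/env bash
# Check that each expected kernel parameter appears on the kernel command line.
# Usage: check_cmdline cmdline_file param...
# Returns 0 if all parameters are present, 1 otherwise.
check_cmdline() {
  local file="$1"
  shift
  local param missing=0
  for param in "$@"; do
    # Pad with spaces so that e.g. "idle=poll" does not match "idle=pollx"
    if [[ " $(<"$file") " != *" $param "* ]]; then
      echo "missing: $param"
      missing=1
    fi
  done
  return "$missing"
}
```

For the boot entry described above this would be `check_cmdline /proc/cmdline processor.max_cstate=0 intel_idle.max_cstate=0 idle=poll && echo "all set"`.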

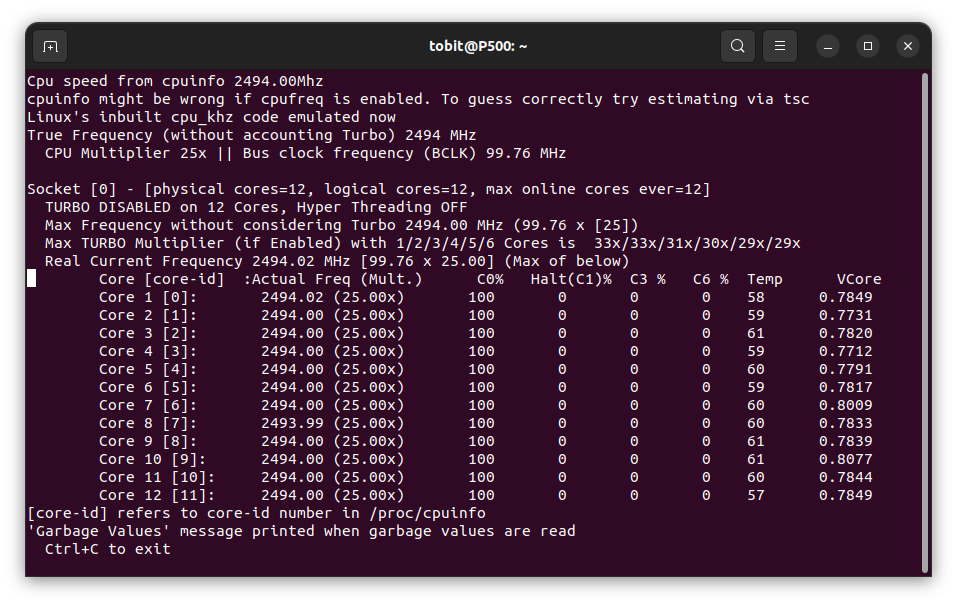

Additionally i7z can be very useful for monitoring the C-states. After installing it with

$ sudo apt-get install i7zcontinue to run it with

$ sudo i7zIt will show you the percentage of time that each individual core is spending in a particular C-state:

Additionally there is cpupower. The problem with it, though, is that it is specific to a kernel: it has to be installed through `$ sudo apt-get install linux-tools-$(uname -r)` and is generally not available for a real-time kernel that you compiled from source, meaning you would have to compile it as well when compiling the kernel!
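Without installing any additional tools, a rough picture can also be obtained from the cumulative residency counters that the cpuidle sysfs interface exposes for every core and state (`name` and `time`, the latter in microseconds). The sketch below is not equivalent to i7z: it reports each state's share of the idle time accumulated since boot rather than live percentages that include `C0`. The function name is hypothetical and `SYSFS_ROOT` is a hypothetical override for testing:

```shell
#!/usr/bin/env bash
# Print, for every core, the share of the accumulated idle residency spent in each
# idle state, based on the cumulative "time" counters (in microseconds) in sysfs.
# SYSFS_ROOT is a hypothetical override (defaults to /sys) for testing.
cstate_residency() {
  local cpu state total t
  for cpu in "${SYSFS_ROOT:-/sys}"/devices/system/cpu/cpu[0-9]*; do
    [ -d "$cpu/cpuidle" ] || continue
    total=0
    for state in "$cpu"/cpuidle/state[0-9]*; do
      (( total += $(<"$state/time") ))
    done
    (( total > 0 )) || continue   # avoid dividing by zero
    for state in "$cpu"/cpuidle/state[0-9]*; do
      t=$(<"$state/time")
      printf '%s %s %d%%\n' "${cpu##*/}" "$(<"$state/name")" $(( 100 * t / total ))
    done
  done
}
```

After disabling the C-states, the deeper states should stop accumulating time; comparing two snapshots taken a few seconds apart gives a view closer to i7z.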

An important test for evaluating the latencies of a real-time system caused by hardware, firmware and the operating system as a whole is cyclictest. It repeatedly measures the difference between a thread's intended and actual wake-up time (see here for more details). For real-time purposes the worst-case latency is far more important than the average latency, as any missed deadline might be fatal. Ideally one would run it in combination with a stress test such as `$ stress-ng -c $(nproc)` and for an extended period of time (hours, days or even weeks).

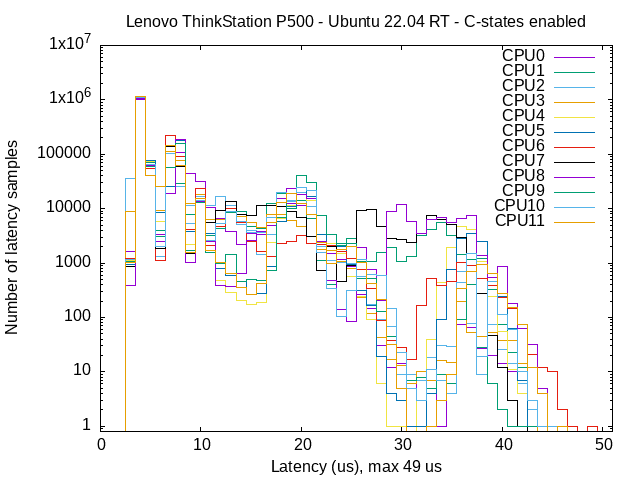

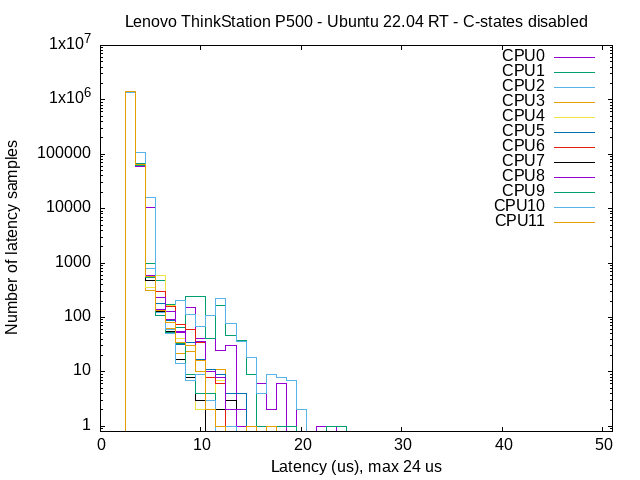

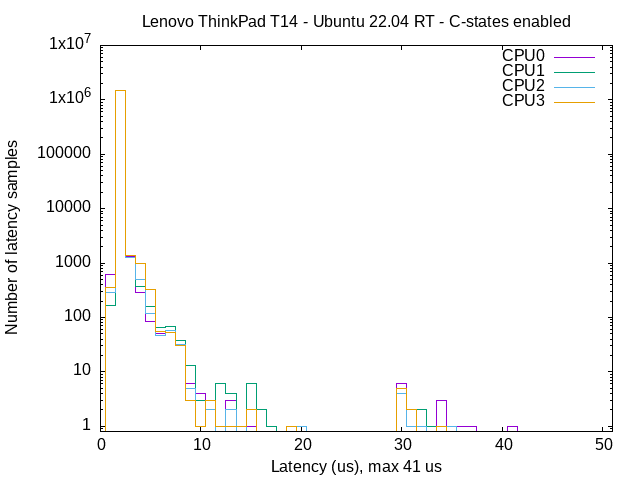

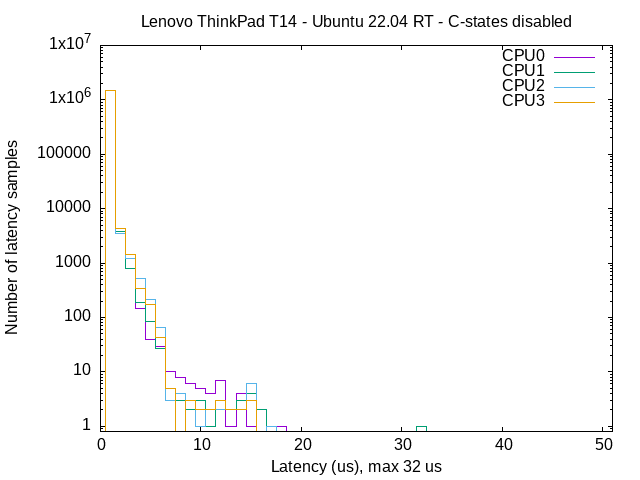

The effectiveness of turning the C-states off is evaluated for two systems, a Lenovo ThinkStation P500 tower (with an Intel(R) Xeon(R) E5-2680 v3 @ 2.50GHz twelve-core CPU and 32GB of ECC-DDR4-RAM) and a Lenovo ThinkPad T14 Gen1 notebook (with an Intel(R) Core(TM) i5-10210U quad-core CPU and 16GB of DDR4-RAM). In both cases a cyclictest with the following parameters is performed:

$ cyclictest --latency=750000 -D5m -m -Sp90 -i200 -h400 -qThe parameter latency here is essential as per default cyclictest writes to /dev/cpu_dma_latency, effectively disabling the C-states. This way one has a realistic overview of what the system might be capable of but not how another program will behave that does not use the same optimization!

The test duration with five minutes is quite short. For true real-time systems the system latency is generally investigated over the course of days or weeks (generally also applying stress tests to the system) as certain driver-related issues might only manifest themselves over time. Nonetheless the following test should be sufficient to illustrate the differences in latency.

Both systems use the same self-compiled version of an Ubuntu 22.04 5.15.86-rt56 kernel. Intel Turbo-boost is disabled in the BIOS. Furthermore all tests for Docker are ran with Docker-Compose directly (without Visual Studio Code in between). Finally for best performance the T14 notebook was plugged in during the entire test.

|

|

|---|---|

Lenovo ThinkStation P500 running PREEMPT_RT with C-states enabled |

Lenovo ThinkStation P500 running PREEMPT_RT with C-states disabled |

|

|

|---|---|

Lenovo ThinkPad T14 Gen1 running PREEMPT_RT with C-states enabled |

Lenovo ThinkPad T14 Gen1 running PREEMPT_RT with C-states disabled |

While latencies in the sub-100us region are generally more than enough for robotics, we can see that the latency is greatly reduced for both systems when deactivating the C-states, even halved in the case of the Lenovo ThinkStation P500. These differences are also visible when using Docker, as the latencies of real-time capable code run inside a Docker container are virtually indistinguishable from those of processes run on the host system. It should be mentioned that the T14 has a remarkable performance for a notebook (several notebooks I used suffered from severe and regular latency spikes in the 500us region), but when running the test for extended periods, rare latency spikes of up to two milliseconds were observed.
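Since the worst case is what matters, a small helper that extracts the overall maximum from a cyclictest run can be handy. The sketch below assumes the output of the cyclictest command above was redirected to a file and relies on the `# Max Latencies:` summary line that cyclictest prints in histogram mode (`-h`), which holds one zero-padded value per measurement thread in microseconds; the function name is hypothetical:

```shell
#!/usr/bin/env bash
# Print the worst-case latency (in us) over all threads of a cyclictest histogram output.
# Usage: max_latency output_file
max_latency() {
  awk 'BEGIN { max = 0 }
       /^# Max Latencies:/ {
         for (i = 4; i <= NF; i++)          # fields 1-3 are "#", "Max" and "Latencies:"
           if ($i + 0 > max) max = $i + 0   # "+ 0" turns e.g. "00025" into 25
       }
       END { print max }' "$1"
}
```

For example, `$ sudo cyclictest --latency=750000 -D5m -m -Sp90 -i200 -h400 -q > output` followed by `$ max_latency output`.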

For detecting system management interrupts (SMIs) we can use the hwlatdetect tool. It works by consuming all of the processor time ("hogging", leaving other processes unable to run!) while polling the CPU time stamp counter and looking for gaps, which indicate that an SMI was processed during that time frame.

You can run it with the following command as described here:

```shell
$ sudo hwlatdetect --duration=60s
```

It will then output a report at the end.

Typically one will reserve a CPU core for a particular real-time task, as described for Grub and Linux here and here. If hyper-threading is activated in the BIOS (not recommended), the corresponding sibling logical core has to be isolated as well. The indices of the second logical cores typically follow those of the physical ones; the siblings of a core can be checked in `/sys/devices/system/cpu/cpu<N>/topology/thread_siblings_list`.

You can isolate the CPUs by adding the following option (the numbers correspond to the list of CPUs we want to isolate):

```shell
GRUB_CMDLINE_LINUX_DEFAULT="isolcpus=1-3,5,7"
```

to `/etc/default/grub`, then update Grub with `$ sudo update-grub` and reboot.

Additionally, it makes sense to isolate the corresponding hardware interrupts (IRQs) on a dedicated CPU by disabling the irqbalance daemon, and to bind the process to a particular CPU, as described here. This is particularly crucial for applications that involve network and EtherCAT communication.
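The `/proc/irq/<irq_id>/smp_affinity` file expects a hexadecimal bitmask of allowed CPUs (bit n set means CPU n may handle the interrupt) rather than a core number. A minimal sketch converting a CPU list in the same notation as `isolcpus` into such a mask; the function name is hypothetical, and it deliberately only supports CPUs 0 to 31 so that the mask fits into a single 32-bit word (on larger systems the list-based `smp_affinity_list` file is simpler):

```shell
#!/usr/bin/env bash
# Convert a CPU list such as "1-3,5,7" into a hexadecimal CPU bitmask
# as used by /proc/irq/<irq_id>/smp_affinity. Supports CPUs 0 to 31 only.
cpu_list_to_mask() {
  local mask=0 range first last cpu
  local IFS=','                 # split the list at commas
  for range in $1; do
    first=${range%-*}           # "1-3" -> "1", "5" -> "5"
    last=${range#*-}            # "1-3" -> "3", "5" -> "5"
    for (( cpu = first; cpu <= last; cpu++ )); do
      if (( cpu > 31 )); then
        echo "CPU $cpu is not supported by this sketch" >&2
        return 1
      fi
      (( mask |= 1 << cpu ))
    done
  done
  printf '%x\n' "$mask"
}
```

For example, `cpu_list_to_mask 1-3,5,7` prints `ae`, and `cpu_list_to_mask 3 | sudo tee /proc/irq/<irq_id>/smp_affinity` would pin an interrupt to core 3.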

For this, disable irqbalance with:

```shell
$ sudo systemctl disable irqbalance
$ sudo systemctl stop irqbalance
```

Then check the interrupts with

```shell
$ cat /proc/interrupts
```

Note down the IRQ number at the beginning of the relevant line. You can then place the interrupt with the corresponding IRQ onto the core of your choice (the `smp_affinity_list` file takes a list of cores, whereas `smp_affinity` expects a hexadecimal bitmask):

```shell
$ echo <cpu_core> | sudo tee /proc/irq/<irq_id>/smp_affinity_list
```

Finally, we can also activate full dynticks and prohibit RCU callbacks from running on our isolated cores (as described here and here) by adding

```
nohz_full=1-3,5,7 rcu_nocbs=1-3,5,7
```

where the numbers correspond to the CPUs we would like to isolate.

Putting this together with the previous Grub configuration options leaves us with:

```shell
GRUB_CMDLINE_LINUX_DEFAULT="isolcpus=1-3,5,7 nohz_full=1-3,5,7 rcu_nocbs=1-3,5,7"
```
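Instead of editing the file by hand, the options can also be appended to the existing value with a small script. This is a minimal sketch with a hypothetical function name. It assumes GNU sed (for `-i`), that `GRUB_CMDLINE_LINUX_DEFAULT` is defined on a single line with straight double quotes, and that the parameters contain none of the characters `|`, `&` or `\`:

```shell
#!/usr/bin/env bash
# Append kernel parameters to GRUB_CMDLINE_LINUX_DEFAULT in a GRUB defaults file.
# Usage: add_grub_params file param...
add_grub_params() {
  local file="$1"
  shift
  # Insert the new parameters before the closing quote of the existing value
  sed -i "s|^\(GRUB_CMDLINE_LINUX_DEFAULT=\"[^\"]*\)\"|\1 $*\"|" "$file"
}
```

For example, run `add_grub_params /etc/default/grub isolcpus=1-3,5,7 nohz_full=1-3,5,7 rcu_nocbs=1-3,5,7` as root, followed by `$ sudo update-grub` and a reboot. Unlike overwriting the line, this keeps existing options such as `quiet splash`.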