changed

CHANGELOG.md

|

|

@@ -1,3 +1,22 @@

|

|

1

|

+ # 0.7.0 (April 23, 2017)

|

|

2

|

+

|

|

3

|

+ Smaller convenience features in here - the biggest part of work went into breaking reports in [benchee_html](https://github.com/PragTob/benchee_html) apart :)

|

|

4

|

+

|

|

5

|

+ ## Features (User Facing)

|

|

6

|

+ * the print out of the Erlang version now is less verbose (just major/minor)

|

|

7

|

+ * the fast_warning will now also tell you how to disable it

|

|

8

|

+ * When `print: [benchmarking: false]` is set, information about which input is being benchmarked at the moment also won't be printed

|

|

9

|

+ * generation of statistics parallelized (thanks hh.ex - @nesQuick and @dszam)

|

|

10

|

+

|

|

11

|

+ ## Breaking Changes (User Facing)

|

|

12

|

+ * If you use the more verbose interface (`Benchee.init` and friends, i.e. not `Benchee.run`) then you have to insert a `Benchee.system` call before `Benchee.measure` (preferably right after `Benchee.init`)

|

|

13

|

+

|

|

14

|

+ ## Features (Plugins)

|

|

15

|

+ * `Benchee.Utility.FileCreation.interleave/2` now also accepts a list of inputs which are then all interleaved in the file name appropriately. See the doctests for more details.

|

|

16

|

+

|

|

17

|

+ ## Breaking Changes (Plugins)

|

|

18

|

+ * `Benchee.measure/1` now also needs to have the system information generated by `Benchee.system/1` present if configuration information should be printed.
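A minimal sketch of why the `Benchee.system` step matters, assuming the printer matches on a `:system` key in the suite, as the `configuration_information/1` clauses in `lib/benchee/output/benchmark_printer.ex` in this diff do. `PrinterSketch` is a hypothetical stand-in written for illustration, not benchee's own module, and it returns a string instead of printing.

```elixir
# Hypothetical stand-in for the printer's pattern match; not benchee's code.
defmodule PrinterSketch do
  # Printing disabled: the suite is not inspected any further.
  def configuration_information(%{config: %{print: %{configuration: false}}}), do: nil

  # Printing enabled: the suite must carry a :system key.
  def configuration_information(%{jobs: _jobs, system: sys, config: _config}) do
    "Elixir #{sys.elixir}\nErlang #{sys.erlang}"
  end
end

config = %{print: %{configuration: true}}

# With system information present, the second clause matches.
with_system = %{jobs: %{}, config: config, system: %{elixir: "1.4.0", erlang: "19.1"}}
IO.puts PrinterSketch.configuration_information(with_system)

# Without it, no clause matches and a FunctionClauseError is raised.
without_system = %{jobs: %{}, config: config}

outcome =
  try do
    PrinterSketch.configuration_information(without_system)
  rescue
    FunctionClauseError -> :no_matching_clause
  end

IO.inspect outcome
```

In this sketch, a suite built without the system step only gets through when `print: [configuration: false]` is set, which is consistent with the wording of the breaking change above.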

|

|

19

|

+

|

|

1

20

|

# 0.6.0 (November 30, 2016)

|

|

2

21

|

|

|

3

22

|

One of the biggest releases yet. Great stuff in here - more elixir like API for `Benchee.run/2` with the jobs as the primary argument and the optional options as the second argument and now also as the more idiomatic keyword list!

|

changed

README.md

|

|

@@ -1,7 +1,9 @@

|

|

1

|

- # Benchee [](https://hex.pm/packages/benchee) [](https://hexdocs.pm/benchee/) [](https://inch-ci.org/github/PragTob/benchee) [](https://travis-ci.org/PragTob/benchee)

|

|

1

|

+ # Benchee [](https://hex.pm/packages/benchee) [](https://hexdocs.pm/benchee/) [](https://inch-ci.org/github/PragTob/benchee) [](https://travis-ci.org/PragTob/benchee) [](https://coveralls.io/github/PragTob/benchee?branch=master)

|

|

2

2

|

|

|

3

3

|

Library for easy and nice (micro) benchmarking in Elixir. It allows you to compare the performance of different pieces of code at a glance. Benchee is also versatile and extensible, relying only on functions - no macros! There are also a bunch of [plugins](#plugins) to draw pretty graphs and more!

|

|

4

4

|

|

|

5

|

+ Benchee runs each of your functions for a given amount of time after an initial warmup. It uses the raw run times it could gather in that time to show different statistical values like average, iterations per second and the standard deviation.

|

|

6

|

+

|

|

5

7

|

Benchee has a nice and concise main interface, and its behavior can be altered through lots of [configuration options](#configuration):

|

|

6

8

|

|

|

7

9

|

```elixir

|

|

|

@@ -11,37 +13,37 @@ map_fun = fn(i) -> [i, i * i] end

|

|

11

13

|

Benchee.run(%{

|

|

12

14

|

"flat_map" => fn -> Enum.flat_map(list, map_fun) end,

|

|

13

15

|

"map.flatten" => fn -> list |> Enum.map(map_fun) |> List.flatten end

|

|

14

|

- }, time: 3)

|

|

16

|

+ }, time: 10)

|

|

15

17

|

```

|

|

16

18

|

|

|

17

19

|

Produces the following output on the console:

|

|

18

20

|

|

|

19

21

|

```

|

|

20

|

- tobi@happy ~/github/benchee $ mix run samples/run.exs

|

|

21

|

- Erlang/OTP 19 [erts-8.1] [source] [64-bit] [smp:8:8] [async-threads:10] [hipe] [kernel-poll:false]

|

|

22

|

- Elixir 1.3.4

|

|

22

|

+ tobi@speedy ~/github/benchee $ mix run samples/run.exs

|

|

23

|

+ Elixir 1.4.0

|

|

24

|

+ Erlang 19.1

|

|

23

25

|

Benchmark suite executing with the following configuration:

|

|

24

26

|

warmup: 2.0s

|

|

25

|

- time: 3.0s

|

|

27

|

+ time: 10.0s

|

|

26

28

|

parallel: 1

|

|

27

29

|

inputs: none specified

|

|

28

|

- Estimated total run time: 10.0s

|

|

30

|

+ Estimated total run time: 24.0s

|

|

29

31

|

|

|

30

32

|

Benchmarking flat_map...

|

|

31

33

|

Benchmarking map.flatten...

|

|

32

34

|

|

|

33

35

|

Name ips average deviation median

|

|

34

|

- map.flatten 1.04 K 0.96 ms ±21.82% 0.90 ms

|

|

35

|

- flat_map 0.66 K 1.51 ms ±16.98% 1.50 ms

|

|

36

|

+ flat_map 2.29 K 437.22 μs ±17.32% 418.00 μs

|

|

37

|

+ map.flatten 1.28 K 778.50 μs ±15.92% 767.00 μs

|

|

36

38

|

|

|

37

39

|

Comparison:

|

|

38

|

- map.flatten 1.04 K

|

|

39

|

- flat_map 0.66 K - 1.56x slower

|

|

40

|

+ flat_map 2.29 K

|

|

41

|

+ map.flatten 1.28 K - 1.78x slower

|

|

40

42

|

```
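The "Estimated total run time" line can be reproduced by hand. The sketch below mirrors the arithmetic of `suite_information/2` from this diff (jobs × inputs × (warmup + time)); the module name `RunTimeSketch` is made up for illustration, and times are passed in seconds as floats, which is a simplification.

```elixir
# Hypothetical helper mirroring the estimate's arithmetic; not benchee's code.
defmodule RunTimeSketch do
  # No inputs configured still means one run per job.
  def inputs_count(nil), do: 1
  def inputs_count(inputs), do: map_size(inputs)

  # All times are floats in seconds; Float.round/2 requires a float.
  def estimated_total(job_count, inputs, warmup_seconds, time_seconds) do
    Float.round(job_count * inputs_count(inputs) * (warmup_seconds + time_seconds), 2)
  end
end

# Two jobs (flat_map, map.flatten), no inputs, warmup 2.0s, time 10.0s.
IO.puts RunTimeSketch.estimated_total(2, nil, 2.0, 10.0)
```

With `time: 10` this gives 2 × 1 × (2.0 + 10.0) = 24.0 seconds, the value in the new sample output; the old `time: 3` gives the previous 10.0 seconds.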

|

|

41

43

|

|

|

42

|

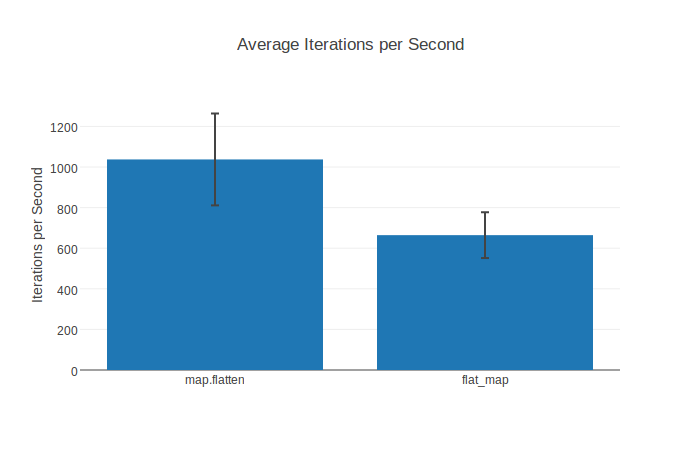

- The aforementioned [plugins](#plugins) like [benchee_html](https://github.com/PragTob/benchee_html) make it possible to generate nice looking [html reports](https://www.pragtob.info/benchee/flat_map.html) and export graphs as png images like this IPS comparison chart with standard deviation:

|

|

44

|

+ The aforementioned [plugins](#plugins) like [benchee_html](https://github.com/PragTob/benchee_html) make it possible to generate nice looking [html reports](https://www.pragtob.info/benchee/flat_map.html), where individual graphs can also be exported as PNG images:

|

|

43

45

|

|

|

44

|

-

|

|

46

|

+

|

|

45

47

|

|

|

46

48

|

## Features

|

|

47

49

|

|

|

|

@@ -71,7 +73,7 @@ Benchee only has a small runtime dependency on `deep_merge` for merging configur

|

|

71

73

|

Add benchee to your list of dependencies in `mix.exs`:

|

|

72

74

|

|

|

73

75

|

```elixir

|

|

74

|

- def deps do

|

|

76

|

+ defp deps do

|

|

75

77

|

[{:benchee, "~> 0.6", only: :dev}]

|

|

76

78

|

end

|

|

77

79

|

```

|

|

|

@@ -89,32 +91,32 @@ map_fun = fn(i) -> [i, i * i] end

|

|

89

91

|

Benchee.run(%{

|

|

90

92

|

"flat_map" => fn -> Enum.flat_map(list, map_fun) end,

|

|

91

93

|

"map.flatten" => fn -> list |> Enum.map(map_fun) |> List.flatten end

|

|

92

|

- }, time: 3)

|

|

94

|

+ })

|

|

93

95

|

```

|

|

94

96

|

|

|

95

97

|

This produces the following output:

|

|

96

98

|

|

|

97

99

|

```

|

|

98

|

- tobi@happy ~/github/benchee $ mix run samples/run.exs

|

|

99

|

- Erlang/OTP 19 [erts-8.1] [source] [64-bit] [smp:8:8] [async-threads:10] [hipe] [kernel-poll:false]

|

|

100

|

- Elixir 1.3.4

|

|

100

|

+ tobi@speedy ~/github/benchee $ mix run samples/run.exs

|

|

101

|

+ Elixir 1.4.0

|

|

102

|

+ Erlang 19.1

|

|

101

103

|

Benchmark suite executing with the following configuration:

|

|

102

104

|

warmup: 2.0s

|

|

103

|

- time: 3.0s

|

|

105

|

+ time: 5.0s

|

|

104

106

|

parallel: 1

|

|

105

107

|

inputs: none specified

|

|

106

|

- Estimated total run time: 10.0s

|

|

108

|

+ Estimated total run time: 14.0s

|

|

107

109

|

|

|

108

110

|

Benchmarking flat_map...

|

|

109

111

|

Benchmarking map.flatten...

|

|

110

112

|

|

|

111

113

|

Name ips average deviation median

|

|

112

|

- map.flatten 1.27 K 0.79 ms ±15.34% 0.76 ms

|

|

113

|

- flat_map 0.85 K 1.18 ms ±6.00% 1.23 ms

|

|

114

|

+ flat_map 2.28 K 438.07 μs ±16.66% 419.00 μs

|

|

115

|

+ map.flatten 1.25 K 802.99 μs ±13.40% 782.00 μs

|

|

114

116

|

|

|

115

117

|

Comparison:

|

|

116

|

- map.flatten 1.27 K

|

|

117

|

- flat_map 0.85 K - 1.49x slower

|

|

118

|

+ flat_map 2.28 K

|

|

119

|

+ map.flatten 1.25 K - 1.83x slower

|

|

118

120

|

```
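As a rough cross-check of the table above, the sketch below assumes that `ips` is the reciprocal of the average run time and that the "slower" factor is the ratio of the two `ips` values. These relations are an assumption for illustration; the module `StatsSketch` is hypothetical and not part of benchee.

```elixir
# Hypothetical helpers relating average run time, ips and the comparison factor.
defmodule StatsSketch do
  # Iterations per second for an average run time given in microseconds.
  def ips(average_microseconds), do: 1_000_000 / average_microseconds

  # How many times slower a job is than the fastest one, rounded to two places.
  def slower_factor(fastest_ips, ips), do: Float.round(fastest_ips / ips, 2)
end

flat_map = StatsSketch.ips(438.07)
map_flatten = StatsSketch.ips(802.99)

# Values in thousands ("K"), rounded like the console table.
IO.puts Float.round(flat_map / 1000, 2)
IO.puts Float.round(map_flatten / 1000, 2)
IO.puts StatsSketch.slower_factor(flat_map, map_flatten)
```

Under these assumptions the averages 438.07 μs and 802.99 μs lead to 2.28 K and 1.25 K iterations per second and a factor of 1.83, in line with the printed comparison.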

|

|

119

121

|

|

|

120

122

|

See [Features](#features) for a description of the different statistical values and what they mean.

|

|

|

@@ -134,7 +136,7 @@ The available options are the following (also documented in [hexdocs](https://he

|

|

134

136

|

* `warmup` - the time in seconds for which a benchmark should be run without measuring times before real measurements start. This simulates a _"warm"_ running system. Defaults to 2.

|

|

135

137

|

* `time` - the time in seconds for how long each individual benchmark should be run and measured. Defaults to 5.

|

|

136

138

|

* `inputs` - a map from descriptive input names to some different input, your benchmarking jobs will then be run with each of these inputs. For this to work your benchmarking function gets the current input passed in as an argument into the function. Defaults to `nil`, aka no input specified and functions are called without an argument. See [Inputs](#inputs)

|

|

137

|

- * `parallel` - each job will be executed in `parallel` number processes. Gives you more data in the same time, but also puts a load on the system interfering with benchmark results. For more on the pros and cons of parallel benchmarking [check the wiki](https://github.com/PragTob/benchee/wiki/Parallel-Benchmarking). Defaults to 1.

|

|

139

|

+ * `parallel` - the function of each job will be executed in `parallel` processes. If `parallel` is `4`, then 4 processes will be spawned that all execute the _same_ function for the given time. When these finish or the time is up, 4 new processes will be spawned for the next job/function. This gives you more data in the same time, but also puts a load on the system, interfering with benchmark results. For more on the pros and cons of parallel benchmarking [check the wiki](https://github.com/PragTob/benchee/wiki/Parallel-Benchmarking). Defaults to 1 (no parallel execution).

|

|

138

140

|

* `formatters` - list of formatter functions you'd like to run to output the benchmarking results of the suite when using `Benchee.run/2`. Functions need to accept one argument (which is the benchmarking suite with all data) and then use that to produce output. Used for plugins. Defaults to the builtin console formatter calling `Benchee.Formatters.Console.output/1`. See [Formatters](#formatters)

|

|

139

141

|

* `print` - a map from atoms to `true` or `false` to configure if the output identified by the atom will be printed during the standard Benchee benchmarking process. All options are enabled by default (true). Options are:

|

|

140

142

|

* `:benchmarking` - print when Benchee starts benchmarking a new job (Benchmarking name ..)

|

|

|

@@ -253,7 +255,19 @@ Benchee.run(%{

|

|

253

255

|

|

|

254

256

|

```

|

|

255

257

|

|

|

256

|

- ### More expanded/verbose usage

|

|

258

|

+ ### Setup and teardown

|

|

259

|

+

|

|

260

|

+ If you want to do setup and teardown, i.e. do something before or after the benchmarking suite executes, this is very easy in benchee. As benchee is just plain old functions, just do it before/after you call benchee:

|

|

261

|

+

|

|

262

|

+ ```elixir

|

|

263

|

+ your_setup()

|

|

264

|

+

|

|

265

|

+ Benchee.run %{"Awesome stuff" => fn -> magic end }

|

|

266

|

+

|

|

267

|

+ your_teardown()

|

|

268

|

+ ```

|

|

269

|

+

|

|

270

|

+ ### More verbose usage

|

|

257

271

|

|

|

258

272

|

It is important to note that the benchmarking code shown before is the convenience interface. The same benchmark in its more verbose form looks like this:

|

|

259

273

|

|

|

|

@@ -262,6 +276,7 @@ list = Enum.to_list(1..10_000)

|

|

262

276

|

map_fun = fn(i) -> [i, i * i] end

|

|

263

277

|

|

|

264

278

|

Benchee.init(time: 3)

|

|

279

|

+ |> Benchee.system

|

|

265

280

|

|> Benchee.benchmark("flat_map", fn -> Enum.flat_map(list, map_fun) end)

|

|

266

281

|

|> Benchee.benchmark("map.flatten",

|

|

267

282

|

fn -> list |> Enum.map(map_fun) |> List.flatten end)

|

|

|

@@ -293,10 +308,20 @@ Packages that work with Benchee to provide additional functionality.

|

|

293

308

|

* [benchee_csv](//github.com/PragTob/benchee_csv) - generate CSV from your Benchee benchmark results so you can import them into your favorite spreadsheet tool and make fancy graphs

|

|

294

309

|

* [benchee_json](//github.com/PragTob/benchee_json) - export suite results as JSON to feed anywhere or feed it to your JavaScript and make magic happen :)

|

|

295

310

|

|

|

296

|

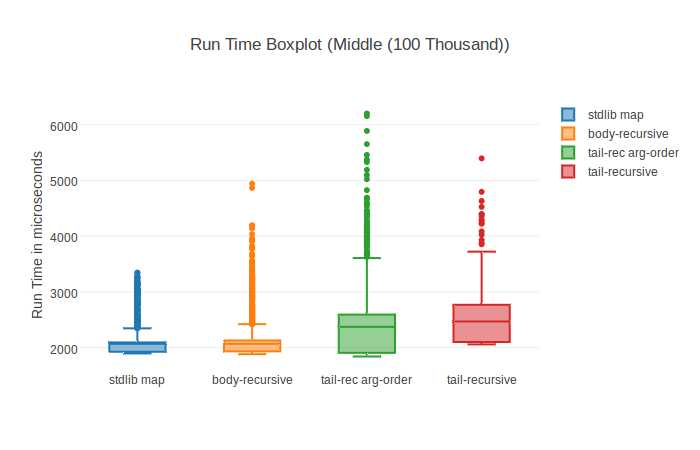

- With the HTML plugin for instance you can get fancy graphs like this boxplot (but normal bar chart is there as well):

|

|

311

|

+ With the HTML plugin for instance you can get fancy graphs like this boxplot:

|

|

297

312

|

|

|

298

313

|

|

|

299

314

|

|

|

315

|

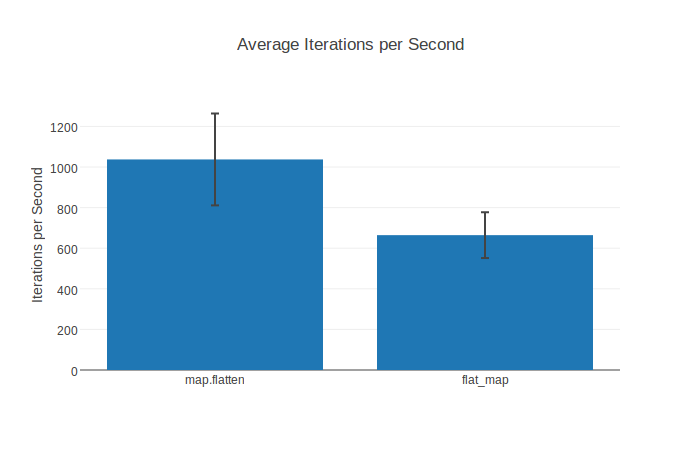

+ Of course there also are normal bar charts including standard deviation:

|

|

316

|

+

|

|

317

|

+

|

|

318

|

+

|

|

319

|

+ ## Presentation + general benchmarking advice

|

|

320

|

+

|

|

321

|

+ If you're into watching videos of conference talks and also want to learn more about benchmarking in general I can recommend watching my talk from [ElixirLive 2016](https://www.elixirlive.com/). [Slides can be found here](https://pragtob.wordpress.com/2016/12/03/slides-how-fast-is-it-really-benchmarking-in-elixir/), video - click the washed out image below ;)

|

|

322

|

+

|

|

323

|

+ [](https://www.youtube.com/watch?v=7-mE5CKXjkw)

|

|

324

|

+

|

|

300

325

|

## Contributing

|

|

301

326

|

|

|

302

327

|

Contributions to Benchee are very welcome! Bug reports, documentation, spelling corrections, whole features, feature ideas, bugfixes, new plugins, fancy graphics... all of those (and probably more) are much appreciated contributions!

|

changed

hex_metadata.config

|

|

@@ -10,8 +10,10 @@

|

|

10

10

|

<<"lib/benchee/conversion/duration.ex">>,

|

|

11

11

|

<<"lib/benchee/conversion/format.ex">>,

|

|

12

12

|

<<"lib/benchee/conversion/scale.ex">>,<<"lib/benchee/conversion/unit.ex">>,

|

|

13

|

- <<"lib/benchee/formatters/console.ex">>,<<"lib/benchee/statistics.ex">>,

|

|

14

|

- <<"lib/benchee/system.ex">>,<<"lib/benchee/utility/deep_convert.ex">>,

|

|

13

|

+ <<"lib/benchee/formatters/console.ex">>,

|

|

14

|

+ <<"lib/benchee/output/benchmark_printer.ex">>,

|

|

15

|

+ <<"lib/benchee/statistics.ex">>,<<"lib/benchee/system.ex">>,

|

|

16

|

+ <<"lib/benchee/utility/deep_convert.ex">>,

|

|

15

17

|

<<"lib/benchee/utility/file_creation.ex">>,

|

|

16

18

|

<<"lib/benchee/utility/map_value.ex">>,

|

|

17

19

|

<<"lib/benchee/utility/repeat_n.ex">>,<<"mix.exs">>,<<"README.md">>,

|

|

|

@@ -27,4 +29,4 @@

|

|

27

29

|

{<<"name">>,<<"deep_merge">>},

|

|

28

30

|

{<<"optional">>,false},

|

|

29

31

|

{<<"requirement">>,<<"~> 0.1">>}]]}.

|

|

30

|

- {<<"version">>,<<"0.6.0">>}.

|

|

32

|

+ {<<"version">>,<<"0.7.0">>}.

|

changed

lib/benchee.ex

|

|

@@ -4,8 +4,6 @@ defmodule Benchee do

|

|

4

4

|

as the very high level `Benchee.run` API.

|

|

5

5

|

"""

|

|

6

6

|

|

|

7

|

- alias Benchee.{Statistics, Config, Benchmark}

|

|

8

|

-

|

|

9

7

|

@doc """

|

|

10

8

|

Run benchmark jobs defined by a map and optionally provide configuration

|

|

11

9

|

options.

|

|

|

@@ -34,55 +32,33 @@ defmodule Benchee do

|

|

34

32

|

end

|

|

35

33

|

|

|

36

34

|

defp do_run(jobs, config) do

|

|

37

|

- suite = run_benchmarks jobs, config

|

|

38

|

- output_results suite

|

|

39

|

- suite

|

|

35

|

+ jobs

|

|

36

|

+ |> run_benchmarks(config)

|

|

37

|

+ |> output_results

|

|

40

38

|

end

|

|

41

39

|

|

|

42

40

|

defp run_benchmarks(jobs, config) do

|

|

43

41

|

config

|

|

44

42

|

|> Benchee.init

|

|

45

|

- |> Benchee.System.system

|

|

43

|

+ |> Benchee.system

|

|

46

44

|

|> Map.put(:jobs, jobs)

|

|

47

45

|

|> Benchee.measure

|

|

48

|

- |> Statistics.statistics

|

|

46

|

+ |> Benchee.statistics

|

|

49

47

|

end

|

|

50

48

|

|

|

51

49

|

defp output_results(suite = %{config: %{formatters: formatters}}) do

|

|

52

50

|

Enum.each formatters, fn(output_function) ->

|

|

53

51

|

output_function.(suite)

|

|

54

52

|

end

|

|

53

|

+ suite

|

|

55

54

|

end

|

|

56

55

|

|

|

57

|

- @doc """

|

|

58

|

- Convenience access to `Benchee.Config.init/1` to initialize the configuration.

|

|

59

|

- """

|

|

60

|

- def init(config \\ %{}) do

|

|

61

|

- Config.init(config)

|

|

62

|

- end

|

|

63

|

-

|

|

64

|

- @doc """

|

|

65

|

- Convenience access to `Benchee.Benchmark.benchmark/3` to define the benchmarks

|

|

66

|

- to run in this benchmarking suite.

|

|

67

|

- """

|

|

68

|

- def benchmark(suite, name, function) do

|

|

69

|

- Benchmark.benchmark(suite, name, function)

|

|

70

|

- end

|

|

71

|

-

|

|

72

|

-

|

|

73

|

- @doc """

|

|

74

|

- Convenience access to `Benchee.Benchmark.measure/1` to run the defined

|

|

75

|

- benchmarks and measure their run time.

|

|

76

|

- """

|

|

77

|

- def measure(suite) do

|

|

78

|

- Benchmark.measure(suite)

|

|

79

|

- end

|

|

80

|

-

|

|

81

|

- @doc """

|

|

82

|

- Convenience access to `Benchee.Statistics.statistics/1` to generate

|

|

83

|

- statistics.

|

|

84

|

- """

|

|

85

|

- def statistics(suite) do

|

|

86

|

- Statistics.statistics(suite)

|

|

87

|

- end

|

|

56

|

+ defdelegate init(), to: Benchee.Config

|

|

57

|

+ defdelegate init(config), to: Benchee.Config

|

|

58

|

+ defdelegate system(suite), to: Benchee.System

|

|

59

|

+ defdelegate measure(suite), to: Benchee.Benchmark

|

|

60

|

+ defdelegate measure(suite, printer), to: Benchee.Benchmark

|

|

61

|

+ defdelegate benchmark(suite, name, function), to: Benchee.Benchmark

|

|

62

|

+ defdelegate benchmark(suite, name, function, printer), to: Benchee.Benchmark

|

|

63

|

+ defdelegate statistics(suite), to: Benchee.Statistics

|

|

88

64

|

end
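The hand-written convenience wrappers are replaced by `defdelegate` here. A minimal, self-contained sketch of that pattern follows; `ConfigSketch` and `FacadeSketch` are hypothetical modules, and the default values are simplified placeholders rather than benchee's real configuration. It also shows why `init` is delegated twice: `defdelegate` takes no default arguments, so each arity gets its own line.

```elixir
# Hypothetical implementation module with a default argument (init/0 and init/1).
defmodule ConfigSketch do
  def init(config \\ %{}) do
    # Simplified defaults for illustration only.
    %{config: Map.merge(%{time: 5, warmup: 2}, config), jobs: %{}}
  end
end

# Hypothetical facade: each arity is forwarded with its own defdelegate.
defmodule FacadeSketch do
  defdelegate init(), to: ConfigSketch
  defdelegate init(config), to: ConfigSketch
end

IO.inspect FacadeSketch.init()
IO.inspect FacadeSketch.init(%{time: 10})
```

The facade behaves like the previous `def init(config \\ %{}), do: Config.init(config)` wrapper, just without a function body to maintain.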

|

changed

lib/benchee/benchmark.ex

|

|

@@ -6,16 +6,16 @@ defmodule Benchee.Benchmark do

|

|

6

6

|

"""

|

|

7

7

|

|

|

8

8

|

alias Benchee.Utility.RepeatN

|

|

9

|

- alias Benchee.Conversion.Duration

|

|

9

|

+ alias Benchee.Output.BenchmarkPrinter, as: Printer

|

|

10

10

|

|

|

11

11

|

@doc """

|

|

12

12

|

Adds the given function and its associated name to the benchmarking jobs to

|

|

13

13

|

be run in this benchmarking suite as a tuple `{name, function}` to the list

|

|

14

14

|

under the `:jobs` key.

|

|

15

15

|

"""

|

|

16

|

- def benchmark(suite = %{jobs: jobs}, name, function) do

|

|

16

|

+ def benchmark(suite = %{jobs: jobs}, name, function, printer \\ Printer) do

|

|

17

17

|

if Map.has_key?(jobs, name) do

|

|

18

|

- IO.puts "You already have a job defined with the name \"#{name}\", you can't add two jobs with the same name!"

|

|

18

|

+ printer.duplicate_benchmark_warning name

|

|

19

19

|

suite

|

|

20

20

|

else

|

|

21

21

|

%{suite | jobs: Map.put(jobs, name, function)}

|

|

|

@@ -36,60 +36,13 @@ defmodule Benchee.Benchmark do

|

|

36

36

|

There will be `parallel` processes spawned executing the benchmark job in

|

|

37

37

|

parallel.

|

|

38

38

|

"""

|

|

39

|

- def measure(suite = %{jobs: jobs, config: config}) do

|

|

40

|

- print_configuration_information(jobs, config)

|

|

41

|

- run_times = record_runtimes(jobs, config)

|

|

39

|

+ def measure(suite = %{jobs: jobs, config: config}, printer \\ Printer) do

|

|

40

|

+ printer.configuration_information(suite)

|

|

41

|

+ run_times = record_runtimes(jobs, config, printer)

|

|

42

42

|

|

|

43

43

|

Map.put suite, :run_times, run_times

|

|

44

44

|

end

|

|

45

45

|

|

|

46

|

- defp print_configuration_information(_, %{print: %{configuration: false}}) do

|

|

47

|

- nil

|

|

48

|

- end

|

|

49

|

- defp print_configuration_information(jobs, config) do

|

|

50

|

- print_system_information()

|

|

51

|

- print_suite_information(jobs, config)

|

|

52

|

- end

|

|

53

|

-

|

|

54

|

- defp print_system_information do

|

|

55

|

- IO.write :erlang.system_info(:system_version)

|

|

56

|

- IO.puts "Elixir #{System.version}"

|

|

57

|

- end

|

|

58

|

-

|

|

59

|

- defp print_suite_information(jobs, %{parallel: parallel,

|

|

60

|

- time: time,

|

|

61

|

- warmup: warmup,

|

|

62

|

- inputs: inputs}) do

|

|

63

|

- warmup_seconds = time_precision Duration.scale(warmup, :second)

|

|

64

|

- time_seconds = time_precision Duration.scale(time, :second)

|

|

65

|

- job_count = map_size jobs

|

|

66

|

- exec_time = warmup_seconds + time_seconds

|

|

67

|

- total_time = time_precision(job_count * inputs_count(inputs) * exec_time)

|

|

68

|

-

|

|

69

|

- IO.puts "Benchmark suite executing with the following configuration:"

|

|

70

|

- IO.puts "warmup: #{warmup_seconds}s"

|

|

71

|

- IO.puts "time: #{time_seconds}s"

|

|

72

|

- IO.puts "parallel: #{parallel}"

|

|

73

|

- IO.puts "inputs: #{inputs_out(inputs)}"

|

|

74

|

- IO.puts "Estimated total run time: #{total_time}s"

|

|

75

|

- IO.puts ""

|

|

76

|

- end

|

|

77

|

-

|

|

78

|

- defp inputs_count(nil), do: 1 # no input specified still executes

|

|

79

|

- defp inputs_count(inputs), do: map_size(inputs)

|

|

80

|

-

|

|

81

|

- defp inputs_out(nil), do: "none specified"

|

|

82

|

- defp inputs_out(inputs) do

|

|

83

|

- inputs

|

|

84

|

- |> Map.keys

|

|

85

|

- |> Enum.join(", ")

|

|

86

|

- end

|

|

87

|

-

|

|

88

|

- @round_precision 2

|

|

89

|

- defp time_precision(float) do

|

|

90

|

- Float.round(float, @round_precision)

|

|

91

|

- end

|

|

92

|

-

|

|

93

46

|

@no_input :__no_input

|

|

94

47

|

@no_input_marker {@no_input, @no_input}

|

|

95

48

|

|

|

|

@@ -98,55 +51,45 @@ defmodule Benchee.Benchmark do

|

|

98

51

|

"""

|

|

99

52

|

def no_input, do: @no_input

|

|

100

53

|

|

|

101

|

- defp record_runtimes(jobs, config = %{inputs: nil}) do

|

|

102

|

- [runtimes_for_input(@no_input_marker, jobs, config)]

|

|

54

|

+ defp record_runtimes(jobs, config = %{inputs: nil}, printer) do

|

|

55

|

+ [runtimes_for_input(@no_input_marker, jobs, config, printer)]

|

|

103

56

|

|> Map.new

|

|

104

57

|

end

|

|

105

|

- defp record_runtimes(jobs, config = %{inputs: inputs}) do

|

|

58

|

+ defp record_runtimes(jobs, config = %{inputs: inputs}, printer) do

|

|

106

59

|

inputs

|

|

107

|

- |> Enum.map(fn(input) -> runtimes_for_input(input, jobs, config) end)

|

|

60

|

+ |> Enum.map(fn(input) ->

|

|

61

|

+ runtimes_for_input(input, jobs, config, printer)

|

|

62

|

+ end)

|

|

108

63

|

|> Map.new

|

|

109

64

|

end

|

|

110

65

|

|

|

111

|

- defp runtimes_for_input({input_name, input}, jobs, config) do

|

|

112

|

- print_input_information(input_name)

|

|

66

|

+ defp runtimes_for_input({input_name, input}, jobs, config, printer) do

|

|

67

|

+ printer.input_information(input_name, config)

|

|

113

68

|

|

|

114

|

- results = jobs

|

|

115

|

- |> Enum.map(fn(job) -> measure_job(job, input, config) end)

|

|

116

|

- |> Map.new

|

|

69

|

+ results =

|

|

70

|

+ jobs

|

|

71

|

+ |> Enum.map(fn(job) -> measure_job(job, input, config, printer) end)

|

|

72

|

+ |> Map.new

|

|

117

73

|

|

|

118

74

|

{input_name, results}

|

|

119

75

|

end

|

|

120

76

|

|

|

121

|

- defp print_input_information(@no_input) do

|

|

122

|

- # noop

|

|

123

|

- end

|

|

124

|

- defp print_input_information(input_name) do

|

|

125

|

- IO.puts "\nBenchmarking with input #{input_name}:"

|

|

126

|

- end

|

|

127

|

-

|

|

128

|

- defp measure_job({name, function}, input, config) do

|

|

129

|

- print_benchmarking name, config

|

|

130

|

- job_run_times = parallel_benchmark function, input, config

|

|

77

|

+ defp measure_job({name, function}, input, config, printer) do

|

|

78

|

+ printer.benchmarking name, config

|

|

79

|

+ job_run_times = parallel_benchmark function, input, config, printer

|

|

131

80

|

{name, job_run_times}

|

|

132

81

|

end

|

|

133

82

|

|

|

134

|

- defp print_benchmarking(_, %{print: %{benchmarking: false}}) do

|

|

135

|

- nil

|

|

136

|

- end

|

|

137

|

- defp print_benchmarking(name, _config) do

|

|

138

|

- IO.puts "Benchmarking #{name}..."

|

|

139

|

- end

|

|

140

|

-

|

|

141

83

|

defp parallel_benchmark(function,

|

|

142

84

|

input,

|

|

143

85

|

%{parallel: parallel,

|

|

144

86

|

time: time,

|

|

145

87

|

warmup: warmup,

|

|

146

|

- print: %{fast_warning: fast_warning}}) do

|

|

88

|

+ print: %{fast_warning: fast_warning}},

|

|

89

|

+ printer) do

|

|

147

90

|

pmap 1..parallel, fn ->

|

|

148

|

- run_warmup function, input, warmup

|

|

149

|

- measure_runtimes function, input, time, fast_warning

|

|

91

|

+ run_warmup function, input, warmup, printer

|

|

92

|

+ measure_runtimes function, input, time, fast_warning, printer

|

|

150

93

|

end

|

|

151

94

|

end

|

|

152

95

|

|

|

|

@@ -157,19 +100,19 @@ defmodule Benchee.Benchmark do

|

|

157

100

|

|> List.flatten

|

|

158

101

|

end

|

|

159

102

|

|

|

160

|

- defp run_warmup(function, input, time) do

|

|

161

|

- measure_runtimes(function, input, time, false)

|

|

103

|

+ defp run_warmup(function, input, time, printer) do

|

|

104

|

+ measure_runtimes(function, input, time, false, printer)

|

|

162

105

|

end

|

|

163

106

|

|

|

164

|

- defp measure_runtimes(function, input, time, display_fast_warning)

|

|

165

|

- defp measure_runtimes(_function, _input, 0, _) do

|

|

107

|

+ defp measure_runtimes(function, input, time, display_fast_warning, printer)

|

|

108

|

+ defp measure_runtimes(_function, _input, 0, _, _) do

|

|

166

109

|

[]

|

|

167

110

|

end

|

|

168

|

-

|

|

169

|

- defp measure_runtimes(function, input, time, display_fast_warning) do

|

|

111

|

+ defp measure_runtimes(function, input, time, display_fast_warning, printer) do

|

|

170

112

|

finish_time = current_time() + time

|

|

171

113

|

:erlang.garbage_collect

|

|

172

|

- {n, initial_run_time} = determine_n_times(function, input, display_fast_warning)

|

|

114

|

+ {n, initial_run_time} =

|

|

115

|

+ determine_n_times(function, input, display_fast_warning, printer)

|

|

173

116

|

do_benchmark(finish_time, function, input, [initial_run_time], n, current_time())

|

|

174

117

|

end

|

|

175

118

|

|

|

|

@@ -182,12 +125,12 @@ defmodule Benchee.Benchmark do

|

|

182

125

|

# executed in the measurement cycle.

|

|

183

126

|

@minimum_execution_time 10

|

|

184

127

|

@times_multiplicator 10

|

|

185

|

- defp determine_n_times(function, input, display_fast_warning) do

|

|

128

|

+ defp determine_n_times(function, input, display_fast_warning, printer) do

|

|

186

129

|

run_time = measure_call function, input

|

|

187

130

|

if run_time >= @minimum_execution_time do

|

|

188

131

|

{1, run_time}

|

|

189

132

|

else

|

|

190

|

- if display_fast_warning, do: print_fast_warning()

|

|

133

|

+ if display_fast_warning, do: printer.fast_warning()

|

|

191

134

|

try_n_times(function, input, @times_multiplicator)

|

|

192

135

|

end

|

|

193

136

|

end

|

|

|

@@ -201,13 +144,6 @@ defmodule Benchee.Benchmark do

|

|

201

144

|

end

|

|

202

145

|

end

|

|

203

146

|

|

|

204

|

- @fast_warning """

|

|

205

|

- Warning: The function you are trying to benchmark is super fast, making measures more unreliable! See: https://github.com/PragTob/benchee/wiki/Benchee-Warnings#fast-execution-warning

|

|

206

|

- """

|

|

207

|

- defp print_fast_warning do

|

|

208

|

- IO.puts @fast_warning

|

|

209

|

- end

|

|

210

|

-

|

|

211

147

|

defp do_benchmark(finish_time, function, input, run_times, n, now)

|

|

212

148

|

defp do_benchmark(finish_time, _, _, run_times, _n, now)

|

|

213

149

|

when now > finish_time do
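The changes to this file thread a `printer` module through every function, defaulting to `Benchee.Output.BenchmarkPrinter`. One plausible motivation is that callers, tests in particular, can swap in a printer that does not write to the console. The sketch below illustrates that idea with made-up modules (`LoudPrinterSketch`, `QuietPrinterSketch`, `SuiteSketch`); only the shape of `benchmark/4` is taken from the diff.

```elixir
# Hypothetical default printer: writes the warning to the console.
defmodule LoudPrinterSketch do
  def duplicate_benchmark_warning(name), do: IO.puts("duplicate job: #{name}")
end

# Hypothetical replacement: records the warning as a message instead of printing.
defmodule QuietPrinterSketch do
  def duplicate_benchmark_warning(name), do: send(self(), {:duplicate, name})
end

defmodule SuiteSketch do
  # The printer is an ordinary module passed as a default argument.
  def benchmark(suite = %{jobs: jobs}, name, function, printer \\ LoudPrinterSketch) do
    if Map.has_key?(jobs, name) do
      printer.duplicate_benchmark_warning(name)
      suite
    else
      %{suite | jobs: Map.put(jobs, name, function)}
    end
  end
end

suite = %{jobs: %{}}
suite = SuiteSketch.benchmark(suite, "flat_map", fn -> :ok end, QuietPrinterSketch)
# Second call with the same name: the job is not added, the printer is notified.
suite = SuiteSketch.benchmark(suite, "flat_map", fn -> :other end, QuietPrinterSketch)

IO.inspect map_size(suite.jobs)
```

Because the printer is just a module argument, no mocking library is needed; any module exporting the same functions can stand in.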

|

changed

lib/benchee/config.ex

|

|

@@ -24,9 +24,15 @@ defmodule Benchee.Config do

|

|

24

24

|

to work your benchmarking function gets the current input passed in as an

|

|

25

25

|

argument into the function. Defaults to `nil`, aka no input specified and

|

|

26

26

|

functions are called without an argument.

|

|

27

|

- * `parallel` - each job will be executed in `parallel` number processes.

|

|

28

|

- Gives you more data in the same time, but also puts a load on the system

|

|

29

|

- interfering with benchmark results. Defaults to 1.

|

|

27

|

+ * `parallel` - the function of each job will be executed in

|

|

28

|

+ `parallel` number processes. If `parallel` is `4` then 4 processes will be

|

|

29

|

+ spawned that all execute the _same_ function for the given time. When these

|

|

30

|

+ finish/the time is up 4 new processes will be spawned for the next

|

|

31

|

+ job/function. This gives you more data in the same time, but also puts a

|

|

32

|

+ load on the system interfering with benchmark results. For more on the pros

|

|

33

|

+ and cons of parallel benchmarking [check the

|

|

34

|

+ wiki](https://github.com/PragTob/benchee/wiki/Parallel-Benchmarking).

|

|

35

|

+ Defaults to 1 (no parallel execution).

|

|

30

36

|

* `formatters` - list of formatter functions you'd like to run to output the

|

|

31

37

|

benchmarking results of the suite when using `Benchee.run/2`. Functions need

|

|

32

38

|

to accept one argument (which is the benchmarking suite with all data) and

|

changed

lib/benchee/conversion/format.ex

|

|

@@ -57,12 +57,12 @@ defmodule Benchee.Conversion.Format do

|

|

57

57

|

|> format(module)

|

|

58

58

|

end

|

|

59

59

|

|

|

60

|

- # Returns the separator defined in `module.separator/0`, or the default, a space

|

|

61

|

- defp separator(module) do

|

|

62

|

- case function_exported?(module, :separator, 0) do

|

|

63

|

- true -> module.separator()

|

|

64

|

- false -> " "

|

|

65

|

- end

|

|

60

|

+

|

|

61

|

+ @default_separator " "

|

|

62

|

+ # should we need it again, a custom separator could be returned

|

|

63

|

+ # per module here

|

|

64

|

+ defp separator(_module) do

|

|

65

|

+ @default_separator

|

|

66

66

|

end

|

|

67

67

|

|

|

68

68

|

# Returns the separator, or an empty string if there isn't a label

|

added

lib/benchee/output/benchmark_printer.ex

@@ -0,0 +1,101 @@
+defmodule Benchee.Output.BenchmarkPrinter do
+  @moduledoc """
+  Printing happening during the Benchmark stage.
+  """
+
+  alias Benchee.Conversion.Duration
+
+  @doc """
+  Shown when you try to define a benchmark with the same name twice.
+
+  How would you want to discern those anyhow?
+  """
+  def duplicate_benchmark_warning(name) do
+    IO.puts "You already have a job defined with the name \"#{name}\", you can't add two jobs with the same name!"
+  end
+
+  @doc """
+  Prints general information such as system information and estimated
+  benchmarking time.
+  """
+  def configuration_information(%{config: %{print: %{configuration: false}}}) do
+    nil
+  end
+  def configuration_information(%{jobs: jobs, system: sys, config: config}) do
+    system_information(sys)
+    suite_information(jobs, config)
+  end
+
+  defp system_information(%{erlang: erlang_version, elixir: elixir_version}) do
+    IO.puts "Elixir #{elixir_version}"
+    IO.puts "Erlang #{erlang_version}"
+  end
+
+  defp suite_information(jobs, %{parallel: parallel,
+                                 time: time,
+                                 warmup: warmup,
+                                 inputs: inputs}) do
+    warmup_seconds = time_precision Duration.scale(warmup, :second)
+    time_seconds = time_precision Duration.scale(time, :second)
+    job_count = map_size jobs
+    exec_time = warmup_seconds + time_seconds
+    total_time = time_precision(job_count * inputs_count(inputs) * exec_time)
+
+    IO.puts "Benchmark suite executing with the following configuration:"
+    IO.puts "warmup: #{warmup_seconds}s"
+    IO.puts "time: #{time_seconds}s"
+    IO.puts "parallel: #{parallel}"
+    IO.puts "inputs: #{inputs_out(inputs)}"
+    IO.puts "Estimated total run time: #{total_time}s"
+    IO.puts ""
+  end
+
+  defp inputs_count(nil), do: 1 # no input specified still executes
+  defp inputs_count(inputs), do: map_size(inputs)
+
+  defp inputs_out(nil), do: "none specified"
+  defp inputs_out(inputs) do
+    inputs
+    |> Map.keys
+    |> Enum.join(", ")
+  end
+
+  @round_precision 2
+  defp time_precision(float) do
+    Float.round(float, @round_precision)
+  end
+
+  @doc """
+  Prints a notice which job is currently being benchmarked.
+  """
+  def benchmarking(_, %{print: %{benchmarking: false}}), do: nil
+  def benchmarking(name, _config) do
+    IO.puts "Benchmarking #{name}..."
+  end
+
+  @doc """
+  Prints a warning about accuracy of benchmarks when the function is super fast.
+  """
+  def fast_warning do
+    IO.puts """
+    Warning: The function you are trying to benchmark is super fast, making measures more unreliable! See: https://github.com/PragTob/benchee/wiki/Benchee-Warnings#fast-execution-warning
+
+    You may disable this warning by passing print: [fast_warning: false] as
+    configuration options.
+    """
+  end
+
+  @doc """
+  Prints an informative message about which input is currently being
+  benchmarked, when multiple inputs were specified.
+  """
+  def input_information(_, %{print: %{benchmarking: false}}) do
+    nil
+  end
+  def input_information(input_name, _config) do
+    if input_name != Benchee.Benchmark.no_input do
+      IO.puts "\nBenchmarking with input #{input_name}:"
+    end
+  end
+
+end
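The "Estimated total run time" printed by `suite_information/2` in the file above is the job count times the input count times (warmup + time). A minimal standalone sketch of that arithmetic follows, assuming warmup and time are already given in seconds; the module `RunTimeEstimate` and its `estimate/4` function are hypothetical names for illustration and are not part of Benchee.

```elixir
defmodule RunTimeEstimate do
  # Hypothetical helper, not part of Benchee: mirrors the arithmetic of
  # suite_information/2, with warmup and time already expressed in seconds.
  def estimate(job_count, inputs, warmup_seconds, time_seconds) do
    exec_time = warmup_seconds + time_seconds
    # multiply by 1.0 so Float.round/2 always receives a float
    Float.round(job_count * inputs_count(inputs) * exec_time * 1.0, 2)
  end

  # Without inputs every job still runs once.
  defp inputs_count(nil), do: 1
  defp inputs_count(inputs), do: map_size(inputs)
end

# 2 jobs, 3 inputs, 2s warmup plus 5s measurement per job and input
IO.inspect(RunTimeEstimate.estimate(2, %{"a" => 1, "b" => 2, "c" => 3}, 2, 5))
```

With no inputs configured (`nil`), the estimate reduces to job count times (warmup + time), which is why `inputs_count(nil)` returns 1 rather than 0.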

changed

lib/benchee/statistics.ex

@@ -65,7 +65,7 @@ defmodule Benchee.Statistics do
   """
   def statistics(suite = %{run_times: run_times_per_input}) do
     statistics = run_times_per_input
-                 |> map_values(&Statistics.job_statistics/1)
+                 |> p_map_values(&Statistics.job_statistics/1)

     Map.put suite, :statistics, statistics
   end

changed

lib/benchee/utility/file_creation.ex

@@ -50,8 +50,9 @@ defmodule Benchee.Utility.FileCreation do
   end

   @doc """
-  Gets file name/path and the input name together.
+  Gets file name/path, the input name and others together.

+  Takes a list of values to interleave or just a single value.
   Handles the special no_input key to do no work at all.

   ## Examples
@@ -65,12 +66,51 @@ defmodule Benchee.Utility.FileCreation do
       iex> Benchee.Utility.FileCreation.interleave("bench/abc.csv", "Big Input")
       "bench/abc_big_input.csv"

+      iex> Benchee.Utility.FileCreation.interleave("bench/abc.csv",
+      ...> ["Big Input"])
+      "bench/abc_big_input.csv"
+
+      iex> Benchee.Utility.FileCreation.interleave("abc.csv", [])
+      "abc.csv"
+
+      iex> Benchee.Utility.FileCreation.interleave("bench/abc.csv",
+      ...> ["Big Input", "Comparison"])
+      "bench/abc_big_input_comparison.csv"
+
+      iex> Benchee.Utility.FileCreation.interleave("bench/A B C.csv",
+      ...> ["Big Input", "Comparison"])
+      "bench/A B C_big_input_comparison.csv"
+
+      iex> Benchee.Utility.FileCreation.interleave("bench/abc.csv",
+      ...> ["Big Input", "Comparison", "great Stuff"])
+      "bench/abc_big_input_comparison_great_stuff.csv"
+
       iex> marker = Benchee.Benchmark.no_input
       iex> Benchee.Utility.FileCreation.interleave("abc.csv", marker)
       "abc.csv"
+      iex> Benchee.Utility.FileCreation.interleave("abc.csv", [marker])
+      "abc.csv"
+      iex> Benchee.Utility.FileCreation.interleave("abc.csv",
+      ...> [marker, "Comparison"])
+      "abc_comparison.csv"
+      iex> Benchee.Utility.FileCreation.interleave("abc.csv",
+      ...> ["Something cool", marker, "Comparison"])
+      "abc_something_cool_comparison.csv"
   """
+  def interleave(filename, names) when is_list(names) do
+    file_names = names
+                 |> Enum.map(&to_filename/1)
+                 |> prepend(Path.rootname(filename))
+                 |> Enum.reject(fn(string) -> String.length(string) < 1 end)
+                 |> Enum.join("_")
+    file_names <> Path.extname(filename)
+  end
   def interleave(filename, name) do
-    Path.rootname(filename) <> to_filename(name) <> Path.extname(filename)
+    interleave(filename, [name])
+  end
+
+  defp prepend(list, item) do
+    [item | list]
   end

   defp to_filename(name_string) do
@@ -78,7 +118,7 @@ defmodule Benchee.Utility.FileCreation do
     case name_string do
       ^no_input -> ""
       _ ->
-        String.downcase("_" <> String.replace(name_string, " ", "_"))
+        String.downcase(String.replace(name_string, " ", "_"))
     end
   end
 end

changed

lib/benchee/utility/map_value.ex

@@ -23,6 +23,30 @@ defmodule Benchee.Utility.MapValues do
     |> Map.new
   end

+  @doc """
+  Parallel map values of a map keeping the keys intact.
+
+  ## Examples
+
+      iex> Benchee.Utility.MapValues.p_map_values(%{a: %{b: 2, c: 0}},
+      ...> fn(value) -> value + 1 end)
+      %{a: %{b: 3, c: 1}}
+
+      iex> Benchee.Utility.MapValues.p_map_values(%{a: %{b: 2, c: 0}, d: %{e: 2}},
+      ...> fn(value) -> value + 1 end)
+      %{a: %{b: 3, c: 1}, d: %{e: 3}}
+  """
+  def p_map_values(map, function) do
+    map
+    |> Enum.map(fn({key, child_map}) ->
+         {key, Task.async(fn -> do_map_values(child_map, function) end)}
+       end)
+    |> Enum.map(fn({key, task}) ->
+         {key, Task.await(task)}
+       end)
+    |> Map.new
+  end
+
   defp do_map_values(child_map, function) do
     child_map
     |> Enum.map(fn({key, value}) -> {key, function.(value)} end)
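The `p_map_values/2` addition in the file above starts one task per top-level key and only then awaits the results, so the child maps are processed concurrently. A minimal standalone sketch of the same pattern follows, assuming a map of maps as input; the module name `ParallelSketch` and the helper `map_child/2` are hypothetical and this is not Benchee's own module. Note that `Task.await` defaults to a 5000 ms timeout, so long-running work would need an explicit timeout argument.

```elixir
defmodule ParallelSketch do
  # Hypothetical module for illustration; not Benchee's implementation.
  def p_map_values(map, function) do
    map
    |> Enum.map(fn {key, child_map} ->
      # one task per top-level key, all started before any result is awaited
      {key, Task.async(fn -> map_child(child_map, function) end)}
    end)
    |> Enum.map(fn {key, task} -> {key, Task.await(task)} end)
    |> Map.new()
  end

  # Applies the function to every value of one child map, keeping its keys.
  defp map_child(child_map, function) do
    child_map
    |> Enum.map(fn {key, value} -> {key, function.(value)} end)
    |> Map.new()
  end
end

IO.inspect(ParallelSketch.p_map_values(%{a: %{b: 2, c: 0}, d: %{e: 2}}, &(&1 + 1)))
```

Splitting the work into two `Enum.map` passes matters: awaiting inside the first pass would run the tasks one after another instead of in parallel.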

changed

mix.exs

@@ -1,7 +1,7 @@
 defmodule Benchee.Mixfile do
   use Mix.Project

-  @version "0.6.0"
+  @version "0.7.0"

   def project do
     [
@@ -15,6 +15,11 @@ defmodule Benchee.Mixfile do
       deps: deps(),
       docs: [source_ref: @version],
       package: package(),
+      test_coverage: [tool: ExCoveralls],
+      preferred_cli_env: [
+        "coveralls": :test, "coveralls.detail": :test,
+        "coveralls.post": :test, "coveralls.html": :test,
+        "coveralls.travis": :test],
       name: "Benchee",
       source_url: "https://github.com/PragTob/benchee",
       description: """
@@ -38,6 +43,7 @@ defmodule Benchee.Mixfile do
       {:credo, "~> 0.4", only: :dev},
       {:ex_doc, "~> 0.11", only: :dev},
       {:earmark, "~> 1.0.1", only: :dev},
+      {:excoveralls, "~> 0.6.1", only: :test},
       {:inch_ex, "~> 0.5", only: :docs}
     ]
   end